Last Updated on September 12, 2022

You can retry a lock in a loop and back off the retries as a linear or exponential function of the number of failed attempts.

In this tutorial you will discover how to develop mutex lock retries with a back-off function.

Let’s get started.

Problem of Lock Contention

Lock contention is a problem that may occur when using mutual exclusion (mutex) locks.

Lock contention is typically described in the context of operating system internals, but it may also be a problem at a high level, such as in application programming with concurrency primitives.

It describes a situation where a lock protects a critical section and multiple threads attempt to acquire the lock at the same time.

This is a problem because only one thread can acquire a lock, and all other threads must block, and wait for the lock to become available. This is an inefficient use of resources, as those threads could be executing and completing tasks in the application.


One approach to reducing contention for a lock is to retry getting the lock after a back-off interval and to increase this interval with the number of retries.

What is a retry with a back-off and how can we implement this in Python?


What is Lock Retry With Back-Off?

One approach to reducing lock contention is to retry the lock with a back-off.

If a thread attempts to acquire a lock, rather than block until the lock becomes available, the thread may try to acquire the lock again after some interval of time, called a back-off interval.

Whenever a thread sees the lock has become free but fails to acquire it, it backs off before retrying.

— Page 154, The Art of Multiprocessor Programming, 2020.

As attempts to acquire the lock fail, the back-off interval can be increased as a function of the number of failed attempts.

For example:

- Linear Back-Off: Back-off interval increases as a linear function of the number of attempts to acquire the lock.

- Exponential Back-Off: Back-off interval increases as an exponential function (e.g. doubles) of the number of attempts to acquire the lock.

An exponential back-off function that doubles for each failed attempt to acquire a lock is a popular approach used in concurrency programming, and in other domains for the broader problem of resource contention.

A good rule of thumb is that the larger the number of unsuccessful tries, the higher the likely contention, so the longer the thread should back off. To incorporate this rule, each time a thread tries and fails to get the lock, it doubles the expected back-off time, up to a fixed maximum.

— Page 154, The Art of Multiprocessor Programming, 2020.
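As a minimal sketch of the rule quoted above, the helper below doubles a back-off interval with each failed attempt and clamps it at a fixed maximum. The function name `capped_doubling` and the `base` and `cap` values are assumptions chosen for illustration, not values taken from the book.

```python
def capped_doubling(attempts, base=0.01, cap=1.0):
    """Return base * 2**attempts seconds, but never more than cap."""
    return min(cap, base * (2 ** attempts))

# back-off limit for the first ten failed attempts
schedule = [capped_doubling(n) for n in range(10)]
print(schedule)
```

The cap matters because an uncapped doubling interval quickly becomes longer than any realistic critical section.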

The back-off interval may be seeded with a random initial interval to avoid multiple threads attempting to acquire the lock at the same time, in lock-step.

To ensure that competing threads do not fall into lockstep, each backing off and then trying again to acquire the lock at the same time, the thread backs off for a random duration.

— Page 154, The Art of Multiprocessor Programming, 2020.
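The random duration can be sketched in a line or two. Here each thread would draw its delay uniformly between zero and the current back-off limit; the helper name `random_backoff` and the 0.5 second limit are assumptions for illustration.

```python
from random import uniform

def random_backoff(limit):
    """Pick a random duration between 0 and limit seconds."""
    return uniform(0.0, limit)

# three threads sharing the same limit are unlikely to pick the same delay
delays = [random_backoff(0.5) for _ in range(3)]
print(delays)
```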

Using a back-off allows a thread waiting on a contended lock to perform other tasks while waiting and is a type of busy waiting related to a spinlock.

Next, let’s take a closer look at busy waiting and spinlocks as they are related to retrying a lock with a back-off.

Lock Retry With Back-Off is a Spinlock

Retrying a lock with a back-off is a type of busy waiting called a spinlock.

Busy waiting, also called spinning, refers to a thread that repeatedly checks a condition.

It is referred to as “busy” or “spinning” because the thread continues to execute the same code, such as an if-statement within a while-loop, achieving a wait by executing code (e.g. keeping busy).

- Busy Wait: When a thread “waits” for a condition by repeatedly checking for the condition in a loop.

Busy waiting is typically undesirable in concurrent programming as the tight loop of checking a condition consumes CPU cycles unnecessarily, occupying a CPU core. As such, it is sometimes referred to as an anti-pattern of concurrent programming, a pattern to be avoided.
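To make the cost concrete, here is a small sketch of a busy wait on a shared flag. The names `flag`, `checks` and `setter` are hypothetical; the point is only that the waiting thread tests the condition many times before it becomes true.

```python
from threading import Thread
from time import sleep

flag = {'ready': False}   # shared state tested by the busy wait
checks = 0                # number of times the condition was tested

def setter():
    # make the condition true after a short delay
    sleep(0.05)
    flag['ready'] = True

thread = Thread(target=setter)
thread.start()
# busy wait: keep testing the condition until it becomes true
while not flag['ready']:
    checks += 1
thread.join()
print(f'Condition checked {checks} times')
```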


A spinlock is a type of lock where a thread must perform a busy wait if it is already locked.

- Spinlock: Using a busy wait loop to acquire a lock.

Spinlocks are typically implemented at a low level, such as in the underlying operating system. Nevertheless, we can implement a spinlock at a high level in our program as a concurrency primitive.

A spinlock may be a desirable concurrency primitive that gives fine grained control over both how long a thread may wait for a lock (e.g. loop iterations or wait time) and what the thread is able to do while waiting for the lock.
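As one possible sketch of that control, the hypothetical helper `acquire_within()` below retries a lock without blocking and gives up once a time limit has passed. The `limit` and `pause` parameters are assumptions for illustration.

```python
from threading import Lock
from time import monotonic, sleep

def acquire_within(lock, limit, pause=0.01):
    """Retry the lock without blocking until limit seconds have passed."""
    deadline = monotonic() + limit
    while True:
        if lock.acquire(blocking=False):
            return True
        if monotonic() >= deadline:
            return False
        # the thread could do other useful work here instead of sleeping
        sleep(pause)

lock = Lock()
print(acquire_within(lock, 0.1))   # the lock is free, so this succeeds
print(acquire_within(lock, 0.1))   # the lock is now held, so this gives up
lock.release()
```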


Retrying a lock with a back-off is a type of spinlock where the thread attempts to acquire the lock each iteration of the busy wait loop.

Each time the thread fails to acquire the lock, it will wait for a back-off interval that will increase as a function of the number of retries.

Now that we know how lock retrying with a back-off is related to busy-waiting and spinlocks, let’s look at how we might implement it in Python.


How to Implement a Lock Retry With Back-Off

In this section, we will develop a retry for mutex locks with a back-off interval that is a function of the number of retries.

Mutex Locks

Python provides a mutual exclusion (mutex) lock via the threading.Lock class.

A mutex lock ensures that only one thread at a time executes a critical section of code, while all other threads trying to execute the same code must wait until the thread holding the lock finishes the critical section and releases the lock.


An instance of the lock can be created and then acquired by threads before accessing a critical section, and released after the critical section.

For example:

```python
...
# create a lock
lock = Lock()
# acquire the lock
lock.acquire()
# critical section
# ...
# release the lock
lock.release()
```


Lock Retry (Spinlocks)

Only one thread can have the lock at any time.

If locked, a thread attempting to acquire the lock will block until the lock is released by the thread currently holding the lock.

Instead of blocking until the lock is available, we can create a spinlock that will check the status of the lock each iteration, and if available, will acquire the lock and execute the critical section.

We can attempt to acquire the lock without blocking by setting the “blocking” argument to False. The function will return True if the lock was acquired or False otherwise. Therefore, we can attempt to acquire the lock in an if-statement.

For example:

```python
...
# attempt to acquire the lock
if lock.acquire(blocking=False):
    # ...
```
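A quick way to see the return values is to try the lock twice from the same thread. A `threading.Lock` is not reentrant, so the second non-blocking attempt fails while the lock is still held. The variable names `first` and `second` are only for illustration.

```python
from threading import Lock

lock = Lock()
first = lock.acquire(blocking=False)    # the lock is free
second = lock.acquire(blocking=False)   # the lock is already held
print(first, second)
lock.release()
```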

We can use this in a busy loop that will execute the critical section and break the loop.

For example:

```python
...
# busy wait loop for a spinlock
while True:
    # attempt to acquire the lock
    if lock.acquire(blocking=False):
        # execute the critical section
        # ...
        # release the lock
        lock.release()
        # exit the spinlock
        break
```

Each iteration of the loop, we attempt to acquire the lock without blocking. If acquired, the loop will execute the critical section, release the lock then break the loop.
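One detail worth guarding against is a critical section that raises an exception before the lock is released. A minimal sketch, assuming a hypothetical `critical_section()` function that fails, wraps the work in try/finally so the release always runs:

```python
from threading import Lock

lock = Lock()

def critical_section():
    # hypothetical work that fails part-way through
    raise ValueError('something went wrong')

# busy wait loop for a spinlock
while True:
    if lock.acquire(blocking=False):
        try:
            critical_section()
        except ValueError as e:
            print(f'Task failed: {e}')
        finally:
            # always runs, so the lock cannot be left held
            lock.release()
        break

print(lock.locked())
```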

Lock Retry With Fixed Timeout (Blocking Spinlocks)

A limitation of the spinlock is the increased computational burden of executing a tight loop repeatedly.

The computational burden of the busy wait loop can be lessened by allowing the thread to block.

This can be achieved by having the thread call the time.sleep() function to block for a fixed interval if the lock cannot be acquired.

For example:

```python
...
# attempt to acquire the lock without blocking
if lock.acquire(blocking=False):
    # ...
else:
    # block for a fixed interval before the next retry
    time.sleep(1.0)
```

A problem with this approach is that each thread will attempt to retry the lock after the same time interval. This is a problem if many threads are started at the same time and attempt to execute this same code block.

Another problem is that the longer that the lock is unavailable, the higher the contention is for the lock. Therefore, it would be wise for the thread to try less often as more time passes.
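As an aside, `threading.Lock.acquire()` also accepts a `timeout` argument, which blocks for at most that many seconds and returns False if the lock was not acquired. This is a different technique from sleeping between non-blocking attempts, but it gives a similar fixed-interval retry. The sketch below holds the lock in the same thread so that every attempt times out; the limit of three attempts is an assumption for illustration.

```python
from threading import Lock

lock = Lock()
lock.acquire()   # hold the lock so each attempt below must time out

attempts = 0
acquired = False
while attempts < 3 and not acquired:
    # block for at most 0.05 seconds waiting for the lock
    acquired = lock.acquire(timeout=0.05)
    attempts += 1

print(acquired, attempts)
lock.release()
```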

Next, we can look at how to transform the fixed waiting interval between retries to a random back-off interval that increases as a function of the number of retries.

Lock Retry with Linear Back-Off

We can back off as a linear function of the number of retries.

This can be achieved by seeding the back-off interval with a random fraction of a second between 0 and 1.

This can be achieved with the random.random() function. We can also keep track of the number of retries, starting with zero.

```python
...
# initialize the number of attempts
attempts = 0
# initialize the back-off interval
initial_wait = random()
```

The initial back-off may be any interval we like, such as between 0 and 10 milliseconds, 0 and 100 milliseconds or 0 and 1,000 milliseconds (1 second).

We use a random value to avoid all threads attempting to retry a lock at the same time in lock-step.

The back-off interval can be calculated simply as the number of retries multiplied by the randomly seeded back-off interval.

- Back-Off = Initial Interval * Number of Retries

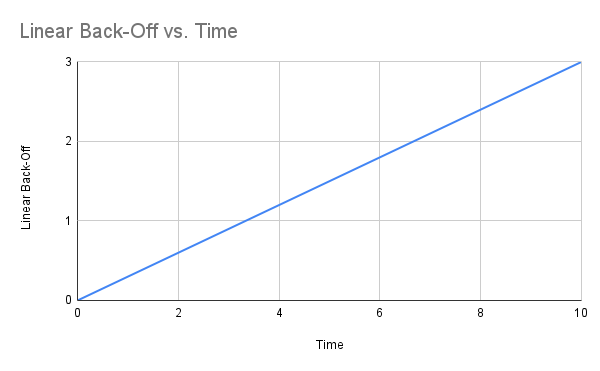

For example, if the random back-off interval was 0.3 seconds, then the linear back-off interval between retries would be calculated as follows for the first ten retries:

| Retries | Linear Back-Off (seconds) |
|---------|---------------------------|
| 0       | 0                         |
| 1       | 0.3                       |
| 2       | 0.6                       |
| 3       | 0.9                       |
| 4       | 1.2                       |
| 5       | 1.5                       |
| 6       | 1.8                       |
| 7       | 2.1                       |
| 8       | 2.4                       |
| 9       | 2.7                       |
| 10      | 3                         |
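The values in the table can be reproduced with a few lines, assuming the same example seed of 0.3 seconds:

```python
initial_wait = 0.3   # the example seed interval from the table

for retries in range(11):
    # linear back-off: seed interval multiplied by the number of retries
    wait = retries * initial_wait
    print(f'{retries:>2}  {wait:.1f}')
```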

We can make this clear with a plot of the number of retries (time) vs the back-off interval.

This back-off interval can be calculated each iteration of the busy wait loop and used in the call to time.sleep().

For example:

```python
...
# busy wait loop for a spinlock
while True:
    # attempt to acquire the lock
    if lock.acquire(blocking=False):
        # execute the critical section
        # ...
        # release the lock
        lock.release()
        # exit the spinlock
        break
    else:
        # calculate back-off interval
        wait = attempts * initial_wait
        # block before next lock retry
        time.sleep(wait)
        # count the failed attempt
        attempts += 1
```

Lock Retry with Exponential Back-Off

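A popular retry approach is to calculate the back-off time as an exponential function of the number of retries.

A common exponential function doubles the length of the back-off with each retry, which corresponds to multiplying the initial interval by 2 raised to the number of retries.

For simplicity, this tutorial instead multiplies the randomly seeded wait interval by the square of the number of retries. Strictly speaking this is quadratic rather than exponential growth, but it has the same effect of backing off faster than the linear function.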

For example:

- Back-Off = Initial Interval * (Number of Retries)^2

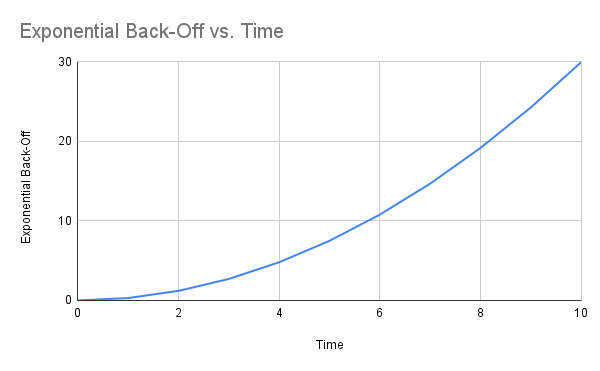

For example, if the random back-off interval was 0.3 seconds, then the squared back-off interval between retries would be calculated as follows for the first ten retries:

| Retries | Back-Off (seconds) |
|---------|--------------------|
| 0       | 0                  |
| 1       | 0.3                |
| 2       | 1.2                |
| 3       | 2.7                |
| 4       | 4.8                |
| 5       | 7.5                |
| 6       | 10.8               |
| 7       | 14.7               |
| 8       | 19.2               |
| 9       | 24.3               |
| 10      | 30                 |
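The values in the table come from squaring the number of retries. For comparison, the sketch below prints them next to a back-off that doubles with each retry, again assuming a seed of 0.3 seconds. The variable names `squared` and `doubled` are only for illustration.

```python
initial_wait = 0.3   # the example seed interval from the table

for retries in range(6):
    squared = retries ** 2 * initial_wait   # growth used in the table
    doubled = initial_wait * 2 ** retries   # a true doubling back-off
    print(f'{retries}  {squared:5.1f}  {doubled:5.1f}')
```

Note that the doubling version never waits zero seconds, and that it overtakes the squared version once the number of retries is large enough.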

We can make this clear with a plot of the number of retries (time) vs the back-off interval.

This back-off interval can be calculated each iteration of the busy wait loop and used in the call to time.sleep().

For example:

```python
...
# busy wait loop for a spinlock
while True:
    # attempt to acquire the lock
    if lock.acquire(blocking=False):
        # execute the critical section
        # ...
        # release the lock
        lock.release()
        # exit the spinlock
        break
    else:
        # calculate back-off interval from the square of the retries
        wait = attempts**2.0 * initial_wait
        # block before next lock retry
        time.sleep(wait)
        # count the failed attempt
        attempts += 1
```
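Because the two loops differ only in how the wait is calculated, the retry logic can be factored into one helper that takes the back-off function as an argument. This is a sketch under stated assumptions: the names `acquire_with_backoff`, `linear`, `squared` and the `scale` parameter are hypothetical and not part of the threading API.

```python
from random import random
from threading import Lock
from time import sleep

def linear(attempts, initial_wait):
    return attempts * initial_wait

def squared(attempts, initial_wait):
    return attempts ** 2 * initial_wait

def acquire_with_backoff(lock, backoff, scale=0.01):
    """Retry the lock, sleeping backoff(attempts, initial_wait) between tries."""
    attempts = 0
    initial_wait = random() * scale   # small random seed interval
    while not lock.acquire(blocking=False):
        sleep(backoff(attempts, initial_wait))
        attempts += 1
    # the caller now holds the lock and must release it
    return attempts

lock = Lock()
failed = acquire_with_backoff(lock, linear)
print(f'Acquired after {failed} failed attempts')
lock.release()
```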

Now that we know how to retry a lock with a random and increasing back-off interval, let’s look at some worked examples.


Example of Lock Contention

Before we explore retrying a lock with a back-off, let’s first develop a simple example with lock contention.

In this example, we will have a task that contains a critical section protected by a mutex lock. The critical section blocks for some random fraction of five seconds then reports the thread identifier. We will start three threads that all attempt to execute the task, contend for the same lock and wait their turn.

First, we can define a task function that takes the shared lock and a unique integer to identify the thread. It acquires the lock, blocks for a fraction of five seconds, then reports a message.

The task() function below implements this.

```python
# task executed in a new thread
def task(lock, identifier):
    # acquire the lock
    lock.acquire()
    # critical section
    sleep(random() * 5)
    print(f'Thread {identifier} done')
    lock.release()
```

The main thread can then create the shared lock.

```python
...
# create the shared lock
lock = Lock()
```

We can then create three threading.Thread instances to execute our task() function and provide a unique integer for each thread.

This can be achieved in a list comprehension.

```python
...
# create threads
threads = [Thread(target=task, args=(lock,i)) for i in range(3)]
```

The main thread can then start all three threads, then block until all threads terminate.

```python
...
# start the threads
for thread in threads:
    thread.start()
# wait for threads to terminate
for thread in threads:
    thread.join()
```

Tying this together, the complete example is listed below.

```python
# SuperFastPython.com
# example of lock contention
from random import random
from time import sleep
from threading import Thread
from threading import Lock

# task executed in a new thread
def task(lock, identifier):
    # acquire the lock
    lock.acquire()
    # critical section
    sleep(random() * 5)
    print(f'Thread {identifier} done')
    lock.release()

# create the shared lock
lock = Lock()
# create threads
threads = [Thread(target=task, args=(lock,i)) for i in range(3)]
# start the threads
for thread in threads:
    thread.start()
# wait for threads to terminate
for thread in threads:
    thread.join()
```

Running the example first creates the shared lock then creates and starts all three threads.

Each thread contends for the lock and blocks until it is its turn to acquire the lock, sleep for a moment and report a message.

```
Thread 0 done
Thread 1 done
Thread 2 done
```
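The same blocking behavior can also be written with the lock's context manager interface, which acquires the lock on entry and releases it on exit even if the critical section raises an exception. In this sketch the sleep is shortened to a fraction of 0.1 seconds, an assumption made only so it runs quickly.

```python
from random import random
from time import sleep
from threading import Thread
from threading import Lock

# task executed in a new thread
def task(lock, identifier):
    # acquire the lock on entry and release it on exit
    with lock:
        sleep(random() * 0.1)
        print(f'Thread {identifier} done')

lock = Lock()
threads = [Thread(target=task, args=(lock, i)) for i in range(3)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
```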

Next, let’s look at how we might update the example to retry the lock in a loop.

Lock Retry With a Spinlock

We can attempt to acquire the lock in a loop without blocking.

This is called a spinlock and allows the thread to perform other activities while waiting for the lock to become available.

We can update the previous example so that threads use a spinlock while waiting for the lock.

This requires first that we loop forever.

```python
...
# loop until lock acquired and task complete
while True:
    # ...
```

Next, we can call the acquire() function with the “blocking” argument set to False so that the call does not block. We can then check the status of the return to see if the lock was acquired.

```python
...
# attempt to acquire the lock
if lock.acquire(blocking=False):
    # ...
```

If the lock was acquired, we can execute the critical section, release the lock and break out of the busy wait loop of the spinlock.

```python
...
# critical section
sleep(random() * 5)
print(f'Thread {identifier} done')
# release lock
lock.release()
break
```

Otherwise, the loop repeats and the thread attempts to acquire the lock again.

Tying this together, the complete example is listed below.

```python
# SuperFastPython.com
# example of lock contention with spin lock
from random import random
from time import sleep
from threading import Thread
from threading import Lock

# task executed in a new thread
def task(lock, identifier):
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break

# create the shared lock
lock = Lock()
# create threads
threads = [Thread(target=task, args=(lock,i)) for i in range(3)]
# start the threads
for thread in threads:
    thread.start()
# wait for threads to terminate
for thread in threads:
    thread.join()
```

Running the example first creates the shared lock then creates and starts all three threads.

Each thread contends for the lock. One thread at a time will acquire it and execute the critical section.

Waiting threads will no longer block. Instead they execute their busy wait loop as fast as possible, retrying the lock each iteration.

```
Thread 0 done
Thread 2 done
Thread 1 done
```

Using a spinlock gives the benefit of allowing threads to perform other tasks while waiting, but is computationally wasteful. It also does not reduce the lock contention, as all threads are retrying the lock all the time simultaneously.
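To get a feel for how wasteful this is, we can count the failed attempts a spinning thread makes while another thread holds the lock. This is only a sketch: the `spun` event, the `result` dictionary and the 0.05 second hold time are assumptions, and the count will vary from machine to machine.

```python
from threading import Thread, Lock, Event
from time import sleep

lock = Lock()
spun = Event()   # set once the worker has failed at least one attempt
result = {}

def task(lock):
    failed = 0
    # spin without any back-off, counting failed attempts
    while True:
        if lock.acquire(blocking=False):
            lock.release()
            break
        failed += 1
        spun.set()
    result['failed'] = failed

lock.acquire()                 # the main thread holds the lock
worker = Thread(target=task, args=(lock,))
worker.start()
spun.wait()                    # make sure the worker has started spinning
sleep(0.05)                    # simulate a short critical section
lock.release()
worker.join()
print(f"Failed attempts while spinning: {result['failed']}")
```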

Next, let’s look at how we can add some waiting to the spin-lock.

Lock Retry With A Random Wait

The spinlock can be updated so that a thread waits for a limited amount of time before retrying the lock.

This can be achieved by having the thread not attempt to acquire the lock for some interval. A fixed interval could be used, such as 100 milliseconds.

The thread could spin until the interval has elapsed and then attempt to acquire the lock again. Alternatively, the thread could block until the interval has elapsed. This could be achieved with a call to time.sleep() if the lock is not acquired.

A problem with this approach is that it would mean that all threads that execute the function at the same time will retry the lock at the same time, in lock-step.

An alternative approach could be for each thread to choose a random wait interval before the spinlock and use this interval for each retry.

For example:

```python
...
# choose a random wait interval
wait_interval = random()
```

This could be used each time the lock is retried.

For example:

```python
...
# attempt to acquire the lock
if lock.acquire(blocking=False):
    # ...
else:
    # block for an interval
    sleep(wait_interval)
```

The example of using a spinlock in the previous section can be updated to use this approach.

The updated task() function with these changes is listed below.

```python
# task executed in a new thread
def task(lock, identifier):
    # choose a random wait interval
    wait_interval = random()
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break
        else:
            # block for an interval
            sleep(wait_interval)
```

Tying this together, the complete example is listed below.

```python
# SuperFastPython.com
# example of lock contention with spinlock that blocks
from random import random
from time import sleep
from threading import Thread
from threading import Lock

# task executed in a new thread
def task(lock, identifier):
    # choose a random wait interval
    wait_interval = random()
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break
        else:
            # block for an interval
            sleep(wait_interval)

# create the shared lock
lock = Lock()
# create threads
threads = [Thread(target=task, args=(lock,i)) for i in range(3)]
# start the threads
for thread in threads:
    thread.start()
# wait for threads to terminate
for thread in threads:
    thread.join()
```

Running the example first creates the shared lock then creates and starts all three threads.

Each thread contends for the lock. One thread at a time will acquire it and execute the critical section.

Waiting threads will block for a fraction of a second while waiting for the lock to become available. Each thread will use its own unique wait interval.

This will reduce the simultaneous contention on the lock. The use of a random wait interval makes it unlikely for the threads to fall into lock-step when trying the lock.

```
Thread 0 done
Thread 1 done
Thread 2 done
```

The longer the lock remains unavailable, the more likely that the contention for the lock is high.

As such, it can be a good idea to retry the lock less often in proportion to the number of retries that have been made.

Next, we will look at how to use a linear back-off before retrying the lock.

Lock Retry With Linear Back-Off

We can use a wait period that increases as a linear function of the number of failed retries of the lock.

For example, we can calculate the wait interval each iteration of the spinlock busy wait loop as the number of retries multiplied by the initial random wait interval.

This requires first that the number of lock retry attempts be recorded, initialized to zero alongside the initial randomly chosen wait interval before the start of the spinlock.

```python
...
# initialize the number of retries of the lock
attempts = 0
# initialize the initial wait interval
wait_interval = random()
```

Next, the wait interval can be calculated as a linear function of the number of failed lock retries.

```python
...
# calculate wait interval as a linear function of the number of retries
wait = attempts * wait_interval
```

This interval can then be used to prevent the thread from attempting to acquire the lock again.

The thread could spin for this interval, or could block with a call to time.sleep().

For example:

```python
...
# block the thread before retrying
sleep(wait)
```

Finally, the number of failed attempts needs to be incremented.

```python
...
# increase the number of attempts
attempts += 1
```

We can update the spinlock with the blocking wait from the previous section to use this linear back-off.

The updated task() function with these changes is listed below.

In addition, we also report the status of each thread while waiting to get an idea of the number of lock retries attempted by each thread.

```python
# task executed in a new thread
def task(lock, identifier):
    # initialize the number of retries of the lock
    attempts = 0
    # initialize the initial wait interval
    wait_interval = random()
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break
        else:
            # calculate wait interval as a linear function of the number of retries
            wait = attempts * wait_interval
            # report status
            print(f'.thread {identifier} waiting {wait:.2f} sec after {attempts} attempts')
            # block the thread before retrying
            sleep(wait)
            # increase the number of attempts
            attempts += 1
```

Tying this together, the complete example is listed below.

```python
# SuperFastPython.com
# example of lock contention with linear back-off
from random import random
from time import sleep
from threading import Thread
from threading import Lock

# task executed in a new thread
def task(lock, identifier):
    # initialize the number of retries of the lock
    attempts = 0
    # initialize the initial wait interval
    wait_interval = random()
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break
        else:
            # calculate wait interval as a linear function of the number of retries
            wait = attempts * wait_interval
            # report status
            print(f'.thread {identifier} waiting {wait:.2f} sec after {attempts} attempts')
            # block the thread before retrying
            sleep(wait)
            # increase the number of attempts
            attempts += 1

# create the shared lock
lock = Lock()
# create threads
threads = [Thread(target=task, args=(lock,i)) for i in range(3)]
# start the threads
for thread in threads:
    thread.start()
# wait for threads to terminate
for thread in threads:
    thread.join()
```

Running the example first creates the shared lock then creates and starts all three threads.

Each thread contends for the lock. One thread at a time will acquire it and execute the critical section.

Waiting threads execute the spinlock. Each iteration they calculate a wait period as a linear function of the number of failed attempts acquiring the lock and the randomly chosen initial wait interval.

The longer each thread waits for the lock, the longer it will wait in the future.

On the first attempt, each waiting thread will not block. On the second attempt, each thread will block for the randomly chosen wait interval. On the third attempt, each thread will block for 2 times the initial wait interval, then 3 times, then 4 times, and so on.

A sample output from the program is listed below. Your results will differ given the use of random numbers.

```
.thread 1 waiting 0.00 sec after 0 attempts
.thread 1 waiting 0.56 sec after 1 attempts
.thread 2 waiting 0.00 sec after 0 attempts
.thread 2 waiting 0.61 sec after 1 attempts
.thread 1 waiting 1.13 sec after 2 attempts
.thread 2 waiting 1.22 sec after 2 attempts
Thread 0 done
.thread 2 waiting 1.84 sec after 3 attempts
Thread 1 done
Thread 2 done
```

The more attempts a thread makes at acquiring the lock, the higher the contention is for that lock. Therefore, back-off functions that grow faster than a linear amount of the number of retries may be preferred.

Next, let’s look at how we might use a back-off that grows faster than linearly with each failed retry.

Lock Retry With Exponential Back-Off

An exponential function grows in proportion to its current value, so the amount it grows by also grows with each step.

We can use a fast-growing function of the number of failed retries to calculate the wait time for the lock. This means that threads block for longer and longer intervals while waiting for the lock.

In this example, the back-off is calculated as the square of the number of failed retry attempts multiplied by the initial random wait interval. Strictly speaking this is quadratic rather than exponential growth; a back-off that doubles each retry would multiply the initial interval by 2 raised to the number of retries.

For example:

```python
...
# calculate wait interval as the square of the number of retries
wait = attempts**2.0 * wait_interval
```

We can update the retry with linear back-off from the previous section to use the exponential back-off function.

The updated task() function with this change is listed below.

```python
# task executed in a new thread
def task(lock, identifier):
    # initialize the number of retries of the lock
    attempts = 0
    # initialize the initial wait interval
    wait_interval = random()
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break
        else:
            # calculate wait interval as the square of the number of retries
            wait = attempts**2.0 * wait_interval
            # report status
            print(f'.thread {identifier} waiting {wait:.2f} sec after {attempts} attempts')
            # block the thread before retrying
            sleep(wait)
            # increase the number of attempts
            attempts += 1
```

Tying this together, the complete example is listed below.

```python
# SuperFastPython.com
# example of lock contention with exponential back-off
from random import random
from time import sleep
from threading import Thread
from threading import Lock

# task executed in a new thread
def task(lock, identifier):
    # initialize the number of retries of the lock
    attempts = 0
    # initialize the initial wait interval
    wait_interval = random()
    # loop until lock acquired and task complete
    while True:
        # attempt to acquire the lock
        if lock.acquire(blocking=False):
            # critical section
            sleep(random() * 5)
            print(f'Thread {identifier} done')
            # release lock
            lock.release()
            break
        else:
            # calculate wait interval as the square of the number of retries
            wait = attempts**2.0 * wait_interval
            # report status
            print(f'.thread {identifier} waiting {wait:.2f} sec after {attempts} attempts')
            # block the thread before retrying
            sleep(wait)
            # increase the number of attempts
            attempts += 1

# create the shared lock
lock = Lock()
# create threads
threads = [Thread(target=task, args=(lock,i)) for i in range(3)]
# start the threads
for thread in threads:
    thread.start()
# wait for threads to terminate
for thread in threads:
    thread.join()
```

Running the example first creates the shared lock then creates and starts all three threads.

Each thread contends for the lock. One thread at a time will acquire it and execute the critical section.

Waiting threads execute the spinlock. They attempt to acquire the lock without blocking.

If the attempt fails, a wait interval is calculated as the square of the number of failed retries multiplied by each thread’s randomly chosen initial wait interval.

The longer each thread waits for the lock, the longer it will wait in the future, and the increase itself grows with each retry.

On the first attempt, each waiting thread will not block. On the second attempt, each thread will block for the randomly chosen wait interval. On the third attempt, each thread will block for 4 times the initial wait, then 9 times, then 16 times, and so on.

A sample output from the program is listed below. Your results will differ given the use of random numbers.

```
.thread 1 waiting 0.00 sec after 0 attempts
.thread 1 waiting 0.52 sec after 1 attempts
.thread 2 waiting 0.00 sec after 0 attempts
.thread 2 waiting 0.45 sec after 1 attempts
.thread 2 waiting 1.80 sec after 2 attempts
.thread 1 waiting 2.07 sec after 2 attempts
Thread 0 done
.thread 1 waiting 4.66 sec after 3 attempts
Thread 2 done
Thread 1 done
```
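For comparison, here is a sketch of a back-off that doubles with each failed retry, in the spirit of the rule quoted earlier: each thread sleeps for a random duration up to a limit, and the limit doubles up to a fixed maximum. The constants `BASE` and `CAP`, the `log` list and the shortened critical section are assumptions chosen so the example runs quickly; they are not taken from the examples above.

```python
from random import random
from time import sleep
from threading import Thread
from threading import Lock

BASE = 0.01   # assumed initial back-off limit in seconds
CAP = 0.5     # assumed maximum back-off limit in seconds

# task executed in a new thread
def task(lock, identifier, log):
    limit = BASE
    while True:
        # attempt to acquire the lock without blocking
        if lock.acquire(blocking=False):
            try:
                # critical section
                sleep(random() * 0.1)
                log.append(identifier)
            finally:
                lock.release()
            break
        # back off for a random duration up to the current limit
        sleep(random() * limit)
        # double the limit for the next failed attempt, up to the cap
        limit = min(CAP, limit * 2)

lock = Lock()
log = []
threads = [Thread(target=task, args=(lock, i, log)) for i in range(3)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
print(f'Completion order: {log}')
```

Drawing a fresh random duration on every retry, rather than once per thread, keeps threads from drifting back into lock-step after several retries.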



Takeaways

You now know how to retry a lock with an exponential back-off.
