Don’t Leave

Performance to Chance

Accurately measure execution time

and know for sure!

Python can be slow, and milliseconds count!

Benchmarking is the path to faster Python.

- Measure with the time and timeit modules.

- Code less with helper functions and classes.

- Make confident decisions with robust results.

Don’t work in the dark. Squeeze every drop of efficiency from your Python code, today!

Heard enough? Jump to the book.

Otherwise, read on…

Benchmarking REALLY Matters

(…if performance matters to you)

Benchmarking is critical if you care about the performance of your code.

Benchmarking is not just a technical tool; it’s the heart of software craftsmanship.

It’s the systematic process that ensures your code operates efficiently and that you make informed decisions to improve it.

What is Benchmarking?

- At its core, benchmarking means measuring the execution time and resource usage of your code.

- It’s a powerful technique that reveals bottlenecks, highlights areas for enhancement, and confirms whether your changes actually improve performance.

Benchmarking isn’t just another step in the software development process; it’s the craft that transforms your code into a high-performance solution.

It can be the difference that elevates your software from functional to exceptional.

Benchmarking turns code optimization

from an art into a science!

Benchmarking provides you with data-driven insights.

By collecting and analyzing performance metrics, you can spot areas of improvement, optimize your code, and create faster, more resource-efficient software.

But benchmarking isn’t just about recording numbers; it’s a meticulous discipline that demands precision, controlled conditions, and deep project understanding.

As a software engineer, it’s your secret weapon.

Why Benchmark Execution Time?

There are many aspects of a program we could benchmark, but the most important factor is execution time.

Benchmarking code execution time is a fundamental practice for delivering efficient and responsive programs.

The main reason we benchmark execution time is to improve performance.

- Performance Boost: Benchmarking pinpoints bottlenecks and critical areas for code optimization by analyzing execution times. This precision guides focused optimization efforts.

We measure execution time so that we can improve it!

This typically involves a baseline measurement, then a measurement of performance after each change to confirm we moved performance in the right direction.
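Here is a minimal sketch of that workflow, assuming two hypothetical functions, `slow_sum()` as the baseline and `fast_sum()` as the changed version. It reports the difference in seconds and the speedup, taken here as the baseline time divided by the new time. A single run is noisy, so in practice you would repeat each measurement.

```python
import time

def slow_sum(n):
    # Hypothetical baseline: accumulate in an explicit Python loop
    total = 0
    for i in range(n):
        total += i
    return total

def fast_sum(n):
    # Hypothetical change: let the built-in sum() do the looping
    return sum(range(n))

def measure(func, n):
    # Time a single call with the high-resolution performance counter
    start = time.perf_counter()
    func(n)
    return time.perf_counter() - start

baseline = measure(slow_sum, 1_000_000)
changed = measure(fast_sum, 1_000_000)

difference = baseline - changed  # seconds saved by the change
speedup = baseline / changed     # how many times faster the change is
print(f"baseline: {baseline:.3f}s, changed: {changed:.3f}s")
print(f"difference: {difference:.3f}s, speedup: {speedup:.2f}x")
```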

Other reasons we may want to benchmark execution time include:

- User-Centric Code: Meeting performance requirements is vital for a great user experience. Benchmarking helps confirm efficiency and consistency, resulting in high-quality applications.

- Regression Detection: Benchmarking swiftly spots performance changes with new code, ensuring prompt attention to improvements or issues.

Not benchmarking execution time can have a high cost.

The Huge Costs

If You Ignore Execution Time!

Neglecting the benchmarking of code execution time can have several significant consequences, which can impact both the development process and the quality of the software being developed.

Some of the key consequences include:

- Performance Issues: Without proper benchmarking, we may inadvertently release software that performs poorly. This can lead to user dissatisfaction, slow response times, and a negative user experience.

- Resource Inefficiency: Unoptimized code can consume unnecessary system resources, such as excessive CPU usage or memory usage. This inefficiency can result in higher operational costs and resource contention with other applications.

- Scalability Problems: Neglecting benchmarking may lead to a lack of understanding about how the software scales with increased workloads. As a result, the software may not be able to handle growing user demands effectively.

- Poor User Experience: Sluggish and unresponsive software can frustrate users and drive them away. This can have negative implications for user retention and customer satisfaction.

- Long-Term Costs: Ignoring benchmarking can lead to long-term costs associated with fixing performance issues, scaling the system, and addressing user complaints. Early performance optimization is often more cost-effective.

To avoid these consequences, it’s crucial to take code benchmarking seriously as an integral part of the software development process.

How Can You Benchmark Code

Execution Time in Python?

There are two main approaches to benchmarking execution time in Python.

They are:

- The time module

- The timeit module

Both of these modules are provided in the standard library.

This means that there is nothing extra to install.

No third-party libraries!

Benchmark with the “time” Module

The time module in Python provides functions for working with clocks and time.

There are five main ways to retrieve the current time using the time module. They are:

- Use time.time() (everyone uses this, and that’s a big problem!)

- Use time.perf_counter()

- Use time.monotonic()

- Use time.process_time()

- Use time.thread_time()

Each of these functions returns a time in seconds.

Each function has different qualities and most use different underlying clocks.

Each can be used for benchmarking Python code.
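We can inspect these differences ourselves with time.get_clock_info() from the standard library. The sketch below prints whether each clock is adjustable, whether it is monotonic, its reported resolution, and the underlying implementation. The exact output depends on your operating system and Python version, so no expected output is shown.

```python
import time

# Names of the five clocks, as accepted by time.get_clock_info()
CLOCKS = ["time", "perf_counter", "monotonic", "process_time", "thread_time"]

infos = {name: time.get_clock_info(name) for name in CLOCKS}

for name, info in infos.items():
    # adjustable: can the clock be changed (e.g. by the user or a time server)?
    # monotonic: is the clock guaranteed never to go backwards?
    # resolution: the clock's reported resolution, in seconds
    print(f"{name:>13}: adjustable={info.adjustable!s:<5} "
          f"monotonic={info.monotonic!s:<5} "
          f"resolution={info.resolution:.1e} impl={info.implementation}")
```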

Most developers measure time the WRONG way!

The problem is that each function has a specific use case and, more importantly, not all of them should be used for benchmarking!

For example, did you know that the most widely used function for benchmarking is the time.time() function?

This is a bad idea because:

- The time.time() function returns the time from the system clock and the system clock can be changed at any time, such as by the user or automatically via synchronization with a time server.

- The system clock that is accessed by the time.time() function has a lower precision than some of the other clocks on the system, leading to lower resolution benchmarking results, especially for very short-duration code.

We should be using the time.perf_counter() function instead. It was introduced in Python version 3.3 specifically to overcome the limitations of the time.time() function.
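A minimal sketch of the basic pattern, assuming a hypothetical task() function as the code under test: record the counter before and after, then subtract. The reference point of time.perf_counter() is undefined, so only the difference between two calls is meaningful.

```python
import time

def task():
    # Hypothetical workload standing in for the code we want to benchmark
    return sum(i * i for i in range(100_000))

# Record the start time from the high-resolution performance counter
start = time.perf_counter()
task()
# Record the end time; only the difference between the two readings matters
end = time.perf_counter()
duration = end - start
print(f"Took {duration:.6f} seconds")
```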

Benchmark with the “timeit” Module

The timeit module was specifically developed for benchmarking.

Importantly the timeit module encodes a number of best practices for benchmarking, including:

- Timing code using time.perf_counter(), for high-precision.

- Executing target code many times by default (many samples), to reduce statistical noise.

- Disabling the Python garbage collector, to reduce the variance in the measurements.

- Providing a controlled and well-defined scope for benchmarked code, to reduce unwanted side-effects.

The module provides two interfaces for benchmarking:

- A Python API for benchmarking directly in our programs.

- Command-line interface for ad hoc benchmarking code snippets.
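For example, a small sketch of the Python API comparing two ways of squaring a list. The snippets themselves are illustrative. The setup string runs once per measurement and is excluded from the timing, and the result is the total time for all `number` executions. A roughly equivalent command-line call would be `python -m timeit -s "data = list(range(1000))" "[x * x for x in data]"`.

```python
import timeit

# setup runs once per measurement and is not included in the timing
SETUP = "data = list(range(1000))"

# number: how many times each statement is executed; the result is the total
comp_total = timeit.timeit("[x * x for x in data]", setup=SETUP, number=1_000)
map_total = timeit.timeit("list(map(lambda x: x * x, data))", setup=SETUP, number=1_000)

print(f"list comprehension: {comp_total:.4f} seconds for 1,000 runs")
print(f"map with lambda:    {map_total:.4f} seconds for 1,000 runs")
```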

Many developers get confused and give up on timeit!

The timeit module is intended to benchmark small snippets of code, such as single statements.

This is typically the first stumbling block encountered by developers new to using the module.

They try to benchmark a function or a whole program and get frustrated.

The reason is that this is not the intended use case for the timeit module.

The second stumbling block is the times reported by timeit.

The time reported by the timeit.timeit() function is the total for all executions of the snippet (one million by default), not the best or average time per execution.

This is great for a reliable comparison between snippets of Python code that achieve the same outcome, but not helpful when sharing benchmark results with stakeholders who want an answer to the simple question:

How long does it take to run?
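One way to get closer to that answer is to divide each total by the number of executions. The sketch below uses timeit.repeat() to collect several independent totals and reports both the best and the average time per call. The sorting snippet is only an illustration. The timeit documentation notes that the minimum is usually the most informative figure, while an average may be easier for stakeholders to relate to.

```python
import statistics
import timeit

NUMBER = 10_000  # executions of the snippet per measurement
REPEAT = 5       # number of independent measurements

# repeat() returns a list of REPEAT totals, each covering NUMBER executions
totals = timeit.repeat(
    stmt="sorted(data)",
    setup="import random; data = [random.random() for _ in range(100)]",
    repeat=REPEAT,
    number=NUMBER,
)

# Divide each total by NUMBER to get the time for a single execution
per_call = [total / NUMBER for total in totals]

best = min(per_call)                 # least disturbed by background activity
average = statistics.mean(per_call)
print(f"best:    {best * 1e6:.2f} microseconds per call")
print(f"average: {average * 1e6:.2f} microseconds per call")
```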

The timeit module is a helpful tool, but not the solution to all of our benchmarking needs.

You Will Need To Go A Step Further

With Advanced Benchmarking

Once we know how to measure code execution time accurately, there are other concerns.

Reusable Benchmarking Helpers

We can’t afford to have bugs in the measurement and calculation of benchmark times.

We also cannot afford to clutter our programs with benchmarking code.

Instead, we can develop reusable helper benchmarking functions and classes.

This includes things like:

- Helper functions

- Stopwatch classes

- Benchmarking context manager

- Function decorators

These helpers can be written once using correct code and best practices, then reused on all of our projects going forward.
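As an illustration, here is a minimal sketch of two such helpers: a context manager and a function decorator, both built on time.perf_counter(). The names `benchmark` and `benchmark_decorator` are hypothetical, not from any library. Both use try/finally so a duration is still reported if the timed code raises an exception.

```python
import time
from contextlib import contextmanager
from functools import wraps

@contextmanager
def benchmark(name):
    # Hypothetical helper: time the body of a with-block
    start = time.perf_counter()
    try:
        yield
    finally:
        duration = time.perf_counter() - start
        print(f"{name} took {duration:.3f} seconds")

def benchmark_decorator(func):
    # Hypothetical helper: time every call to the decorated function
    @wraps(func)  # keep the original function's name and docstring
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            duration = time.perf_counter() - start
            print(f"{func.__name__}() took {duration:.3f} seconds")
    return wrapper

@benchmark_decorator
def build_squares(n):
    return [i * i for i in range(n)]

with benchmark("sum of squares"):
    total = sum(i * i for i in range(1_000_000))

squares = build_squares(1_000_000)
```

The benchmarking code stays in one place, and the code being measured is left uncluttered.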

Benchmarking Best Practices

Benchmarking results are easy to measure but hard to measure accurately.

As we have seen, we can easily use the wrong timing function to gather the results.

But it’s worse than that.

Each time we perform a benchmark, we will get a different result.

This is due to the statistical variability in measuring the execution time on a computer system that is running hundreds or even thousands of small programs in the background.

We have to use simple statistical tools to overcome the natural randomness in benchmark results, such as repeating benchmarks many times and reporting an average benchmark score.
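A minimal sketch of repetition with summary statistics, assuming a hypothetical task() workload. Each run is timed separately with time.perf_counter(), and the statistics module reports the mean and the standard deviation, which shows how much the runs varied.

```python
import statistics
import time

def task():
    # Hypothetical workload to benchmark
    return sorted(range(200_000, 0, -1))

def repeat_benchmark(func, repeats=10):
    # Run the benchmark several times and keep every duration
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        durations.append(time.perf_counter() - start)
    return durations

durations = repeat_benchmark(task, repeats=10)
mean = statistics.mean(durations)
stdev = statistics.stdev(durations)  # requires at least two samples
print(f"mean: {mean:.4f} s, stdev: {stdev:.4f} s over {len(durations)} runs")
```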

Once we have a reliable measure of the execution time, we must carefully choose how to present it to stakeholders for decision-making.

This might include the careful selection of the units of measure to be consistent and meaningful, e.g. seconds, milliseconds, microseconds, nanoseconds, etc.

It may also include the careful selection of the numerical precision of results to exclude unnecessary details that may confuse decision-making.
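For example, a small hypothetical helper, `format_duration()`, that picks the largest unit in which the value is at least 1 and rounds to a fixed number of decimal places. The unit thresholds and the default precision are assumptions chosen for illustration.

```python
def format_duration(seconds, precision=3):
    # Hypothetical helper: choose a readable unit for a duration in seconds
    units = [(1.0, "s"), (1e-3, "ms"), (1e-6, "us"), (1e-9, "ns")]
    for scale, label in units:
        if seconds >= scale:
            return f"{seconds / scale:.{precision}f} {label}"
    # Anything smaller than a nanosecond is still shown in nanoseconds
    return f"{seconds / 1e-9:.{precision}f} ns"

print(format_duration(2.5))     # 2.500 s
print(format_duration(0.0042))  # 4.200 ms
```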

Benchmarking Asyncio Programs

Asyncio programs are different from regular Python programs.

They are composed of a tangle of coroutines and function calls that may suspend at any await and wait for one or more tasks to complete.

We can manually benchmark asyncio programs using function calls from the time module or the timeit module.

We may also use an additional clock for benchmarking, provided by the asyncio event loop itself, called “loop time”.
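A minimal sketch using loop time, assuming a hypothetical work() coroutine that simply sleeps. The event loop is retrieved from inside a running coroutine with asyncio.get_running_loop(), and loop.time() is read before and after the awaited work.

```python
import asyncio

async def work():
    # Hypothetical coroutine standing in for real asynchronous work
    await asyncio.sleep(0.1)

async def main():
    loop = asyncio.get_running_loop()
    # loop.time() reads the event loop's internal monotonic clock
    start = loop.time()
    await work()
    duration = loop.time() - start
    print(f"work() took {duration:.3f} seconds")
    return duration

duration = asyncio.run(main())
```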

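Additionally, helper functions or classes we may have developed for benchmarking regular Python code may not work as expected for asyncio programs.

For example, a regular function decorator applied to a coroutine function only times the creation of the coroutine object, not its execution, and using await inside a regular (non-async) function results in a syntax error.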

Instead, asyncio-specific helper tools must be developed to benchmark asyncio programs, such as:

- Helper benchmark coroutines

- Asynchronous context managers

- Coroutine decorators
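Here is a minimal sketch of two such tools, an asynchronous context manager and a coroutine decorator. The names `async_benchmark`, `async_benchmark_decorator`, and `fetch` are hypothetical. The key detail is that the decorator's wrapper is itself a coroutine that awaits the wrapped coroutine, so it times execution rather than just coroutine creation. It uses time.perf_counter(), although the event loop's loop.time() would also work.

```python
import asyncio
import time
from contextlib import asynccontextmanager
from functools import wraps

@asynccontextmanager
async def async_benchmark(name):
    # Hypothetical helper: time the body of an "async with" block
    start = time.perf_counter()
    try:
        yield
    finally:
        duration = time.perf_counter() - start
        print(f"{name} took {duration:.3f} seconds")

def async_benchmark_decorator(coro_func):
    # Hypothetical helper: time each awaited call of a coroutine function
    @wraps(coro_func)
    async def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            # Awaiting here times execution, not just coroutine creation
            return await coro_func(*args, **kwargs)
        finally:
            duration = time.perf_counter() - start
            print(f"{coro_func.__name__}() took {duration:.3f} seconds")
    return wrapper

@async_benchmark_decorator
async def fetch(delay):
    # Hypothetical coroutine simulating I/O-bound work
    await asyncio.sleep(delay)
    return delay

async def main():
    async with async_benchmark("three concurrent fetches"):
        results = await asyncio.gather(fetch(0.1), fetch(0.2), fetch(0.3))
    return results

results = asyncio.run(main())
```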

Asyncio is the programming paradigm behind many modern Python web frameworks, and we must be able to benchmark our asyncio programs effectively.

Take Code Benchmarking Seriously!

Effective benchmarking does not “just happen”; it requires knowledge.

- You must know which functions in the time module to use for benchmarking, and which to avoid (and why).

- You must know how to develop helper functions, stopwatch classes, benchmark context managers, and benchmark function decorators to benchmark cleanly and consistently.

- You must know how to benchmark differently in asyncio programs with coroutines and asynchronous execution.

- You must know the best practices such as how to repeat benchmarks and report summary statistics (and why).

- You must know when to use the timeit module for benchmarking and how to use its Python API and command-line interface.

It’s easy to gather benchmark results that are wrong or misleading.

Any decisions you make with these results will also be suspect.

There is a lot to know about how to benchmark Python code correctly.

Don’t worry, I’ve done the work for you.

I wrote this book to show you how.

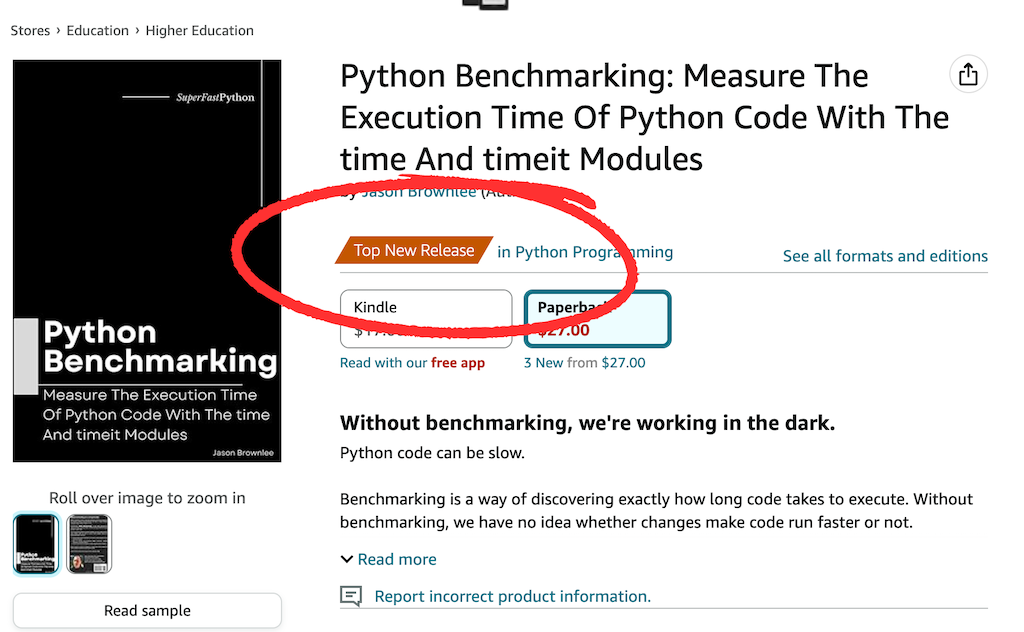

Introducing:

“Python Benchmarking”

Measure The Execution Time Of Python Code With The time And timeit Modules

“Python Benchmarking” is my new book that will teach you how to accurately measure the execution time of your programs, from scratch.

This book distills only what you need to know to get started and be effective with Python Benchmarking, super fast.

It’s exactly how I would teach you benchmarking if we were sitting together, pair programming.

Technical Details:

- 25 tutorials taught by example with full working code listings.

- 56 .py code files included that you can use as templates and extend.

- 259 pages for on-screen reading open next to your IDE or editor.

- 2 formats (PDF and EPUB) for screen, tablet, and Kindle reading.

- 1.2 megabyte .zip download that contains the ebook and code.

Everything you need to get started, then get really good at Python Benchmarking, all in one book.

“Python Benchmarking” will lead you on the path from a Python developer frustrated with slow code to a developer who can confidently measure execution time and know that code is faster.

- No fluff.

- Just concepts and code.

- With full working examples.

The book is divided into 25 practical tutorials.

The idea is you can read and work through one or two tutorials per day, and become capable in about one month.

It is the shortest and most effective path I know of for transforming you into a confident benchmarking Python developer.

Choose Your Package:

BOOK

You get the book:

- Python Benchmarking

PDF and EPUB formats

Includes all source code files

BOOKSHELF

BEST VALUE

You get everything (15+ books):

- Threading Jump-Start

- Multiprocessing Jump-Start

- Asyncio Jump-Start

- ThreadPool Jump-Start

- Pool Jump-Start

- ThreadPoolExecutor Jump-Start

- ProcessPoolExecutor Jump-Start

- Threading Interview Questions

- Multiprocessing Interview Questions

- Asyncio Interview Questions

- Executors Interview Questions

- Concurrent File I/O

- Concurrent NumPy

- Python Benchmarking

- Python Asyncio Mastery

Bonus, you also get:

- Concurrent For Loops Guide

- API Mind Maps (4)

- API Cheat Sheets (7)

That is $210 of Value!

(you get a 10.95% discount)

All prices are in USD.

(You can also get the book from the Amazon, Gumroad, and Google Play stores.)

You Get 25 Laser-Focused Tutorials

This book is designed to bring you up-to-speed with benchmarking as fast as possible.

As such, it is not exhaustive. There are many topics that are interesting or helpful but are not on the critical path to getting you productive fast.

This book is divided into 7 parts. They are:

- Part I: Background. Tutorials on getting up to speed with code benchmarking.

- Part II: Benchmarking With time. Tutorials on how to benchmark using functions in the time module.

- Part III: Benchmarking Best Practices. Tutorials on the best practices and tips to consider when benchmarking code.

- Part IV: Benchmarking Helpers. Tutorials on how to develop helper functions and objects to make benchmarking simpler.

- Part V: Benchmarking Asyncio. Tutorials on how to benchmark coroutines and asyncio programs.

- Part VI: Benchmarking With timeit. Tutorials on how to benchmark using the timeit module.

- Part VII: Other Benchmarking. Tutorials on related techniques such as profiling and command line tools.

The book is further divided into 25 tutorials across the seven parts. They are:

Part I: Background:

- Tutorial 01: Introduction

- Tutorial 02: Benchmarking Python

Part II: Benchmarking With time:

- Tutorial 03: Benchmarking With time.time()

- Tutorial 04: Benchmarking With time.monotonic()

- Tutorial 05: Benchmarking With time.perf_counter()

- Tutorial 06: Benchmarking With time.thread_time()

- Tutorial 07: Benchmarking With time.process_time()

- Tutorial 08: Comparing time Module Functions

Part III: Benchmarking Best Practices:

- Tutorial 09: Benchmark Metrics

- Tutorial 10: Benchmark Repetition

- Tutorial 11: Benchmark Reporting

Part IV: Benchmarking Helpers:

- Tutorial 12: Benchmark Helper Function

- Tutorial 13: Benchmark Stopwatch Class

- Tutorial 14: Benchmark Context Manager

- Tutorial 15: Benchmark Function Decorator

Part V: Benchmarking Asyncio:

- Tutorial 16: Gentle Introduction to Asyncio

- Tutorial 17: Benchmarking Asyncio With loop.time()

- Tutorial 18: Benchmark Helper Coroutine

- Tutorial 19: Benchmark Asynchronous Context Manager

- Tutorial 20: Benchmark Coroutine Decorator

Part VI: Benchmarking With timeit:

- Tutorial 21: Benchmarking With The timeit Module

- Tutorial 22: Benchmarking With timeit.timeit()

- Tutorial 23: Benchmarking With The timeit Command Line

Part VII: Other Benchmarking:

- Tutorial 24: Profile Python Code

- Tutorial 25: Benchmarking With The time Command

Next, let’s take a closer look at how the tutorials are structured.

Highly structured lessons on how you can get results

This book teaches Python benchmarking by example.

Each lesson has a specific learning outcome and is designed to be completed in less than one hour.

Each lesson is also designed to be self-contained so that you can read the lessons out of order if you choose, such as dipping into topics in the future to solve specific programming problems.

The lessons were written with some intentional repetition of key APIs and concepts. These gentle reminders are designed to help embed the common usage patterns in your mind so that they become second nature.

We Python developers learn best from real and working code examples.

Next, let’s learn more about the code examples provided in this book.

Your Learning Outcomes

No More Working In The Dark!

This book will transform you into a Python developer who can benchmark Python code on your projects.

1. You will confidently use functions from the time module for benchmarking, including:

- How to benchmark statements, functions, and programs using the 5 functions for measuring time in the time module.

- How to know when and why to use functions like time.perf_counter() and time.monotonic() over time.time().

- How to know when and why to use functions like time.thread_time() and time.process_time().

2. You will confidently know benchmarking best practices and when to use them, including:

- How to calculate and report benchmark results in terms of difference and speedup.

- How and why to repeat benchmark tests and report summary statistics like the average.

- How to consider the precision and units of measure of reported benchmark results.

3. You will confidently develop convenient benchmarking tools, including:

- How to develop a custom benchmarking helper function and stopwatch class.

- How to develop a custom benchmarking context manager.

- How to develop a custom benchmarking function decorator.

4. You will confidently know how to benchmark asyncio programs and develop convenient async-specific benchmarking tools, including:

- How to benchmark asyncio programs using the event loop timer.

- How to develop a custom benchmarking coroutine.

- How to develop a custom benchmarking asynchronous context manager and coroutine decorator.

5. You will confidently know how to benchmark snippets using the timeit module, including:

- How to benchmark statements and functions using the timeit Python API.

- How to benchmark snippets of code using the timeit command line interface.

6. You will confidently know how to use other benchmarking tools, including:

- How to profile Python programs with the built-in profiler and know the relationship between benchmarking and profiling.

- How to benchmark the execution time of Python programs unobtrusively using the time Unix command.

You will learn from code examples, not pages and pages of fluff.

Get your copy now:

100% Money-Back Guarantee

(no questions asked)

I want you to actually learn Python Benchmarking so well that you can confidently use it on current and future projects.

I designed my book to read just like I’m sitting beside you, showing you how.

I want you to be happy. I want you to win!

I stand behind all of my materials. I know they get results and I’m proud of them.

Nevertheless, if you decide that my books are not a good fit for you, I’ll understand.

I offer a 100% money-back guarantee, no questions asked.

To get a refund, contact me with your purchase name and email address.

Frequently Asked Questions

This section covers some frequently asked questions.

If you have any questions, contact me directly, any time, about anything. I will do my best to help.

What are your prerequisites?

This book is designed for Python developers who want to discover how to benchmark Python code.

Specifically, this book is for:

- Developers that can write simple Python programs.

- Developers that need better performance from current or future Python programs.

This book does not require that you are an expert in the Python programming language, profiling, or benchmarking.

Specifically:

- You do not need to be an expert Python developer.

- You do not need to be an expert in code benchmarking.

- You do not need to be an expert at performance optimization.

What version of Python do you need?

All code examples use Python 3.

Python 3.11+ to be exact.

Python 2.7 is not supported because it reached its “end of life” in 2020.

Are there code examples?

Yes.

There are 56 .py code files.

Most lessons have many complete, standalone, and fully working code examples.

The book is provided in a .zip file that includes a src/ directory containing all source code files used in the book.

How long will the book take you to finish?

Work at your own pace.

I recommend about one tutorial per day, over 25 days (1 month).

There’s no rush and I recommend that you take your time.

This book is designed to be read linearly from start to finish, guiding you from being a Python developer at the start of the book to being a Python developer that can confidently benchmark Python code by the end of the book.

In order to avoid overload, I recommend completing one or two tutorials per day, such as in the evening or during your lunch break. This will allow you to complete the transformation in about one month.

I recommend maintaining a directory with all of the code you type from the tutorials in the book. This will allow you to use the directory as your own private code library, allowing you to copy-paste code into your projects in the future.

I recommend trying to adapt and extend the examples in the tutorials. Play with them. Break them. This will help you learn more about how the API works and why we follow specific usage patterns.

What format is the book?

You can read the book on your screen, next to your editor.

You can also read the book on your tablet, away from your workstation.

The ebook is provided in 2 formats:

- PDF (.pdf): perfect for reading on the screen or tablet.

- EPUB (.epub): perfect for reading on a tablet with a Kindle or iBooks app.

Many developers like to read the ebook on a tablet or iPad.

How can you get more help?

The lessons in this book were designed to be easy to read and follow.

Nevertheless, sometimes we need a little extra help.

A list of further reading resources is provided at the end of each lesson. These can be helpful if you are interested in learning more about the topic covered, such as fine-grained details of the standard library and API functions used.

The conclusions at the end of the book provide a complete list of websites and books that can help if you want to learn more about Python benchmarking and performance optimization, as well as the relevant parts of the Python standard library. It also lists places where you can go online and ask questions about Python benchmarking.

Finally, if you ever have questions about the lessons or code in this book, you can contact me any time and I will do my best to help. My contact details are provided at the end of the book.

How many pages is the book?

The PDF is 259 pages (US letter-sized pages).

Can you print the book?

Yes.

That said, I think it’s better to work through it on the screen.

- You can search, skip, and jump around really fast.

- You can copy and paste code examples.

- You can compare code output directly.

Is there digital rights management?

No.

The ebooks have no DRM.

Do you get FREE updates?

Yes.

I update all of my books often.

You can email me any time and I will send you the latest version for free.

Can you buy the book elsewhere?

Yes!

You can get a Kindle or paperback version from Amazon.

Many developers prefer to buy from the Kindle store on Amazon directly.

Can you get a paperback version?

Yes!

You can get a paperback version from Amazon.

In fact, it was an Amazon best-seller when it was released.

Can you read a sample?

Yes.

You can read a book sample via the Google Books “preview” feature or via the Amazon “look inside” feature:

Generally, if you like my writing style on SuperFastPython, then you will like the books.

Can you download the source code now?

The source code (.py) files are included in the .zip with the book.

Nevertheless, you can also download all of the code from the dedicated GitHub Project:

Does the code work on your operating system?

Yes.

The time and timeit modules used for benchmarking are part of the Python standard library and are available on:

- Windows

- macOS

- Linux

Does the code work on your hardware?

Yes.

Python benchmarking is agnostic to the underlying CPU hardware.

If you are running Python on a modern computer, then you will have support for benchmarking, e.g. Intel, AMD, ARM, and Apple Silicon CPUs are supported.

About the Author

Hi, I’m Jason Brownlee, Ph.D.

I’m a Python developer, husband, and father to two boys.

I want to share something with you.

I am obsessed with Python concurrency, but I wasn’t always this way.

My background is in Artificial Intelligence and I have a few fancy degrees and past job titles to prove it.

You can see my LinkedIn profile here:

- Jason Brownlee LinkedIn Profile

(follow me if you like)

So what?

Well, AI and machine learning have been hot for the last decade. I have spent that time as a Python machine learning developer:

- Working on a range of predictive modeling projects.

- Writing more than 1,000 tutorials.

- Authoring more than 20 books.

There’s one thing about machine learning in Python: your code must be fast.

Really fast.

Modeling code is already generally fast, built on top of C and Fortran code libraries.

But you know how it is on real projects…

You always have to glue bits together, wrap the fast code and run it many times, and so on.

Making code run fast requires Python concurrency and I have spent most of the last decade using all the different types of Python concurrency available.

This includes threading, multiprocessing, asyncio, and the suite of popular libraries.

I know my way around Python concurrency and I am deeply frustrated at the bad rap it has.

This is why I started SuperFastPython.com where you can find hundreds of free tutorials on Python concurrency.

And this is why I wrote this book.

Praise for Super Fast Python

Python developers write to me all the time and let me know how helpful my tutorials and books have been.

Below are some select examples posted to LinkedIn.

What Are You Waiting For?

Stop reading outdated Stack Overflow answers.

Learn Python concurrency correctly, step-by-step.

Start today.

Buy now and get your copy in seconds!