Unlock Faster NumPy

…and use all CPU cores

No fancy third-party libraries.

Just faster NumPy on one machine. Get results like:

- Matrix multiplication that is 2.7x faster

- Array initialization that is up to 3.2x faster

- Array sharing that is up to 516.91x faster

Don’t suffer slow NumPy. Develop faster programs with modern concurrency.

Heard enough? Jump to the book.

Otherwise read on…

Paul Vasquez

Your book “Concurrent NumPy in Python” has been an invaluable tool for me

NumPy is Everywhere

NumPy is the most common third-party Python library for working with arrays of numbers.

NumPy matters because it greatly expands what we can do with numerical and scientific data.

- NumPy is efficient (its most important quality)

- NumPy is easy to use

- NumPy is the center of an ecosystem

NumPy is used directly to load and work with numerical datasets, and it is the standard way to move data in and out of other third-party libraries.

Much of what we do in modern Python programs relies on NumPy, for example:

- Machine learning libraries like scikit-learn

- Deep learning libraries like TensorFlow and PyTorch

- Data analysis with libraries like Pandas and Matplotlib

- Scientific computing with libraries like SciPy

- And so many more...

Given the wide use of NumPy, it is essential we know how to get the most out of our system when using it.

Faster NumPy With Concurrency

NumPy programs can be made much faster by adding concurrency.

Thankfully, concurrency was not an afterthought in NumPy, allowing us to take full advantage of the capabilities of modern multicore hardware.

There is a lot of confusion when it comes to speeding up NumPy using concurrency, for example:

- Did you know that many NumPy functions are already multithreaded using BLAS threads?

- Did you know that most NumPy functions release the GIL so we can use Python threads directly?

- Did you know that sometimes we can achieve better results with thread pools than with BLAS threads?

- Did you know that we can use shared memory in the Python stdlib to share arrays between processes?

Knowing how to use NumPy concurrency can offer dramatic speed-ups in these 4 main areas.

Let's take a closer look at each in turn.

1. Faster NumPy With BLAS Threads

BLAS (Basic Linear Algebra Subprograms) and LAPACK (Linear Algebra Package) are general specifications for linear algebra math functions.

Many free or open-source libraries implement these specs, such as OpenBLAS, MKL, ATLAS, and more.

We don’t need to know much about these libraries other than: they are really fast!

- They are implemented in C and Fortran under the covers.

- They use platform-specific tricks and CPU instructions to make code faster.

- They implement functions using multithreaded algorithms.

One of these BLAS libraries is installed automatically when we install NumPy, and NumPy uses it to dramatically speed up some array operations.

Knowing about BLAS and how to configure the number of BLAS threads that NumPy uses in our programs can offer a big speed-up, such as:

- Matrix multiplication that is 2.7x faster

- Matrix math functions that are up to 3.1x faster

- Matrix solvers that are up to 2.2x faster

- Matrix decompositions that are up to 2.2x faster

To get these speed-ups, you need to know:

- How to determine which NumPy functions call down to the installed BLAS library.

- How to determine which BLAS functions are multithreaded (you now know some).

- How to determine which BLAS library you have installed.

- How to configure the number of threads in your installed BLAS library.
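Here is a minimal sketch of checking and configuring BLAS threads, assuming NumPy was installed from a standard build that links a BLAS library such as OpenBLAS or MKL. The thread-count environment variables must be set before NumPy is first imported, and which one is honored depends on the installed library (OPENBLAS_NUM_THREADS for OpenBLAS, MKL_NUM_THREADS for MKL, OMP_NUM_THREADS for OpenMP-based builds), so the sketch sets all three. The value of 4 threads and the 2000x2000 matrix size are arbitrary examples, not recommendations.

```python
import os

# Set BLAS thread counts BEFORE the first "import numpy"; they are typically read at load time.
# Setting all three covers OpenBLAS, MKL, and OpenMP-based builds (assumption: one of them applies).
# The value "4" is an arbitrary example; choose it to suit your CPU.
for var in ("OPENBLAS_NUM_THREADS", "MKL_NUM_THREADS", "OMP_NUM_THREADS"):
    os.environ[var] = "4"

import time

import numpy as np

# Report how NumPy was built, including which BLAS/LAPACK library it links against
np.show_config()

# Matrix multiplication of 2-D float64 arrays is dispatched to the BLAS library
rng = np.random.default_rng(1)
a = rng.random((2000, 2000))
b = rng.random((2000, 2000))

start = time.perf_counter()
c = a @ b
elapsed = time.perf_counter() - start
print(f"2000x2000 matmul took {elapsed:.3f} seconds")
```

np.show_config() lets us see which BLAS library is installed. To compare thread counts, we would rerun the script with different values, because these variables are typically read when the library loads rather than at call time.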

2. Faster NumPy With Thread Pools

The Python interpreter is implemented with a Global Interpreter Lock (GIL).

The GIL keeps the interpreter thread-safe, but it also means only one Python thread can run at a time, because a thread must hold the lock to execute Python code.

Importantly, NumPy releases the GIL.

Most function calls we make using the NumPy API release the GIL while the underlying C code runs. This means multiple Python threads can execute NumPy operations at the same time and achieve full parallelism.

This allows us to multithread tasks with NumPy arrays and achieve dramatic speed-ups, such as:

- Array initialization that is up to 3.2x faster

- Element-wise array arithmetic that is up to 1.3x faster

- Element-wise array math functions that are up to 2.0x faster

- Random number array creation that is up to 4.71x faster

To get these speed-ups, you need to know:

- That Python threads achieve full parallelism when the GIL is released (you now know this).

- That NumPy functions release the GIL (you now know this; few developers do).

- Which NumPy array tasks can be multithreaded with Python threads.

- How to use a Python thread pool like the ThreadPoolExecutor to multithread NumPy array tasks.
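As a minimal sketch of this pattern, the code below fills one large array with random numbers using a ThreadPoolExecutor. It follows the approach described in NumPy's random-generation documentation: SeedSequence.spawn() derives one independent generator per thread, and each thread writes into its own slice of a shared array via the out= argument of Generator.random(). It assumes, as described above, that the generator releases the GIL while it works; parallel_random() is a hypothetical helper, and the worker count and array size are illustrative choices.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def fill_random(rng, out):
    # Generator.random writes straight into the given slice instead of allocating
    rng.random(out=out)


def parallel_random(n, n_workers=4, seed=42):
    # Hypothetical helper: fill an n-element float64 array using n_workers threads
    values = np.empty(n, dtype=np.float64)
    # Independent, reproducible streams: one child SeedSequence per worker
    rngs = [np.random.default_rng(s) for s in np.random.SeedSequence(seed).spawn(n_workers)]
    # Contiguous chunk boundaries, e.g. [0, 2500, 5000, 7500, 10000] for n=10000, 4 workers
    bounds = np.linspace(0, n, n_workers + 1, dtype=int)
    with ThreadPoolExecutor(max_workers=n_workers) as executor:
        futures = [
            executor.submit(fill_random, rng, values[lo:hi])
            for rng, lo, hi in zip(rngs, bounds[:-1], bounds[1:])
        ]
        for future in futures:
            future.result()  # re-raise any exception from a worker thread
    return values


data = parallel_random(10_000_000)
print(data[:3])
```

Because each worker owns a contiguous, non-overlapping slice, no locking is needed. The output is reproducible for a given seed and worker count, but it changes if the number of workers changes.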

3. Faster NumPy By Mixing Worker Pools and BLAS

As we have seen, many algorithms in NumPy use a multithreaded implementation via an installed BLAS library like OpenBLAS.

This is wonderful.

Nevertheless, sometimes we can get better performance by carefully choosing when to use BLAS threads and when to use another type of concurrency, such as a thread pool or a process pool.

In fact, sometimes we may even get better performance using a mixture of thread or process pools and BLAS threads.

This applies to programs that perform tasks on many NumPy arrays (task-level concurrency) and operations on each array (operation-level concurrency).

- Task-Level Concurrency: A program that works with many NumPy arrays that can be parallelized with thread pools and process pools.

- Operation-Level Concurrency: A program where multithreaded math functions are executed on NumPy arrays using functions implemented in BLAS with BLAS threads.

Tuning the balance of task-level and operation-level concurrency can give better performance, for example:

- Multiple array handling that is up to 3.5x faster

- Multiple independent array handling that is up to 3.61x faster

To get these speed-ups, you need to know:

- The difference between task-level and operation-level concurrency (you now know this).

- How to develop a benchmark suite to compare performance with just thread or process pools, with just BLAS threads, and with combinations of both.

- When to choose thread pools over process pools and the cost of making the wrong decision.
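The sketch below shows one end of such a benchmark suite: BLAS limited to a single thread (no operation-level concurrency) and a ThreadPoolExecutor running many independent matrix multiplications at once (task-level concurrency). It relies on NumPy releasing the GIL while the BLAS routine runs and assumes an OpenBLAS, MKL, or OpenMP-based build; the workload of 16 pairs of 500x500 matrices is hypothetical.

```python
import os

# Turn off operation-level concurrency: one BLAS thread per call.
# Must run before NumPy is imported (assumption: OpenBLAS, MKL, or OpenMP-based build).
for var in ("OPENBLAS_NUM_THREADS", "MKL_NUM_THREADS", "OMP_NUM_THREADS"):
    os.environ[var] = "1"

import time
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def multiply(pair):
    # One independent task; NumPy releases the GIL while BLAS does the work
    a, b = pair
    return a @ b


def run_tasks(pairs, n_workers):
    # Task-level concurrency: independent array tasks run in a thread pool
    with ThreadPoolExecutor(max_workers=n_workers) as executor:
        return list(executor.map(multiply, pairs))


rng = np.random.default_rng(0)
# Hypothetical workload: 16 independent pairs of 500x500 matrices
pairs = [(rng.random((500, 500)), rng.random((500, 500))) for _ in range(16)]

start = time.perf_counter()
results = run_tasks(pairs, n_workers=os.cpu_count() or 1)
print(f"{len(results)} tasks took {time.perf_counter() - start:.3f} seconds")
```

A fair comparison would also time the opposite configuration (one worker with BLAS threads equal to the core count) and mixtures in between, each in a fresh process, since the BLAS thread count is set before NumPy is imported.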

4. Faster NumPy By Efficient Array Sharing

Sharing data between Python processes is typically very slow.

It is slow because data needs to be shared using inter-process communication methods, where data is pickled by the sender, transmitted, and unpickled by the receiver.

This means that if we execute tasks with NumPy arrays in multiple Python processes, e.g. with a process pool, and need to share arrays between them, we may lose all the benefits of concurrency to the cost of inter-process communication.

Luckily, there are many different ways to share NumPy arrays between Python processes. At least 9 methods by my count.

Some methods that involve making a copy of an array as part of sharing it can be very fast, for example:

- Array sharing between processes via inheritance that is up to 516.91x faster

Other times we need multiple Python processes to operate on the same shared array.

There are modern ways to share a NumPy array that are also very fast, for example:

- Array sharing via a memory-mapped file that is up to 4.92x faster
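As a minimal sketch of the memory-mapped file approach, the code below uses np.memmap to back an array with a file, so that a child process can map the same file and modify the array in place. Only the file path crosses the process boundary. The array size, file name, and use of a temporary directory are illustrative assumptions; the __main__ guard is needed on platforms that start child processes with spawn, such as Windows and macOS.

```python
import os
import tempfile
from multiprocessing import Process

import numpy as np

SHAPE = (1_000_000,)  # hypothetical array size for illustration
DTYPE = np.float64


def worker(path):
    # Child process: map the SAME file and update the array in place
    data = np.memmap(path, dtype=DTYPE, mode="r+", shape=SHAPE)
    data *= 2.0
    data.flush()  # push changes to the file
    del data      # release the mapping


def main():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "array.dat")
        # Parent: create the file-backed array and initialize it
        data = np.memmap(path, dtype=DTYPE, mode="w+", shape=SHAPE)
        data[:] = 1.0
        data.flush()
        # Only the file path is sent to the child, not the array contents
        child = Process(target=worker, args=(path,))
        child.start()
        child.join()
        total = float(data.sum())  # the parent sees the child's writes
        del data  # release the mapping before the directory is removed
    return total


if __name__ == "__main__":
    print(main())  # 2000000.0 when every element was doubled from 1.0
```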

Some developers are shocked that such options exist.

To get these speed-ups, you need to know:

- All of the methods available in the stdlib for sharing a NumPy array between processes (you now know there are at least 9 methods)

- Which methods will make a copy of the array and which methods allow multiple processes to work on the same array

- How to use each method to actually share a NumPy array.

- The performance of each method compared to the other methods.
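As one example from the standard library, here is a minimal sketch using multiprocessing.shared_memory.SharedMemory (Python 3.8+): the parent allocates a named block of shared memory, wraps it as a NumPy array without copying, and passes only the block's name to a child process, which attaches and updates the array in place. This is not necessarily the fastest of the methods; the array size and function names are hypothetical.

```python
from multiprocessing import Process
from multiprocessing.shared_memory import SharedMemory

import numpy as np

SHAPE = (1_000_000,)  # hypothetical array size for illustration
DTYPE = np.float64


def worker(name):
    # Child: attach to the existing block by name and wrap it as an array (no copy)
    shm = SharedMemory(name=name)
    data = np.ndarray(SHAPE, dtype=DTYPE, buffer=shm.buf)
    data *= 2.0
    del data     # drop the view first, otherwise close() raises BufferError
    shm.close()  # detach; the creating process is responsible for unlink()


def main():
    nbytes = int(np.prod(SHAPE)) * np.dtype(DTYPE).itemsize
    shm = SharedMemory(create=True, size=nbytes)
    data = np.ndarray(SHAPE, dtype=DTYPE, buffer=shm.buf)
    try:
        data[:] = 1.0
        # Only the block's name is sent to the child, not the array contents
        child = Process(target=worker, args=(shm.name,))
        child.start()
        child.join()
        total = float(data.sum())  # the parent sees the child's in-place update
    finally:
        del data      # release the view before closing the block
        shm.close()
        shm.unlink()  # free the block once no process needs it
    return total


if __name__ == "__main__":
    print(main())  # 2000000.0 when every element was doubled from 1.0
```

The process that created the block calls unlink() once all processes are done; every process that attached calls close(), after dropping any NumPy views of the buffer.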

Next, let's consider the penalty of naively bringing concurrency to our NumPy programs.

DANGER of Using the Wrong Type of Concurrency with NumPy

We can add concurrency to a NumPy program to improve its performance.

Nevertheless, there is a danger in naively adding concurrency to a NumPy program.

There are many reasons for this: some NumPy math functions are already multithreaded, we may not know how to configure multithreaded NumPy functions, we may use the wrong type of concurrency, and we may miss opportunities to improve the performance of NumPy math functions.

We cannot afford to have CPU cores sit idle.

But worse still, we cannot afford to make our NumPy-based Python programs slower by using the wrong type of concurrency.

For example:

Just Use a Thread Pool…

We might naively use a thread pool to apply a math function to multiple arrays in parallel.

Seems sensible, except:

- We can cripple math functions that are already multithreaded if we use them in a thread pool, causing the operating system to thrash and spend too long context switching between threads.

Just Assume The BLAS Function is Good Enough…

We might know that a given NumPy function uses a multithreaded algorithm via the BLAS library.

Nothing else is needed. Free parallelism, except:

- We may miss opportunities to tune the number of threads used by the function and get better performance by having one thread per logical CPU or one thread per physical CPU core in our system.

Just Use Multiprocessing…

We may know that Python multiprocessing is the best approach for CPU-bound tasks, a rule that holds generally.

Using this rule, we may naively assume that it holds when using NumPy and perform all of our NumPy array operations in a process pool.

Seems very reasonable, except:

- We may have chosen the wrong type of concurrency for the use case, one that could be tens or hundreds of times slower than using threads instead.

Just Skip Over a Non-BLAS NumPy Function…

We may know that a given math function is not implemented using a multithreaded BLAS function.

Oh well, the function is executed normally, ignoring concurrency.

Fair enough, except:

- We may miss opportunities to perform the same operation concurrently on separate parts of the same array in a thread pool, which can offer a big performance boost.
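A minimal sketch of this idea: split one array into contiguous chunks and apply a NumPy ufunc to each chunk in a thread pool, using the ufunc's out= argument so every thread writes directly into its own slice of the result. It assumes the ufunc releases the GIL for the array's dtype (float64 here), and the worker count of 4 is an arbitrary example; speed-ups, if any, depend on the function, the array size, and the hardware.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def apply_chunk(func, src, dst, lo, hi):
    # Ufuncs accept out=, so each thread writes only its own slice of the result
    func(src[lo:hi], out=dst[lo:hi])


def parallel_ufunc(func, src, n_workers=4):
    # Hypothetical helper (not a NumPy API): split along the first axis into n_workers chunks
    dst = np.empty_like(src)
    bounds = np.linspace(0, len(src), n_workers + 1, dtype=int)
    with ThreadPoolExecutor(max_workers=n_workers) as executor:
        futures = [
            executor.submit(apply_chunk, func, src, dst, lo, hi)
            for lo, hi in zip(bounds[:-1], bounds[1:])
        ]
        for future in futures:
            future.result()  # surface any exception raised in a worker
    return dst


x = np.random.default_rng(0).random(5_000_000)
y = parallel_ufunc(np.exp, x)
print(np.allclose(y, np.exp(x)))  # True
```

Because the chunks never overlap, the threads need no locks, and the result has the same shape and dtype as a single np.exp(x) call.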

Just Assume That Sharing NumPy Arrays is Slow…

We may know from our experience with multiprocessing that sharing arrays is slow.

Our program may require moving arrays between different Python processes.

That's just the cost of doing business, except:

- We may get much worse performance by ignoring modern, fast ways to copy arrays in memory and modern shared memory-backed arrays, approaches that can be hundreds of times faster.

Take NumPy Concurrency Seriously

We cannot approach adding concurrency to our NumPy programs naively.

Work is required to know what method to use and when.

There is a lot to know about how to speed up NumPy programs running on one machine with thread and process-based concurrency, and it seems no one is talking about it.

Don't worry, I've done the work for you.

I wrote this book to show you how.

Introducing:

"Concurrent NumPy in Python"

Faster NumPy With BLAS, Python Threads, and Multiprocessing

"Concurrent NumPy in Python" is my new book that will teach you how to make your NumPy programs faster using concurrency, from scratch.

This book distills only what you need to know to get started and be effective with concurrent NumPy, super fast.

It's exactly how I would teach you concurrent NumPy if we were sitting together, pair programming.

Technical Details:

- 32 tutorials taught by example with full working code listings.

- 96 .py code files included that you can use as templates and extend.

- 341 pages for on-screen reading open next to your IDE or editor.

- 2 formats (PDF and EPUB) for screen, tablet, and Kindle reading.

- 1.7 megabyte .zip download that contains the ebook and code.

Everything you need to get started, then get really good at concurrent NumPy, all in one book.

"Concurrent NumPy in Python" will lead you on the path from a Python developer frustrated with slow NumPy code to a developer who can confidently develop concurrent NumPy tasks and programs.

- No fluff.

- Just concepts and code.

- With full working examples.

The book is divided into 32 practical tutorials.

The idea is you can read and work through one or two tutorials per day, and become capable in about one month.

It is the shortest and most effective path I know of for transforming you into a concurrent NumPy Python developer.

Choose Your Package:

BOOK

You get the book:

- Concurrent NumPy

PDF and EPUB formats

Includes all source code files

BOOKSHELF

BEST VALUE

You get everything (15+ books):

- Threading Jump-Start

- Multiprocessing Jump-Start

- Asyncio Jump-Start

- ThreadPool Jump-Start

- Pool Jump-Start

- ThreadPoolExecutor Jump-Start

- ProcessPoolExecutor Jump-Start

- Threading Interview Questions

- Multiprocessing Interview Questions

- Asyncio Interview Questions

- Executors Interview Questions

- Concurrent File I/O

- Concurrent NumPy

- Python Benchmarking

- Python Asyncio Mastery

Bonus, you also get:

- Concurrent For Loops Guide

- API Mind Maps (4)

- API Cheat Sheets (7)

That is $210 of Value!

(you get a 10.95% discount)

All prices are in USD.

(you can also get the book from the Amazon, Gumroad, or Google Play stores)

See What Customers Are Saying:

Paul Vasquez

Your book “Concurrent NumPy in Python” has been an invaluable tool for me. The clarity with which you present concurrent programming and the effectiveness of the practical examples have significantly improved my understanding of the topic. Without a doubt, it’s an outstanding work that has elevated my Python skills to a new level.

G. M. Zhao

Very practical, carefully designed, and benefited a lot.

Tan Boon Chiat

Pankaj Kushwaha

Thank you Jason Brownlee, I just finished reading ‘Concurrent NumPy in Python’ and I’m really excited about it! The explanations were clear, and I learned a lot. Thanks for creating such awesome Python content!

You Get 32 Laser-Focused Tutorials

This book is designed to bring you up to speed with how to use concurrent NumPy as fast as possible.

As such, it is not exhaustive. There are many topics that are interesting or helpful but are not on the critical path to getting you productive fast.

This book is divided into 4 parts:

- Part 01: Background. Review of NumPy and Python concurrency and why concurrency is an important topic for NumPy tasks.

- Part 02: NumPy and BLAS Threads. Tutorials on how to get the most out of NumPy using BLAS threads.

- Part 03: NumPy and Python Threads. Tutorials on how to get the most out of NumPy using Python threads.

- Part 04: NumPy and Python Multiprocessing. Tutorials on how to get the most out of NumPy using Python multiprocessing.

The book is further divided into 32 tutorials across the four parts:

Part 01: Background:

- Tutorial 01: Introduction

- Tutorial 02: Tour of NumPy

- Tutorial 03: Tour of Python Concurrency

- Tutorial 04: NumPy Concurrency Matters

Part 02: NumPy With BLAS Threads:

- Tutorial 05: What is BLAS for NumPy

- Tutorial 06: BLAS/LAPACK Libraries for NumPy

- Tutorial 07: Install BLAS Libraries for NumPy

- Tutorial 08: Check Installed BLAS Library for NumPy

- Tutorial 09: NumPy Functions That Use BLAS/LAPACK

- Tutorial 10: How to Configure the Number of BLAS Threads

- Tutorial 11: Parallel Matrix Multiplication

- Tutorial 12: Parallel Matrix Math Functions

- Tutorial 13: Parallel Matrix Solvers

- Tutorial 14: Parallel Matrix Decompositions

Part 03: NumPy With Python Threads:

- Tutorial 15: NumPy Releases the Global Interpreter Lock

- Tutorial 16: Parallel Array Fill

- Tutorial 17: Parallel Element-Wise Array Arithmetic

- Tutorial 18: Parallel Element-Wise Array Math Functions

- Tutorial 19: Parallel Arrays of Random Numbers

- Tutorial 20: Case Study: BLAS vs Python Threading

- Tutorial 21: Case Study: BLAS vs Python Multiprocessing

Part 04: NumPy With Python Multiprocessing:

- Tutorial 22: How to Share a NumPy Array Between Processes

- Tutorial 23: Share NumPy Array Via Global Variable

- Tutorial 24: Share NumPy Array Via Function Argument

- Tutorial 25: Share NumPy Array Via Pipe

- Tutorial 26: Share NumPy Array Via Queue

- Tutorial 27: Share NumPy Array Via ctype Array

- Tutorial 28: Share NumPy Array Via ctype RawArray

- Tutorial 29: Share NumPy Array Via SharedMemory

- Tutorial 30: Share NumPy Array Via Memory-Mapped File

- Tutorial 31: Share NumPy Array Via Manager

- Tutorial 32: Fastest Way To Share NumPy Array

Next, let's take a closer look at how tutorial lessons are structured.

Highly structured lessons on how you can get results

This book teaches concurrent NumPy by example.

Each lesson has a specific learning outcome and is designed to be completed in less than one hour.

Each lesson is also designed to be self-contained so that you can read the lessons out of order if you choose, such as dipping into topics in the future to solve specific programming problems.

The lessons were written with some intentional repetition of key APIs and concepts. These gentle reminders are designed to help embed the common usage patterns in your mind so that they become second nature.

We Python developers learn best from real and working code examples.

Next, let's look at what you will know after finishing the book.

Your Learning Outcomes

No more frustration with SLOW NumPy!

This book will transform you into a Python developer who can confidently bring concurrent NumPy tasks to your projects.

1. You will confidently use built-in multithreaded NumPy functions via BLAS libraries, including:

- How to install, check, and configure BLAS libraries for use with NumPy functions.

- How to get the most out of BLAS multithreading for array multiplication and common math functions.

- How to get the most out of BLAS multithreading for matrix solvers and matrix decomposition functions.

2. You will confidently use Python threads with NumPy functions that release the GIL, including:

- How to efficiently fill NumPy arrays with initial or random values using Python threads.

- How to efficiently perform element-wise array arithmetic using Python threads.

- How to efficiently calculate common element-wise mathematical functions on arrays using Python threads.

3. You will confidently know how to choose between and mix BLAS and Python concurrency, including:

- How to mix BLAS threads and Python threading to get the best performance when working with many arrays.

- How to mix BLAS threads and Python multiprocessing to get the best performance when working with many arrays.

4. You will confidently know how to efficiently share NumPy arrays between Python processes, including:

- How to use a suite of techniques that copy and share NumPy arrays between Python processes and the benefits and limitations of each.

- How to effectively benchmark the performance of many techniques for sharing arrays between Python processes.

You will learn from code examples, not pages and pages of fluff.

Get your copy now:


100% Money-Back Guarantee

(no questions asked)

I want you to actually learn concurrent NumPy so well that you can confidently use it on current and future projects.

I designed my book to read just like I'm sitting beside you, showing you how.

I want you to be happy. I want you to win!

I stand behind all of my materials. I know they get results and I'm proud of them.

Nevertheless, if you decide that my books are not a good fit for you, I'll understand.

I offer a 100% money-back guarantee, no questions asked.

To get a refund, contact me with your purchase name and email address.

Frequently Asked Questions

This section covers some frequently asked questions.

If you have any questions, contact me directly, any time, about anything. I will do my best to help.

What are your prerequisites?

This book is designed for Python developers who want to discover how to develop programs with faster NumPy using concurrency.

Specifically, this book is for:

- Developers that can write simple Python programs.

- Developers that need better performance from current or future Python programs.

- Developers that are working with NumPy-based tasks.

This book does not require that you are an expert in the Python programming language, NumPy programming, or concurrency.

Specifically:

- You do not need to be an expert Python developer.

- You do not need to be an expert with NumPy.

- You do not need to be an expert in concurrency.

What version of Python do you need?

All code examples use Python 3.

Python 3.10+ to be exact.

Python 2.7 is not supported because it reached its "end of life" in 2020.

Are there code examples?

Yes.

There are 96 .py code files.

Most lessons have many complete, standalone, and fully working code examples.

The book is provided in a .zip file that includes a src/ directory containing all source code files used in the book.

How long will the book take you to finish?

Work at your own pace.

I recommend about one tutorial per day, over 32 days (1 month).

There's no rush and I recommend that you take your time.

This book is designed to be read linearly from start to finish, guiding you from being a Python developer at the start of the book to being a Python developer that can confidently use concurrency to dramatically speed up NumPy tasks in your project by the end of the book.

In order to avoid overload, I recommend completing one or two tutorials per day, such as in the evening or during your lunch break. This will allow you to complete the transformation in about one month.

I recommend maintaining a directory with all of the code you type from the tutorials in the book. This will allow you to use the directory as your own private code library, allowing you to copy-paste code into your projects in the future.

I recommend trying to adapt and extend the examples in the tutorials. Play with them. Break them. This will help you learn more about how the API works and why we follow specific usage patterns.

What format is the book?

You can read the book on your screen, next to your editor.

You can also read the book on your tablet, away from your workstation.

The ebook is provided in 2 formats:

- PDF (.pdf): perfect for reading on the screen or tablet.

- EPUB (.epub): perfect for reading on a tablet with a Kindle or iBooks app.

Many developers like to read the ebook on a tablet or iPad.

How can you get more help?

The lessons in this book were designed to be easy to read and follow.

Nevertheless, sometimes we need a little extra help.

A list of further reading resources is provided at the end of each lesson. These can be helpful if you are interested in learning more about the topic covered, such as fine-grained details of the standard library and API functions used.

The conclusions at the end of the book provide a complete list of websites and books that can help if you want to learn more about Python concurrency and the relevant parts of the Python standard library. It also lists places where you can go online and ask questions about Python concurrency.

Finally, if you ever have questions about the lessons or code in this book, you can contact me any time and I will do my best to help. My contact details are provided at the end of the book.

How many pages is the book?

The PDF is 341 pages (US letter-sized pages).

Can you print the book?

Yes.

That said, I think it's better to work through it on the screen.

- You can search, skip, and jump around really fast.

- You can copy and paste code examples.

- You can compare code output directly.

Is there digital rights management?

No.

The ebooks have no DRM.

Do you get FREE updates?

Yes.

I update all of my books often.

You can email me any time and I will send you the latest version for free.

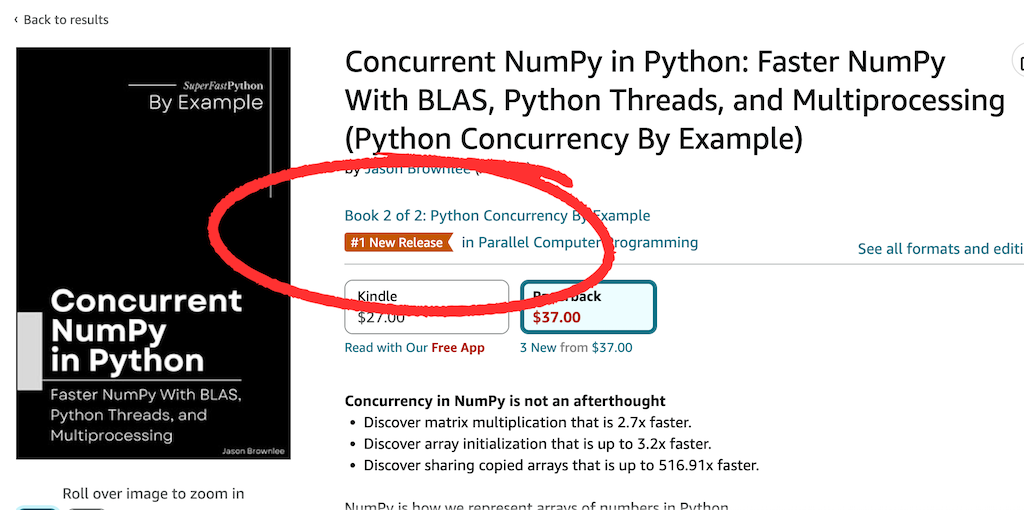

Can you buy the book elsewhere?

Yes!

You can get a Kindle or paperback version from Amazon.

Many developers prefer to buy from the Kindle store on Amazon directly.

In fact, it was a #1 Amazon Best Seller on the day it was released:

Can you get a paperback version?

Yes!

You can get a paperback version from Amazon.

Below is a photo of me holding the paperback version of the book.

Can you read a sample?

Yes.

You can read a book sample via the Google Books "preview" feature or via the Amazon "look inside" feature:

Generally, if you like my writing style on SuperFastPython, then you will like the books.

Can you download the source code now?

The source code (.py) files are included in the .zip with the book.

Nevertheless, you can also download all of the code from the dedicated GitHub Project:

Does concurrent NumPy work on your operating system?

Yes.

Python concurrency is built into the Python programming language and works equally well on:

- Windows

- macOS

- Linux

Does concurrent NumPy work on your hardware?

Yes.

Python concurrency is agnostic to the underlying CPU hardware.

If you are running Python on a modern computer, then you will have support for concurrency, e.g. Intel, AMD, ARM, and Apple Silicon CPUs are supported.

About the Author

Hi, I'm Jason Brownlee, Ph.D.

I'm a Python developer, husband, and father to two boys.

I want to share something with you.

I am obsessed with Python concurrency, but I wasn't always this way.

My background is in Artificial Intelligence and I have a few fancy degrees and past job titles to prove it.

You can see my LinkedIn profile here:

- Jason Brownlee LinkedIn Profile

(follow me if you like)

So what?

Well, AI and machine learning have been hot for the last decade. I have spent that time as a Python machine learning developer:

- Working on a range of predictive modeling projects.

- Writing more than 1,000 tutorials.

- Authoring more than 20 books.

There's one thing about machine learning in Python: your code must be fast.

Really fast.

Modeling code is already generally fast, built on top of C and Fortran code libraries.

But you know how it is on real projects…

You always have to glue bits together, wrap the fast code and run it many times, and so on.

Making code run fast requires Python concurrency and I have spent most of the last decade using all the different types of Python concurrency available.

Including threading, multiprocessing, asyncio, and the suite of popular libraries.

I know my way around Python concurrency and I am deeply frustrated at the bad rap it has.

This is why I started SuperFastPython.com where you can find hundreds of free tutorials on Python concurrency.

And this is why I wrote this book.

Praise for Super Fast Python

Python developers write to me all the time and let me know how helpful my tutorials and books have been.

Below are some select examples posted to LinkedIn.

What Are You Waiting For?

Stop reading outdated StackOverflow answers.

Learn Python concurrency correctly, step-by-step.

Start today.

Buy now and get your copy in seconds!
