The ThreadPoolExecutor class in Python can be used to download multiple files at the same time.

This can dramatically speed up the download process compared to downloading each file sequentially, one by one.

In this tutorial, you will discover how to concurrently download multiple files from the internet using threads in Python.

After completing this tutorial, you will know:

- How to download files from the internet one-by-one in Python and how slow it can be.

- How to use the ThreadPoolExecutor to manage a pool of worker threads.

- How to update the code to download multiple files at the same time and dramatically accelerate the process.

Let’s dive in.

How to Download Files From the Internet (slowly)

Downloading files from the internet is a common task.

For example:

- You may need to download all documents for offline reference.

- You might need to create a backup of all .zip files for a project.

- You might need a local copy of a file archive.

If you did not know how to code, then you might solve this problem by loading the webpage in a browser, then clicking each file in turn to save it to your hard drive.

For more than a few files, it could take a long time to click each file in turn and then wait for all of the downloads to complete.

Thankfully, we’re developers, so we can write a script to first discover all of the files on a webpage to download, then download and store them all locally.

Some examples of web pages that list files we might want to download include:

- All versions of the Beautiful Soup library version 4.

- All bot modifications for the Quake 1 computer game.

- All documents for Python version 3.9.7.

In these examples, there is a single HTML webpage that provides relative links to locally hosted files.

Ideally, the site would link to multiple small or modestly sized files and the server itself would allow multiple connections from each client.

This may not always be the case, as many servers limit the number of connections per client, sometimes to 10 or even just 1, to protect against denial-of-service attacks.

We can develop a program to download the files one by one.

There are a few parts to this program:

- Download URL File

- Parse HTML for Files to Download

- Save Download to Local File

- Coordinate Download Process

- Complete Example

Let’s look at each piece in turn.

Note: we are only going to implement the most basic error handling. If you change the target URL, you may need to adapt the code for the specific details of the HTML and files you wish to download.

Download URL File

The first step is to download a file specified by a URL.

There are many ways to download a URL in Python. In this case, we will use the urlopen() function from the urllib.request module to open a connection to the URL, then call the read() method on the connection to download the contents of the file into memory.

To ensure the connection is closed automatically once we are finished downloading, we will use a context manager, i.e. the with statement.

You can learn more about opening and reading from URL connections in the Python documentation for the urllib.request module.

The download_url() function below implements this, taking a URL and returning the contents of the file.

```python
from urllib.request import urlopen

# load a file from a URL, returns content of downloaded file
def download_url(urlpath):
    # open a connection to the server
    with urlopen(urlpath) as connection:
        # read the contents of the url as bytes and return it
        return connection.read()
```

We will use this function to download the HTML page that lists the files and to download the contents of each file listed.

An improvement on this function would be to add a timeout to the connection, perhaps of a few seconds. urlopen() would then raise an exception if the host does not respond in time, which is good practice when opening connections to remote servers.
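A minimal sketch of this improvement follows. The 5-second timeout and the choice to print an error and return None (rather than let the exception propagate) are assumptions for illustration, not part of the tutorial's code. urlopen() accepts a timeout argument in seconds, and connection failures, HTTP errors and timeouts are all raised as subclasses of OSError, which is what the sketch catches.

```python
from urllib.request import urlopen

# load a file from a URL with a timeout; returns the content, or None on failure
# (the 5-second default and the return-None policy are assumptions for this sketch)
def download_url(urlpath, timeout=5):
    try:
        # open a connection, giving up if the server does not respond in time
        with urlopen(urlpath, timeout=timeout) as connection:
            # read the contents of the url as bytes and return it
            return connection.read()
    except OSError as e:
        # URLError, HTTPError and socket timeouts are all subclasses of OSError
        print(f'Failed to download {urlpath}: {e}')
        return None
```

If you adopt this version, callers need to check for None before saving, for example by returning (link, None) from the function that saves each file.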

Parse HTML for Files to Download

Once the HTML page of URLs is downloaded, we must parse it and extract all of the links to files.

I recommend the BeautifulSoup Python library anytime HTML documents need to be parsed.

If you’re new to BeautifulSoup, you can install it easily with your Python package manager, such as pip:

```shell
pip install beautifulsoup4
```

First, we must decode the raw bytes we downloaded into a text string.

This can be achieved by calling the decode() method on the bytes object and specifying a text encoding, such as UTF-8.

```python
...
# decode the provided content as UTF-8 text
html = content.decode('utf-8')
```

Next, we can parse the text of the HTML document with BeautifulSoup, using Python's built-in 'html.parser' parser.

It can be a good idea to use a more sophisticated parser that behaves the same on all platforms, such as lxml or html5lib, but these must be installed separately. In this case, we will use the built-in parser as it does not require you to install anything extra.

```python
...
# parse the document as best we can
soup = BeautifulSoup(html, 'html.parser')
```

We can then retrieve all <a href=""> HTML tags from the document as these will contain the URLs for the files we wish to download.

```python
...
# find all of the <a href=""> tags in the document
atags = soup.find_all('a')
```

We can then iterate through all of the found tags and retrieve the value of the href attribute on each, i.e. the link to a file. If a tag does not have an href attribute (which would be odd, e.g. a named anchor), we return None for that tag instead.

This can be done in a list comprehension, giving us a list of URLs to files to download and possibly some None values.

```python
...
# get all href values (links) or None if not present (unlikely)
return [t.get('href', None) for t in atags]
```

Tying this all together, the get_urls_from_html() function below takes the downloaded HTML content and returns a list of file URLs.

```python
from bs4 import BeautifulSoup

# decode downloaded html and extract all <a href=""> links
def get_urls_from_html(content):
    # decode the provided content as UTF-8 text
    html = content.decode('utf-8')
    # parse the document as best we can
    soup = BeautifulSoup(html, 'html.parser')
    # find all of the <a href=""> tags in the document
    atags = soup.find_all('a')
    # get all href values (links) or None if not present (unlikely)
    return [t.get('href', None) for t in atags]
```

We will use this function to extract the links to all of the files listed on the HTML page we wish to download from.

Save Download to Local File

We know how to download files and get links out of HTML. Another important piece we need is to save downloaded URLs to local files.

This can be done using the open() built-in Python function.

We will open the file in write mode and binary format, as we will likely be downloading .zip files or similar.

As with downloading URLs above, we will use a context manager (the with statement) to ensure that the file is closed once we are finished writing its contents.

The save_file() function below implements this, taking the local path for saving the file and the content of the file downloaded from a URL.

```python
# save provided content to the local path
def save_file(path, data):
    # open the local file for writing
    with open(path, 'wb') as file:
        # write all provided data to the file
        file.write(data)
```

You may want to add error handling here, such as a failure to write or the case of the file already existing locally.
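One way to handle both cases is sketched below; it is an assumption of ours rather than the tutorial's method. Opening the file in exclusive-creation mode ('xb') raises FileExistsError instead of silently overwriting an existing file, and catching OSError covers other write failures. Returning True or False is a hypothetical convention so that the caller can report what happened.

```python
# save provided content to the local path without overwriting existing files;
# returns True if the file was written, False otherwise (a hypothetical convention)
def save_file(path, data):
    try:
        # 'xb' = create a new file for binary writing, failing if it already exists
        with open(path, 'xb') as file:
            file.write(data)
        return True
    except FileExistsError:
        # must come before OSError, since FileExistsError is a subclass of it
        print(f'Skipped {path}: file already exists')
        return False
    except OSError as e:
        # e.g. permission denied, missing directory, or disk full
        print(f'Failed to save {path}: {e}')
        return False
```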

We need to do some work in order to be able to use this function.

For example, we need to check that we have a link from the href attribute (that it’s not None), and that the URL we are trying to download looks like a file, i.e. ends with a two- or three-character extension such as .gz or .zip. This is some primitive error checking, or filtering, over the types of URLs we would like to download.

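```python
...
# skip bad urls or bad filenames
if link is None or link == '../':
    return
# check for no file extension (a '.' three or four characters from the end);
# slicing, unlike indexing, does not raise IndexError on very short links
if not (link[-4:-3] == '.' or link[-3:-2] == '.'):
    return
```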

HTML pages that list files typically include relative rather than absolute links.

That is, the links to the files to be downloaded will be relative to the HTML file. We must convert them to absolute links (with http://... at the front) in order to be able to download the files.

We can use the urljoin() function from the urllib.parse module to convert any relative URLs to absolute URLs for us so that we can download the file.

```python
...
# convert relative link to absolute link
absurl = urljoin(url, link)
```

The absolute URL can then be downloaded using our download_url() function developed above.

```python
...
# download the content of the file
data = download_url(absurl)
```

Next, we need to determine the name of the file from the URL that we will be saving locally. This can be achieved using the basename() function from the os.path module.

```python
...
# get the filename
filename = basename(absurl)
```

We can then determine the local path for saving the file by combining the local directory path with the filename. The join() function from the os.path module will do this for us correctly based on our platform (Unix, Windows, MacOS).

```python
...
# construct the output path
outpath = join(path, filename)
```

We now have enough information to save the file by calling our save_file() function.

```python
...
# save to file
save_file(outpath, data)
```

The download_url_to_file() function below ties this all together, taking the URL for the HTML page that lists files, a link to one file on that page to download, and the local path for saving files. It downloads the URL, saves it locally as a file, and returns the relative link and local file path in a tuple.

If the file was not saved, we return a tuple with just the relative URL and None for the local path.

We choose to return some information from this function so that the caller can report progress about the success or failure of downloading each file.

```python
from os.path import basename
from os.path import join
from urllib.parse import urljoin

# download one file to a local directory
def download_url_to_file(url, link, path):
    # skip bad urls or bad filenames
    if link is None or link == '../':
        return (link, None)
    # check for no file extension (a '.' three or four characters from the end);
    # slicing, unlike indexing, does not raise IndexError on very short links
    if not (link[-4:-3] == '.' or link[-3:-2] == '.'):
        return (link, None)
    # convert relative link to absolute link
    absurl = urljoin(url, link)
    # download the content of the file
    data = download_url(absurl)
    # get the filename
    filename = basename(absurl)
    # construct the output path
    outpath = join(path, filename)
    # save to file
    save_file(outpath, data)
    # return results
    return (link, outpath)
```

Coordinate Download Process

Finally, we need to coordinate the overall process.

First, the URL for the HTML page must be downloaded using our download_url() function defined above.

```python
...
# download the html webpage
data = download_url(url)
```

Next, we need to create any directories on the local path where we have chosen to store the local files. We can do this using the makedirs() function from the os module and ignore the case where the directories already exist.

```python
...
# create a local directory to save files
makedirs(path, exist_ok=True)
```

Next, we will retrieve a list of all relative links to files listed on the HTML page by calling our get_urls_from_html() function, and report how many links were found.

```python
...
# parse html and retrieve all href urls listed
links = get_urls_from_html(data)
# report progress
print(f'Found {len(links)} links in {url}')
```

Finally, we will iterate through the list of links, download each to a local file with a call to our download_url_to_file() function, then report the success or failure of the download.

```python
...
# download each file on the webpage
for link in links:
    # download the url to a local file
    link, outpath = download_url_to_file(url, link, path)
    # check for a link that was skipped
    if outpath is None:
        print(f'>skipped {link}')
    else:
        print(f'Downloaded {link} to {outpath}')
```

Tying this together, the download_all_files() function below takes a URL to an HTML webpage that lists files and a local path to download files and downloads all files listed on the page, reporting progress along the way.

```python
# download all files on the provided webpage to the provided path
def download_all_files(url, path):
    # download the html webpage
    data = download_url(url)
    # create a local directory to save files
    makedirs(path, exist_ok=True)
    # parse html and retrieve all href urls listed
    links = get_urls_from_html(data)
    # report progress
    print(f'Found {len(links)} links in {url}')
    # download each file on the webpage
    for link in links:
        # download the url to a local file
        link, outpath = download_url_to_file(url, link, path)
        # check for a link that was skipped
        if outpath is None:
            print(f'>skipped {link}')
        else:
            print(f'Downloaded {link} to {outpath}')
```

Complete Example

We now have all of the elements to download all files listed on an HTML webpage.

Let’s test it out.

Choose one of the URLs listed above and use the functions that we have developed to download all files listed.

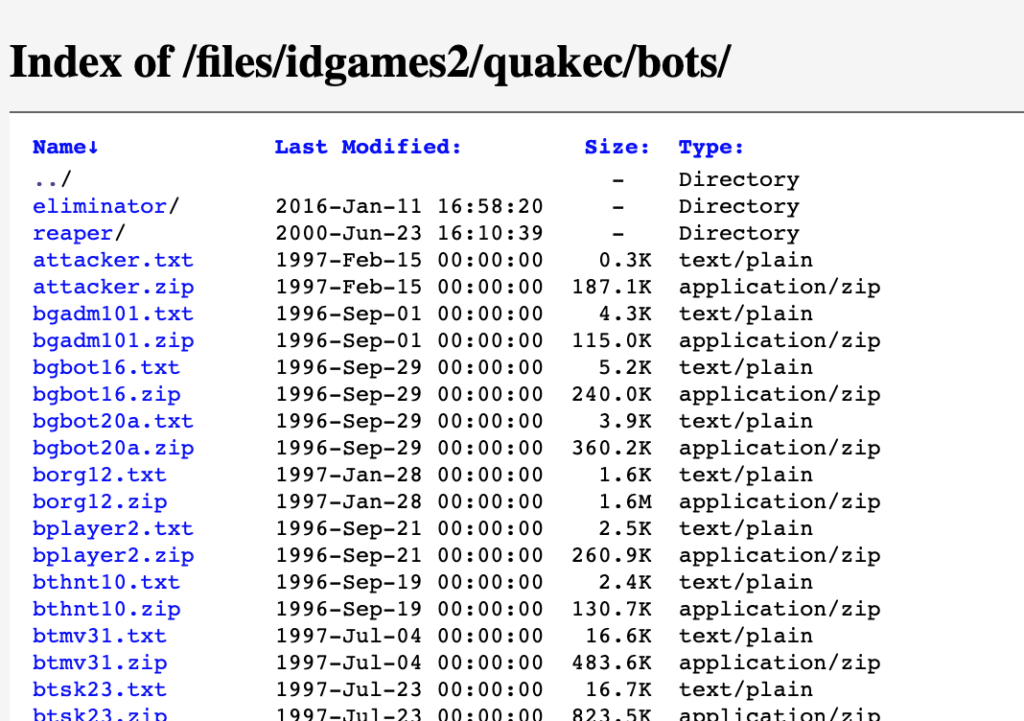

In this case, I will choose to download all bot modifications developed by third parties for the Quake 1 computer game.

|

1 2 3 |

... # url of html page that lists all files to download URL = 'https://www.quaddicted.com/files/idgames2/quakec/bots/' |

Below is an example of what this webpage looks like.

We will save all files in a local directory under a tmp/ subdirectory.

```python
...
# local directory to save all files on the html page
PATH = 'tmp'
```

We can then call the download_all_files() function that we developed above to kick off the download process.

```python
...
# download all files on the html webpage
download_all_files(URL, PATH)
```

Tying this together, the complete example of downloading all files on the HTML webpage is listed below.

```python
# SuperFastPython.com
# download all files from a website sequentially
from os import makedirs
from os.path import basename
from os.path import join
from urllib.request import urlopen
from urllib.parse import urljoin
from bs4 import BeautifulSoup

# load a file from a URL, returns content of downloaded file
def download_url(urlpath):
    # open a connection to the server
    with urlopen(urlpath) as connection:
        # read the contents of the url as bytes and return it
        return connection.read()

# decode downloaded html and extract all <a href=""> links
def get_urls_from_html(content):
    # decode the provided content as UTF-8 text
    html = content.decode('utf-8')
    # parse the document as best we can
    soup = BeautifulSoup(html, 'html.parser')
    # find all of the <a href=""> tags in the document
    atags = soup.find_all('a')
    # get all href values (links) or None if not present (unlikely)
    return [t.get('href', None) for t in atags]

# save provided content to the local path
def save_file(path, data):
    # open the local file for writing
    with open(path, 'wb') as file:
        # write all provided data to the file
        file.write(data)

# download one file to a local directory
def download_url_to_file(url, link, path):
    # skip bad urls or bad filenames
    if link is None or link == '../':
        return (link, None)
    # check for no file extension (slicing avoids IndexError on short links)
    if not (link[-4:-3] == '.' or link[-3:-2] == '.'):
        return (link, None)
    # convert relative link to absolute link
    absurl = urljoin(url, link)
    # download the content of the file
    data = download_url(absurl)
    # get the filename
    filename = basename(absurl)
    # construct the output path
    outpath = join(path, filename)
    # save to file
    save_file(outpath, data)
    # return results
    return (link, outpath)

# download all files on the provided webpage to the provided path
def download_all_files(url, path):
    # download the html webpage
    data = download_url(url)
    # create a local directory to save files
    makedirs(path, exist_ok=True)
    # parse html and retrieve all href urls listed
    links = get_urls_from_html(data)
    # report progress
    print(f'Found {len(links)} links in {url}')
    # download each file on the webpage
    for link in links:
        # download the url to a local file
        link, outpath = download_url_to_file(url, link, path)
        # check for a link that was skipped
        if outpath is None:
            print(f'>skipped {link}')
        else:
            print(f'Downloaded {link} to {outpath}')

# url of html page that lists all files to download
URL = 'https://www.quaddicted.com/files/idgames2/quakec/bots/'
# local directory to save all files on the html page
PATH = 'tmp'
# download all files on the html webpage
download_all_files(URL, PATH)
```

Running the example will first download the HTML webpage that lists all files, then download each file listed on the page into the tmp/ directory.

This will take a long time, perhaps 3-4 minutes, as the files are downloaded one at a time.

As the program runs, it will report useful progress.

First, it reports that 113 links were found on the page and that some links, such as directories, were skipped. It then reports the filename and local path of each file saved.

```text
Found 113 links in https://www.quaddicted.com/files/idgames2/quakec/bots/
>skipped ../
>skipped eliminator/
>skipped reaper/
Downloaded attacker.txt to tmp/attacker.txt
Downloaded attacker.zip to tmp/attacker.zip
Downloaded bgadm101.txt to tmp/bgadm101.txt
Downloaded bgadm101.zip to tmp/bgadm101.zip
Downloaded bgbot16.txt to tmp/bgbot16.txt
Downloaded bgbot16.zip to tmp/bgbot16.zip
...
```

How long did it take to run on your computer?

Let me know in the comments below.

Next, we will look at the ThreadPoolExecutor class that can be used to create a pool of worker threads that will allow us to speed up this download process.


How to Create a Pool of Worker Threads With ThreadPoolExecutor

We can use the ThreadPoolExecutor class to speed up the download of multiple files listed on an HTML webpage.

The ThreadPoolExecutor class is provided as part of the concurrent.futures module for easily running concurrent tasks.

The ThreadPoolExecutor provides a pool of worker threads, which is different from the ProcessPoolExecutor that provides a pool of worker processes.

Generally, ThreadPoolExecutor should be used for concurrent IO-bound tasks, like downloading URLs, and the ProcessPoolExecutor should be used for concurrent CPU-bound tasks, like calculating.

The ThreadPoolExecutor was designed to be easy and straightforward to use. It is like the “automatic mode” for Python threads.

- Create the thread pool by calling ThreadPoolExecutor().

- Submit tasks and get futures by calling submit().

- Wait and get results as tasks complete by calling as_completed().

- Shut down the thread pool by calling shutdown().
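The four steps above can be sketched end to end on a toy task. Here a hypothetical slow_square() function stands in for an IO-bound job such as a download, with time.sleep() simulating the wait on the network.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

# a stand-in for an IO-bound task, e.g. downloading one file
def slow_square(x):
    time.sleep(0.1)  # simulate waiting on the network
    return x * x

# 1. create the thread pool
pool = ThreadPoolExecutor(max_workers=4)
# 2. submit tasks and keep the returned futures
futures = [pool.submit(slow_square, i) for i in range(8)]
# 3. collect results as each task completes (the order may vary)
results = [future.result() for future in as_completed(futures)]
# 4. shut down the pool, waiting for any running tasks to finish
pool.shutdown()

print(sorted(results))  # [0, 1, 4, 9, 16, 25, 36, 49]
```

With four workers, the eight 0.1-second tasks should take roughly 0.2 seconds in total rather than the 0.8 seconds they would take one after another.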

Create the Thread Pool

First, a ThreadPoolExecutor instance must be created.

By default, it will create a pool of threads equal to the number of logical CPU cores in your system plus four, capped at a maximum of 32 (this has been the default since Python 3.8).

This is good for most purposes.

```python
...
# create a thread pool with the default number of worker threads
pool = ThreadPoolExecutor()
```

You can run tens to hundreds of concurrent IO-bound threads per CPU, although perhaps not thousands or tens of thousands.

You can specify the number of threads to create in the pool via the max_workers argument; for example:

```python
...
# create a thread pool with 10 worker threads
pool = ThreadPoolExecutor(max_workers=10)
```

Submit Tasks to the Thread Pool

Once created, we can send tasks into the pool to be completed using the submit() method.

This method takes the function to call, followed by any arguments to pass to it, and returns a Future object.

The Future object is a promise to return the results from the task (if any) and provides a way to determine if a specific task has been completed or not.

```python
...
# submit a task
future = pool.submit(my_task, arg1, arg2, ...)
```

The return value from a function executed by the thread pool can be accessed via the result() method on the Future object. It will wait until the result is available if needed, or return immediately if it already is.

For example:

```python
...
# get the result from a future
result = future.result()
```

Get Results as Tasks Complete

The beauty of performing tasks concurrently is that we can get results as they become available rather than waiting for tasks to be completed in the order they were submitted.

The concurrent.futures module provides an as_completed() function that we can use to get results for tasks as they are completed, just like its name suggests.

We can call it with a list of Future objects created by calling submit(), and it will yield each future as it completes, in whatever order that happens.

For example, we can use a list comprehension to submit the tasks and create the list of future objects:

```python
...
# submit all tasks into the thread pool and create a list of futures
futures = [pool.submit(my_func, task) for task in tasks]
```

Then get results for tasks as they complete in a for loop:

```python
...
# iterate over all submitted tasks and get results as they are available
for future in as_completed(futures):
    # get the result
    result = future.result()
    # do something with the result...
```

Shut Down the Thread Pool

Once all tasks are completed, we can close down the thread pool, which will release each thread and any resources it may hold (e.g. the stack space).

```python
...
# shutdown the thread pool
pool.shutdown()
```

An easier way to use the thread pool is via the context manager (the with keyword), which ensures it is closed automatically once we are finished with it.

```python
...
# create a thread pool
with ThreadPoolExecutor(max_workers=10) as pool:
    # submit tasks
    futures = [pool.submit(my_func, task) for task in tasks]
    # get results as they are available
    for future in as_completed(futures):
        # get the result
        result = future.result()
        # do something with the result...
```

Now that we are familiar with ThreadPoolExecutor and how to use it, let’s look at how we can adapt our program for downloading URLs to make use of it.

How to Download Multiple Files Concurrently

The program for downloading URLs to file can be adapted to use the ThreadPoolExecutor with very little change.

The download_all_files() function currently enumerates the list of links extracted from the HTML page and calls the download_url_to_file() function for each.

This loop can be updated to submit() tasks to a ThreadPoolExecutor object, and then we can wait on the future objects via a call to as_completed() and report progress.

For example:

```python
...
# create the pool of worker threads
with ThreadPoolExecutor(max_workers=20) as exe:
    # dispatch all download tasks to worker threads
    futures = [exe.submit(download_url_to_file, url, link, path) for link in links]
    # report results as they become available
    for future in as_completed(futures):
        # retrieve result
        link, outpath = future.result()
        # check for a link that was skipped
        if outpath is None:
            print(f'>skipped {link}')
        else:
            print(f'Downloaded {link} to {outpath}')
```

Here, we use 20 threads for up to 20 concurrent connections to the server that hosts the files. This may need to be adjusted depending on the number of concurrent connections supported by the server from which you wish to download files.

The updated version of the download_all_files() function that uses the ThreadPoolExecutor is listed below.

```python
# download all files on the provided webpage to the provided path
def download_all_files(url, path):
    # download the html webpage
    data = download_url(url)
    # create a local directory to save files
    makedirs(path, exist_ok=True)
    # parse html and retrieve all href urls listed
    links = get_urls_from_html(data)
    # report progress
    print(f'Found {len(links)} links in {url}')
    # create the pool of worker threads
    with ThreadPoolExecutor(max_workers=20) as exe:
        # dispatch all download tasks to worker threads
        futures = [exe.submit(download_url_to_file, url, link, path) for link in links]
        # report results as they become available
        for future in as_completed(futures):
            # retrieve result
            link, outpath = future.result()
            # check for a link that was skipped
            if outpath is None:
                print(f'>skipped {link}')
            else:
                print(f'Downloaded {link} to {outpath}')
```

Tying this together, the complete example of downloading all Quake 1 bots concurrently using the ThreadPoolExecutor is listed below.

```python
# SuperFastPython.com
# download all files from a website concurrently
from os import makedirs
from os.path import basename
from os.path import join
from urllib.request import urlopen
from urllib.parse import urljoin
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import as_completed
from bs4 import BeautifulSoup

# load a file from a URL, returns content of downloaded file
def download_url(urlpath):
    # open a connection to the server
    with urlopen(urlpath) as connection:
        # read the contents of the url as bytes and return it
        return connection.read()

# decode downloaded html and extract all <a href=""> links
def get_urls_from_html(content):
    # decode the provided content as UTF-8 text
    html = content.decode('utf-8')
    # parse the document as best we can
    soup = BeautifulSoup(html, 'html.parser')
    # find all of the <a href=""> tags in the document
    atags = soup.find_all('a')
    # get all href values (links) or None if not present (unlikely)
    return [t.get('href', None) for t in atags]

# save provided content to the local path
def save_file(path, data):
    # open the local file for writing
    with open(path, 'wb') as file:
        # write all provided data to the file
        file.write(data)

# download one file to a local directory
def download_url_to_file(url, link, path):
    # skip bad urls or bad filenames
    if link is None or link == '../':
        return (link, None)
    # check for no file extension (slicing avoids IndexError on short links)
    if not (link[-4:-3] == '.' or link[-3:-2] == '.'):
        return (link, None)
    # convert relative link to absolute link
    absurl = urljoin(url, link)
    # download the content of the file
    data = download_url(absurl)
    # get the filename
    filename = basename(absurl)
    # construct the output path
    outpath = join(path, filename)
    # save to file
    save_file(outpath, data)
    # return results
    return (link, outpath)

# download all files on the provided webpage to the provided path
def download_all_files(url, path):
    # download the html webpage
    data = download_url(url)
    # create a local directory to save files
    makedirs(path, exist_ok=True)
    # parse html and retrieve all href urls listed
    links = get_urls_from_html(data)
    # report progress
    print(f'Found {len(links)} links in {url}')
    # create the pool of worker threads
    with ThreadPoolExecutor(max_workers=20) as exe:
        # dispatch all download tasks to worker threads
        futures = [exe.submit(download_url_to_file, url, link, path) for link in links]
        # report results as they become available
        for future in as_completed(futures):
            # retrieve result
            link, outpath = future.result()
            # check for a link that was skipped
            if outpath is None:
                print(f'>skipped {link}')
            else:
                print(f'Downloaded {link} to {outpath}')

# url of html page that lists all files to download
URL = 'https://www.quaddicted.com/files/idgames2/quakec/bots/'
# local directory to save all files on the html page
PATH = 'tmp'
# download all files on the html webpage
download_all_files(URL, PATH)
```

Running the example will first download and parse the HTML page that lists all of the files.

Each file on the page is then downloaded concurrently, with up to 20 files being downloaded at the same time.

This dramatically speeds up the task, taking 10-15 seconds depending on your internet connection, compared to 3-4 minutes for the sequential version. That is roughly a 12x to 24x speedup.
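To measure this on your own machine, one option is to wrap the call with time.perf_counter(). The timed() helper below is a hypothetical convenience, shown with a dummy function so it runs without a network connection; for this tutorial you would call timed(download_all_files, URL, PATH) instead.

```python
import time

# run fn(*args) and return the elapsed wall-clock time in seconds
def timed(fn, *args):
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start

# a dummy stand-in for download_all_files(URL, PATH)
elapsed = timed(time.sleep, 0.2)
print(f'Took {elapsed:.2f} seconds')
```

Timing both the sequential and concurrent versions this way, then dividing the first time by the second, gives the speedup for your own connection.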

Progress is reported like last time, but we can see that the files are reported out of order.

Here, we can see that the smaller .txt files finish before any of the .zip files.

```text
Found 113 links in https://www.quaddicted.com/files/idgames2/quakec/bots/
>skipped ../
>skipped eliminator/
>skipped reaper/
Downloaded bgadm101.txt to tmp/bgadm101.txt
Downloaded attacker.txt to tmp/attacker.txt
Downloaded bgbot16.txt to tmp/bgbot16.txt
Downloaded bgbot20a.txt to tmp/bgbot20a.txt
Downloaded borg12.txt to tmp/borg12.txt
Downloaded bplayer2.txt to tmp/bplayer2.txt
...
```

How long did it take to run on your computer?

Let me know in the comments below.


Extensions

This section lists ideas for extending the tutorial.

- Add connection timeout: Update the download_url() function to add a timeout to the connection and handle the exception if thrown.

- Add file saving error handling: Update save_file() to handle the case of not being able to write the file and/or a file already existing at that location.

- Only download relative URLs: Add a check for the extracted links to ensure that the program only downloads relative URLs and ignores absolute URLs or URLs for a different host.
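As a starting point for the last extension, here is a minimal sketch of a relative-link check. is_relative_link() is a hypothetical helper name: it treats a link as relative when urlparse() finds neither a scheme (such as https:) nor a host, which also rejects protocol-relative links like //example.com/file.zip.

```python
from urllib.parse import urlparse

# return True if the link is relative, i.e. has no scheme and no host
# (hypothetical helper for the "only download relative URLs" extension)
def is_relative_link(link):
    if link is None:
        return False
    parts = urlparse(link)
    return parts.scheme == '' and parts.netloc == ''
```

Such a check could be added to the filtering at the top of download_url_to_file(), alongside the existing tests for None and '../'.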

Share your extensions in the comments below; it would be great to see what you come up with.


Takeaways

In this tutorial, you learned how to download multiple files concurrently using a pool of worker threads in Python. You learned:

- How to download files from the internet sequentially in Python and how slow it can be.

- How to use the ThreadPoolExecutor to manage a pool of worker threads.

- How to update the code to download multiple files at the same time and dramatically accelerate the process.

Do you have any questions?

Leave your question in a comment below and I will reply fast with my best advice.
