Python Threading provides concurrency in Python with native threads.

The threading API uses thread-based concurrency and is the preferred way to implement concurrency in Python (along with asyncio). With threading, we perform concurrent blocking I/O tasks and calls into C-based Python libraries (like NumPy) that release the Global Interpreter Lock.

This book-length guide provides a detailed and comprehensive walkthrough of the Python Threading API.

Some tips:

- You may want to bookmark this guide and read it over a few sittings.

- You can download a zip of all code used in this guide.

- You can get help, ask a question in the comments or email me.

- You can jump to the topics that interest you via the table of contents (below).

Let’s dive in.

Python Threads

So what are threads and why do we care?

What Are Threads

A thread refers to a thread of execution in a computer program.

Each program is a process and has at least one thread that executes instructions for that process.

Thread: The operating system object that executes the instructions of a process.

— PAGE 273, THE ART OF CONCURRENCY, 2009.

When we run a Python script, it starts an instance of the Python interpreter that runs our code in the main thread. The main thread is the default thread of a Python process.

We may develop our program to perform tasks concurrently, in which case we may need to create and run new threads. These will be concurrent threads of execution without our program, such as:

- Executing function calls concurrently.

- Executing object methods concurrently.

A Python thread is an object representation of a native thread provided by the underlying operating system.

When we create and run a new thread, Python will make system calls on the underlying operating system and request a new thread be created and to start running the new thread.

This highlights that Python threads are real threads, as opposed to simulated software threads, e.g. fibers or green threads.

The code in new threads may or may not be executed in parallel (at the same time), even though the threads are executed concurrently.

There are a number of reasons for this, such as:

- The underlying hardware may or may not support parallel execution (e.g. one vs multiple CPU cores).

- The Python interpreter may or may not permit multiple threads to execute in parallel.

This highlights the distinction between code that can run out of order (concurrent) from the capability to execute simultaneously (parallel).

- Concurrent: Code that can be executed out of order.

- Parallel: Capability to execute code simultaneously.

Next, let’s consider the important differences between threads and processes.

Thread vs Process

A process refers to a computer program.

Each process is in fact one instance of the Python interpreter that executes Python instructions (Python byte-code), which is a slightly lower level than the code you type into your Python program.

Process: The operating system’s spawned and controlled entity that encapsulates an executing application. A process has two main functions. The first is to act as the resource holder for the application, and the second is to execute the instructions of the application.

— PAGE 271, THE ART OF CONCURRENCY, 2009.

The underlying operating system controls how new processes are created. On some systems, that may require spawning a new process, and on others, it may require that the process is forked. The operating-specific method used for creating new processes in Python is not something we need to worry about as it is managed by your installed Python interpreter.

A thread always exists within a process and represents the manner in which instructions or code is executed.

A process will have at least one thread, called the main thread. Any additional threads that we create within the process will belong to that process.

The Python process will terminate once all (non background threads) are terminated.

- Process: An instance of the Python interpreter has at least one thread called the MainThread.

- Thread: A thread of execution within a Python process, such as the MainThread or a new thread.

Next, let’s take a look at threads in Python.

Life-Cycle of a Thread

A thread in Python is represented as an instance of the threading.Thread class.

Once a thread is started, the Python interpreter will interface with the underlying operating system and request that a new native thread be created. The threading.Thread instance then provides a Python-based reference to this underlying native thread.

Each thread follows the same life-cycle. Understanding the stages of this life-cycle can help when getting started with concurrent programming in Python.

For example:

- The difference between creating and starting a thread.

- The difference between run and start.

- The difference between blocked and terminated

And so on.

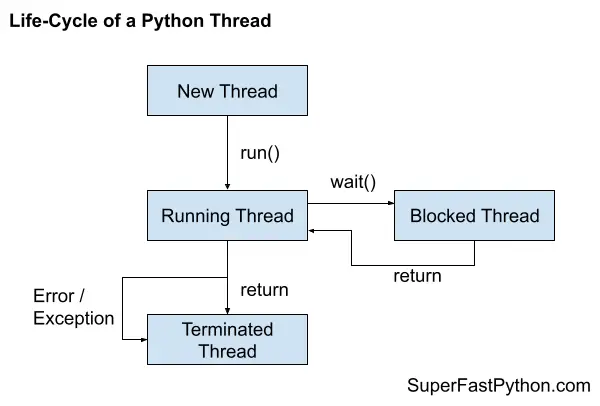

A Python thread may progress through three steps of its life-cycle: a new thread, a running thread, and a terminated thread.

While running, the thread may be executing code or may be blocked, waiting on something such as another thread or an external resource. Although, not all threads may block, it is optional based on the specific use case for the new thread.

- New Thread.

- Running Thread.

- Blocked Thread (optional).

- Terminated Thread.

A new thread is a thread that has been constructed by creating an instance of the threading.Thread class.

A new thread can transition to a running thread by calling the start() function.

A running thread may block in many ways, such as reading or writing from a file or a socket or by waiting on a concurrency primitive such as a semaphore or a lock. After blocking, the thread will run again.

Finally, a thread may terminate once it has finished executing its code or by raising an error or exception.

The following figure summarizes the states of the thread life-cycle and how the thread may transition through these states.

You can learn more about the thread life-cycle in this tutorial:

Next, let’s look at how to run a function in a new thread.

Run loops using all CPUs, download your FREE book to learn how.

Run a Function in a Thread

Python functions can be executed in a separate thread using the threading.Thread class.

In this section we will look at a few examples of how to run functions in another thread.

How to Run a Function In a Thread

To run a function in another thread:

- Create an instance of the threading.Thread class.

- Specify the name of the function via the “target” argument.

- Call the start() function.

The function executed in another thread may have arguments in which case they can be specified as a tuple and passed to the “args” argument of the threading.Thread class constructor or as a dictionary to the “kwargs” argument.

The start() function will return immediately and the operating system will execute the function in a separate thread as soon as it is able.

We do not have control over when the thread will execute precisely or which CPU core will execute it. Both of these are low-level responsibilities that are handled by the underlying operating system.

Next, let’s look at a worked example.

Example of Running a Function in a Thread

First, we can define a custom function that will be executed in another thread.

We will define a simple function that blocks for a moment then prints a statement.

The function can have any name we like, in this case we’ll name it task().

|

1 2 3 4 5 6 |

# a custom function that blocks for a moment def task(): # block for a moment sleep(1) # display a message print('This is from another thread') |

Next, we can create an instance of the threading.Thread class and specify our function name as the “target” argument in the constructor.

|

1 2 3 |

... # create a thread thread = Thread(target=task) |

Once created we can run the thread which will execute our custom function in a new native thread, as soon as the operating system can.

|

1 2 3 |

... # run the thread thread.start() |

The start() function does not block, meaning it returns immediately.

We can explicitly wait for the new thread to finish executing by calling the join() function. This is not needed as the main thread will not exit until the new thread has completed.

|

1 2 3 4 |

... # wait for the thread to finish print('Waiting for the thread...') thread.join() |

You can learn more about joining a thread in this tutorial:

Tying this together, the complete example of executing a function in another thread is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

# SuperFastPython.com # example of running a function in another thread from time import sleep from threading import Thread # a custom function that blocks for a moment def task(): # block for a moment sleep(1) # display a message print('This is from another thread') # create a thread thread = Thread(target=task) # run the thread thread.start() # wait for the thread to finish print('Waiting for the thread...') thread.join() |

Running the example first creates the threading.Thread then calls the start() function. This does not start the thread immediately, but instead allows the operating system to schedule the function to execute as soon as possible.

The main thread then prints a message waiting for the thread to complete, then calls the join() function to explicitly block and wait for the new thread to finish executing.

Once the custom function returns, the thread is closed. The join() function then returns and the main thread exists.

|

1 2 |

Waiting for the thread... This is from another thread |

Next, let’s look at how we can run a function that takes arguments in a new thread.

Example of Running a Function in a Thread With Arguments

We can execute functions in another thread that take arguments.

This can be demonstrated by first updating our task() function from the previous section to take two arguments, one for the time in seconds to block and the second for a message to display.

|

1 2 3 4 5 6 |

# a custom function that blocks for a moment def task(sleep_time, message): # block for a moment sleep(sleep_time) # display a message print(message) |

Finally, we can update the call to the threading.Thread constructor to specify the two arguments in order that the function expects them as a tuple via the “args” argument.

|

1 2 3 |

... # create a thread thread = Thread(target=task, args=(1.5, 'New message from another thread')) |

Tying this together, the complete example of executing a custom function that takes arguments in a separate thread is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

# SuperFastPython.com # example of running a function with arguments in another thread from time import sleep from threading import Thread # a custom function that blocks for a moment def task(sleep_time, message): # block for a moment sleep(sleep_time) # display a message print(message) # create a thread thread = Thread(target=task, args=(1.5, 'New message from another thread')) # run the thread thread.start() # wait for the thread to finish print('Waiting for the thread...') thread.join() |

Running the example creates the thread specifying the function name and the arguments to the function.

The thread is started and the function blocks for the parameterized number of seconds and prints the parameterized message.

|

1 2 |

Waiting for the thread... New message from another thread |

We now know how to execute functions in another thread via the “target” argument.

You can learn more about running functions in new threads in this tutorial:

Next let’s look at how we might run a function in another thread by extending the threading.Thread class.

Extend the Thread Class

We can also execute functions in another thread by extending the threading.Thread class and overriding the run() function.

In this section we will look at some examples of extending the threading.Thread class.

How to Extend the Thread Class

The threading.Thread class can be extended to run code in another thread.

This can be achieved by first extending the class, just like any other Python class.

For example:

|

1 2 3 |

# custom thread class class CustomThread(Thread): # ... |

Then the run() function of the threading.Thread class must be overridden to contain the code that you wish to execute in another thread.

For example:

|

1 2 3 |

# override the run function def run(self): # ... |

And that’s it.

Given that it is a custom class, you can define a constructor for the class and use it to pass in data that may be needed in the run() function, stored as instance variables (attributes).

You can also define additional functions on the class to split up the work you may need to complete in another thread.

Finally, attributes can also be used to store the results of any calculation or IO performed in another thread that may need to be retrieved afterward.

Next, let’s look at a worked example of extending the threading.Thread class.

Example of Extending the Thread Class

First, we can define a class that ends the threading.Thread class.

We will name the class something arbitrary such as “CustomThread“

|

1 2 3 |

# custom thread class class CustomThread(Thread): # ... |

We can then override the run() instance method and define the code that we wish to execute in another thread.

In this case, we will block for a moment and then print a message.

|

1 2 3 4 5 6 |

# override the run function def run(self): # block for a moment sleep(1) # display a message print('This is coming from another thread') |

Next, we can create an instance of our CustomThread class and call the start() function to begin executing our run() function in another thread. Internally, the start() function will call the run() function.

The code will then run in a new thread as soon as the operating system can schedule it.

|

1 2 3 4 5 |

... # create the thread thread = CustomThread() # start the thread thread.start() |

Finally, we wait for the new thread to finish executing.

|

1 2 3 4 |

... # wait for the thread to finish print('Waiting for the thread to finish') thread.join() |

Tying this together, the complete example of executing code in another thread by extending the threading.Thread class is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

# SuperFastPython.com # example of extending the Thread class from time import sleep from threading import Thread # custom thread class class CustomThread(Thread): # override the run function def run(self): # block for a moment sleep(1) # display a message print('This is coming from another thread') # create the thread thread = CustomThread() # start the thread thread.start() # wait for the thread to finish print('Waiting for the thread to finish') thread.join() |

Running the example first creates an instance of the thread, then executes the content of the run() function.

Meanwhile, the main thread waits for the new thread to finish its execution, before exiting.

|

1 2 |

Waiting for the thread to finish This is coming from another thread |

You now know how to run code in a new thread by extending the threading.Thread class.

You can learn more about extending the threading.Thread class in this tutorial:

Next, let’s look at how we might return values from a new thread.

Example of Extending the Thread Class and Returning Values

We may need to retrieve data from the thread, such as a return value.

There is no way for the run() function to return a value to the start() function and back to the caller.

Instead, we can return values from our run() function by storing them as instance variables and having the caller retrieve the data from those instance variables.

Let’s demonstrate this with an example. We can update the previous example above to indirectly return a value from the extended threading.Thread class.

First, we can update the run() function to store some data as an instance variable (also called a Python attribute).

|

1 2 3 |

... # store return value self.value = 99 |

The updated version of the extended threading.Thread class with this change is listed below.

|

1 2 3 4 5 6 7 8 9 10 |

# custom thread class class CustomThread(Thread): # override the run function def run(self): # block for a moment sleep(1) # display a message print('This is coming from another thread') # store return value self.value = 99 |

Next, we can retrieve the “returned” (stored) value from the run function in the main thread.

This can be achieved by accessing the attribute directly.

|

1 2 3 4 |

... # get the value returned from run value = thread.value print(f'Got: {value}') |

Tying this together, the complete example of returning a value from another thread indirectly in an extended threading.Thread class is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

# SuperFastPython.com # example of extending the Thread class and return values from time import sleep from threading import Thread # custom thread class class CustomThread(Thread): # override the run function def run(self): # block for a moment sleep(1) # display a message print('This is coming from another thread') # store return value self.value = 99 # create the thread thread = CustomThread() # start the thread thread.start() # wait for the thread to finish print('Waiting for the thread to finish') thread.join() # get the value returned from run value = thread.value print(f'Got: {value}') |

Running the example creates and executes the new thread as per normal.

The run() function stores a return value as an instance variable, that is then accessed and reported by the main thread after the new thread has finished executing.

|

1 2 3 |

Waiting for the thread to finish This is coming from another thread Got: 99 |

You can learn more about returning values from a thread in this tutorial:

Next, let’s look at some properties of thread instances.

Free Python Threading Course

Download your FREE threading PDF cheat sheet and get BONUS access to my free 7-day crash course on the threading API.

Discover how to use the Python threading module including how to create and start new threads and how to use a mutex locks and semaphores

Thread Instance Attributes

An instance of the Thread class provides a handle of a thread of execution.

As such, it provides attributes that we can use to query properties and the status of the underlying thread.

Let’s look at some examples.

Query Thread Name

Each thread has a name.

Threads are named automatically in a somewhat unique manner within each process with the form “Thread-%d” where %d is the integer indicating the thread number within the process, e.g. Thread-1 for the first thread created.

We can access the name of a thread via the “name” attribute, for example:

|

1 2 3 |

... # report the thread name print(thread.name) |

The example below creates an instance of the threading.Thread class and reports the default name of the thread.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of accessing the thread name from threading import Thread # create the thread thread = Thread() # report the thread name print(thread.name) |

Running the example creates the thread and reports the default name assigned to the thread within the process.

|

1 |

Thread-1 |

Query Thread Daemon

A thread may be a daemon thread.

Daemon threads is the name given to background threads. By default, threads are non-daemon threads.

A Python program will only exit when all non-daemon threads have finished exiting. For example, the main thread is a non-daemon thread. This means that daemon threads can run in the background and do not have to finish or be explicitly excited for the program to end.

We can determine if a thread is a daemon thread via the “daemon” attribute.

|

1 2 3 |

... # report the daemon attribute print(thread.daemon) |

The example creates an instance of the threading.Thread class and reports whether the thread is a daemon or not.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of assessing whether a thread is a daemon from threading import Thread # create the thread thread = Thread() # report the daemon attribute print(thread.daemon) |

Running the example reports that the thread is not a daemon thread, the default for new threads.

|

1 |

False |

Query Thread Identifier

Each thread has a unique identifier (id) within a Python process, assigned by the Python interpreter.

The identifier is a read-only positive integer value and is assigned only after the thread has been started.

We can access the identifier assigned to a threading.Thread instance via the “ident” property.

|

1 2 3 |

... # report the thread identifier print(thread.ident) |

The example below creates a threading.Thread instance and reports the assigned identifier.

|

1 2 3 4 5 6 7 8 9 10 11 |

# SuperFastPython.com # example of reporting the thread identifier from threading import Thread # create the thread thread = Thread() # report the thread identifier print(thread.ident) # start the thread thread.start() # report the thread identifier print(thread.ident) |

Running the example creates the thread instance then confirms that it does not have an identifier before it is started. The thread is started and its unique identifier is reported.

Note, your identifier will differ as the thread will have a different identifier each time the code is run.

|

1 2 |

None 123145502363648 |

Query Thread Native Identifier

Each thread has a unique identifier assigned by the operating system.

Python threads are real native threads, meaning that each thread we create is actually created and managed (scheduled) by the underlying operating system. As such, the operating system will assign a unique integer to each thread that is created on the system (across processes).

The native thread identifier can be accessed via the “native_id” property and is assigned after the thread has been started.

|

1 2 3 |

... # report the native thread identifier print(thread.native_id) |

The example below creates an instance of a threading.Thread and reports the assigned native thread id.

|

1 2 3 4 5 6 7 8 9 10 11 |

# SuperFastPython.com # example of reporting the native thread identifier from threading import Thread # create the thread thread = Thread() # report the native thread identifier print(thread.native_id) # start the thread thread.start() # report the native thread identifier print(thread.native_id) |

Running the example first creates the thread and confirms that it does not have a native thread identifier before it was started.

The thread is then started and the assigned native identifier is reported.

Note, your native thread identifier will differ as the thread will have a different identifier each time the code is run.

|

1 2 |

None 3061545 |

Query Thread Alive

A thread instance can be alive or dead.

An alive thread means that the run() method of the threading.Thread instance is currently executing.

This means that before the start() method is called and after the run() method has completed, the thread will not be alive.

We can check if a Thread is alive via the is_alive() method.

|

1 2 3 |

... # report the thread is alive print(thread.is_alive()) |

The example below creates a threading.Thread instance then checks whether it is alive.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of assessing whether a thread is alive from threading import Thread # create the thread thread = Thread() # report the thread is alive print(thread.is_alive()) |

Running the example creates a new Thread instance then reports that the thread is not alive.

False

We can update the example so that the thread sleeps for a moment and then report the alive status of the thread while it is running.

This can be achieved by setting the “target” argument to the threading.Thread to a lambda anonymous function that calls the time.sleep() function.

|

1 2 3 |

... # create the thread thread = Thread(target=lambda:sleep(1)) |

We can then start the thread by calling the start() function and report the alive status of the thread while it is running.

|

1 2 3 4 5 |

... # start the thread thread.start() # report the thread is alive print(thread.is_alive()) |

Finally, we can wait for the thread to finish and report the status of the thread afterward.

|

1 2 3 4 5 |

... # wait for the thread to finish thread.join() # report the thread is alive print(thread.is_alive()) |

Tying this together, the complete example of checking the alive status of a thread while it is running is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

# SuperFastPython.com # example of assessing whether a running thread is alive from time import sleep from threading import Thread # create the thread thread = Thread(target=lambda:sleep(1)) # report the thread is alive print(thread.is_alive()) # start the thread thread.start() # report the thread is alive print(thread.is_alive()) # wait for the thread to finish thread.join() # report the thread is alive print(thread.is_alive()) |

Running the example creates the thread instance and confirms that it is not alive before the start() function has been called.

The thread is then started and blocked for a second, meanwhile we check the alive status in the main thread which is reported as True, the thread is alive.

The thread finishes and the alive status is reported again, showing that indeed the thread is no longer alive.

|

1 2 3 |

False True False |

Now that we are familiar with some attributes of a threading.Thread instance, let’s look at how we might configure a Thread.

Overwhelmed by the python concurrency APIs?

Find relief, download my FREE Python Concurrency Mind Maps

Configure Threads

Instances of the threading.Thread class can be configured.

There are two properties of a thread that can be configured, they are the name of the thread and whether the thread is a daemon or not.

Let’s take a closer look at each.

How to Configure Thread Name

Threads can be assigned custom names.

The name of a Thread can be set via the “name” argument in the threading.Thread constructor.

For example:

|

1 2 3 |

... # create a thread with a custom name thread = Thread(name='MyThread') |

The example below demonstrates how to set the name of a thread in the threading.Thread constructor.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of setting the thread name in the constructor from threading import Thread # create a thread with a custom name thread = Thread(name='MyThread') # report thread name print(thread.name) |

Running the example creates the thread with the custom name then reports the name of the thread.

|

1 |

MyThread |

The name of the thread can also be set via the “name” property.

For example:

|

1 2 3 |

... # set the name thread.name = 'MyThread' |

The example below demonstrates this by creating an instance of a thread and then setting the name via the property.

|

1 2 3 4 5 6 7 8 9 |

# SuperFastPython.com # example of setting the thread name via the property from threading import Thread # create a thread thread = Thread() # set the name thread.name = 'MyThread' # report thread name print(thread.name) |

Running the example creates the thread and sets the name then reports that the new name was assigned correctly.

|

1 |

MyThread |

You can learn more about how to configure the thread name in this tutorial:

Next, let’s take a closer look at daemon threads.

How to Configure Thread Daemon

A daemon thread is a background thread.

Daemon is pronounced “dee-mon“, like the alternate spelling “demon“.

The ideas is that backgrounds are like “daemons” or spirits (from the ancient Greek) that do tasks for you in the background. You might also refer to daemon threads as daemonic.

A thread may be configured to be a daemon or not, and most threads in concurrent programming, including the main thread, are non-daemon threads (not background threads) by default.

A thread can be configured to be a daemon by setting the “daemon” argument to True in the threading.Thread constructor.

For example:

|

1 2 3 |

... # create a daemon thread thread = Thread(daemon=True) |

The example below shows how to create a new daemon thread.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of setting a thread to be a daemon via the constructor from threading import Thread # create a daemon thread thread = Thread(daemon=True) # report if the thread is a daemon print(thread.daemon) |

Running the example creates a new thread and configures it to be a daemon thread via the constructor.

|

1 |

True |

We can also configure a thread to be a daemon thread after it has been constructed via the “daemon” property.

For example:

|

1 2 3 |

... # configure the thread to be a daemon thread.daemon = True |

The example below creates a new threading.Thread instance then configures it to be a daemon thread via the property.

|

1 2 3 4 5 6 7 8 9 |

# SuperFastPython.com # example of setting a thread to be a daemon via the property from threading import Thread # create a thread thread = Thread() # configure the thread to be a daemon thread.daemon = True # report if the thread is a daemon print(thread.daemon) |

Running the example creates a new thread instance then configures it to be a daemon thread, then reports the daemon status.

|

1 |

True |

You can learn more about daemon threads in this tutorial:

Now that we are familiar with how to configure new threads, let’s take a closer look at the built-in main thread.

Main Thread

Each Python process is created with one default thread called the “main thread“.

In this section we will take a closer look at the main thread.

When you execute a Python program, it is executing in the main thread.

The main thread is the default thread created for each Python process.

In normal conditions, the main thread is the thread from which the Python interpreter was started.

— threading — Thread-based parallelism

The main thread in each Python process always has the name “MainThread” and is not a daemon thread.

Once the main thread exits, the Python process will exit, assuming there are no other non-daemon threads running.

There is a “main thread” object; this corresponds to the initial thread of control in the Python program. It is not a daemon thread.

— threading — Thread-based parallelism

We can acquire a threading.Thread instance that represents the main thread by calling the threading.current_thread() function from within the main thread.

For example:

|

1 2 3 |

... # get the main thread thread = current_thread() |

The example below demonstrates this and reports properties of the main thread.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of getting the current thread for the main thread from threading import current_thread # get the main thread thread = current_thread() # report properties for the main thread print(f'name={thread.name}, daemon={thread.daemon}, id={thread.ident}') |

Running the example retrieves a threading.Thread instance for the current thread which is the main thread, then reports the details.

Note, your main thread will have a different identifier each time the program is run.

|

1 |

name=MainThread, daemon=False, id=4386115072 |

We can also get a threading.Thread instance for the main thread from any thread by calling the threading.main_thread() function.

For example:

|

1 2 3 |

... # get the main thread thread = main_thread() |

The example below demonstrates this and reports the properties of the main thread

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # example of getting the main thread from threading import main_thread # get the main thread thread = main_thread() # report properties for the main thread print(f'name={thread.name}, daemon={thread.daemon}, id={thread.ident}') |

Running the example acquires the threading.Thread instance that represents the main thread and reports the thread properties.

Note, your main thread will have a different identifier each time the program is run.

|

1 |

name=MainThread, daemon=False, id=4644216320 |

You can learn more about the main thread in this tutorial:

Now that we are familiar with the main thread, let’s look at some additional threading utilities.

Thread Utilities

There are a number of utilities we can use when working with threads within a Python process.

These utilities are provided as “threading” module functions.

We have already seen two of these functions in the previous section, specifically threading.current_thread() and threading.main_thread().

In this section we will review a number of additional utility functions.

Number of Active Threads

We can discover the number of active threads in a Python process.

This can be achieved via the threading.active_count() function that returns an integer that indicates the number threads that are “alive“.

|

1 2 3 |

... # get the number of active threads count = active_count() |

We can demonstrate this with a short example.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # report the number of active threads from threading import active_count # get the number of active threads count = active_count() # report the number of active threads print(count) |

Running the example reports the number of active threads in the process.

Given that there is only a single thread, the main thread, the count is one.

|

1 |

1 |

Current Thread

We can get a threading.Thread instance for the thread running the current code.

This can be achieved via the threading.current_thread() function that returns a threading.Thread instance.

|

1 2 3 |

... # get the current thread thread = current_thread() |

We can demonstrate this with a short example.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

# SuperFastPython.com # retrieve the current thread within from threading import Thread from threading import current_thread # function to get the current thread def task(): # get the current thread thread = current_thread() # report the name print(thread.name) # create a thread thread = Thread(target=task) # start the thread thread.start() # wait for the thread to exit thread.join() |

Running the example first creates a new threading.Thread to run a custom function.

The custom function acquires a threading.Thread instance for the currently running thread and uses it to report the name of the currently running thread.

|

1 |

Thread-1 |

Thread Identifier

We can get the Python thread identifier for the current thread.

This can be achieved via the threading.get_ident() function.

|

1 2 3 |

... # get the id for the current thread identifier = get_ident() |

Recall that each thread within a Python process has a unique identifier assigned by the interpreter.

We can demonstrate this with a short example.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # report the id for the current thread from threading import get_ident # get the id for the current thread identifier = get_ident() # report the thread id print(identifier) |

Running the example gets and reports the identifier of the currently running thread.

Note, your identifier will differ as a new identifier is assigned to a thread each time the code is run.

|

1 |

4662242816 |

Native Thread Identifier

We can get the native thread identifier for the current thread assigned by the operating system.

This can be achieved via the threading.get_native_id() function.

|

1 2 3 |

... # get the native id for the current thread identifier = get_native_id() |

Recall that each thread within a Python process has a unique native identifier assigned by the operating system.

We can demonstrate this with a short example.

|

1 2 3 4 5 6 7 |

# SuperFastPython.com # report the native id for the current thread from threading import get_native_id # get the native id for the current thread identifier = get_native_id() # report the thread id print(identifier) |

Running the example gets and reports the native identifier of the currently running thread.

Note, your native identifier will differ as a new identifier is assigned to a thread each time the code is run.

|

1 |

374772 |

Enumerate Active Threads

We can get a list of all active threads within a Python process.

This can be achieved via the threading.enumerate() function.

Only those threads that are “alive” will be included in the list, meaning those threads that are currently executing their run() function.

The list will always include the main thread.

We can demonstrate this with a short example.

|

1 2 3 4 5 6 7 8 |

# SuperFastPython.com # enumerate all active threads import threading # get a list of all active threads threads = threading.enumerate() # report the name of all active threads for thread in threads: print(thread.name) |

Running the example first retrieves a list of all active threads within the Python process.

The list of active threads is then iterated, reporting the name of each thread. In this case, the list only includes the main thread.

|

1 |

MainThread |

Thread Exception Handling

We can manage unhandled exceptions that are raised within threads.

In this section, we will take a closer look at threading.Thread exception handling.

Unhandled Exception

An unhandled exception can occur in a new thread.

The effect will be that the thread will unwind and report the message on standard error.

Unwinding the thread means that the thread will stop executing at the point of the exception (or error) and that the exception will bubble up the stack in the thread until it reaches the top level, e.g. the run() function.

We can demonstrate this with an example.

Firstly, we can define a target function that will block for a moment then raise an exception.

|

1 2 3 4 5 6 |

# target function that raises an exception def work(): print('Working...') sleep(1) # rise an exception raise Exception('Something bad happened') |

Next, we can create a thread that will call the target function and wait for the function to complete.

|

1 2 3 4 5 6 7 8 9 |

... # create a thread thread = Thread(target=work) # run the thread thread.start() # wait for the thread to finish thread.join() # continue on print('Continuing on...') |

Tying this together, the complete example is listed below

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

# SuperFastPython.com # example of an unhandled exception in a thread from time import sleep from threading import Thread # target function that raises an exception def work(): print('Working...') sleep(1) # rise an exception raise Exception('Something bad happened') # create a thread thread = Thread(target=work) # run the thread thread.start() # wait for the thread to finish thread.join() # continue on print('Continuing on...') |

Running the exception creates the thread and starts executing it.

The thread blocks for a moment and raises an exception.

The exception bubbles up to the run() function and is then reported on standard error (on the terminal).

Importantly, the failure in the thread does not impact the main thread, which continues on executing.

|

1 2 3 4 5 6 7 8 |

Working... Exception in thread Thread-1: Traceback (most recent call last): ... exception_unhandled.py", line 11, in work raise Exception('Something bad happened') Exception: Something bad happened Continuing on... |

Exception Hook

We can specify how to handle unhandled errors and exceptions that occur within new threads via the exception hook.

By default, there is no exception hook, in which case the sys.excepthook function is called that reports the familiar message.

We can specify a custom exception hook function that will be called whenever a threading.Thread fails with an unhandled Error or Exception.

This can be achieved via the threading.excepthook function.

First, we must define a function that takes a single argument that will be an instance of the ExceptHookArgs class, containing details of the exception and thread.

|

1 2 3 |

# custom exception hook def custom_hook(args): # ... |

We can then specify the exception hook function to be called whenever an unhandled exception bubbles up to the top level of a thread.

|

1 2 3 |

... # set the exception hook threading.excepthook = custom_hook |

We can demonstrate this with an example by updating the unhandled exception in the previous section.

We can define a custom hook function that reports a single line to standard out.

|

1 2 3 4 |

# custom exception hook def custom_hook(args): # report the failure print(f'Thread failed: {args.exc_value}') |

The complete example is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

# SuperFastPython.com # example of an unhandled exception in a thread from time import sleep import threading # target function that raises an exception def work(): print('Working...') sleep(1) # rise an exception raise Exception('Something bad happened') # custom exception hook def custom_hook(args): # report the failure print(f'Thread failed: {args.exc_value}') # set the exception hook threading.excepthook = custom_hook # create a thread thread = threading.Thread(target=work) # run the thread thread.start() # wait for the thread to finish thread.join() # continue on print('Continuing on...') |

Running the example creates and runs the thread.

The new thread blocks for a moment, then fails with an exception.

The exception bubbles up to the top level of the thread at which point the custom exception hook function is called. Our custom message is then reported.

The main thread then continues to execute as per normal.

This can be helpful if we want to perform special actions when a thread fails unexpectedly, such as log to a file.

|

1 2 3 |

Working... Thread failed: Something bad happened Continuing on... |

You can learn more about handling unexpected exceptions in threads in this tutorial:

Next, let’s look at the limitations of threads in Python.

Limitations of Threads in Python

The reference Python interpreter is referred to as CPython.

It is the free version of Python that you can download from python.org to develop and run Python programs.

The CPython Python interpreter generally does not permit more than one thread to run at a time.

This is achieved through a mutual exclusion (mutex) lock within the interpreter that ensures that only one thread at a time can execute Python bytecodes in the Python virtual machine.

In CPython, due to the Global Interpreter Lock, only one thread can execute Python code at once (even though certain performance-oriented libraries might overcome this limitation).

— THREADING — THREAD-BASED PARALLELISM

This lock is referred to as the Global Interpreter Lock or GIL for short.

In CPython, the global interpreter lock, or GIL, is a mutex that protects access to Python objects, preventing multiple threads from executing Python bytecodes at once. The GIL prevents race conditions and ensures thread safety.

— GLOBAL INTERPRETER LOCK, PYTHON WIKI.

This means that although we might write concurrent code with threads in Python and run our code on hardware with many CPU cores, we may not be able to execute our code in parallel.

There are some exceptions to this.

Specifically, the GIL is released by the Python interpreter sometimes to allow other threads to run.

Such as when the thread is blocked, such as performing IO with a socket or file, or often if the thread is executing computationally intensive code in a C library, like hashing bytes.

Luckily, many potentially blocking or long-running operations, such as I/O, image processing, and NumPy number crunching, happen outside the GIL. Therefore it is only in multithreaded programs that spend a lot of time inside the GIL, interpreting CPython bytecode, that the GIL becomes a bottleneck.

— GLOBAL INTERPRETER LOCK, PYTHON WIKI.

Therefore, although in most cases CPython will prevent parallel execution of threads, it is allowed in some circumstances. These circumstances represent the base use case for adopting threads in your Python programs.

Next, let’s look at specific cases when you should consider using threads.

When to Use a Thread

The reference Python interpreter CPython prevents more than one thread from executing bytecode at the same time.

This is achieved using a mutex called the Global Interpreter Lock or GIL, as we learned in the previous section.

There are times when the lock is released by the interpreter and we can achieve parallel execution of our concurrent code in Python.

Examples of when the lock is released include:

- When a thread is performing blocking IO.

- When a thread is executing C code and explicitly releases the lock.

There are also ways of avoiding the lock entirely, such has:

- Using a third-party Python interpreter to execute Python code.

Let’s take a look at each of these use cases in turn.

Use Threads for Blocking IO

You should use threads for IO-bound tasks.

An IO-bound task is a type of task that involves reading from or writing to a device, file, or socket connection.

The operations involve input and output (IO), and the speed of these operations is bound by the device, hard drive, or network connection. This is why these tasks are referred to as IO-bound.

CPUs are really fast. Modern CPUs, like a 4GHz CPU, can execute 4 billion instructions per second, and you likely have more than one CPU core in your system.

Doing IO is very slow compared to the speed of CPUs.

Interacting with devices, reading and writing files and socket connections involves calling instructions in your operating system (the kernel), which will wait for the operation to complete. If this operation is the main focus for your CPU, such as executing in the main thread of your Python program, then your CPU is going to wait many milliseconds or even many seconds doing nothing.

That is potentially billions of operations prevented from executing.

A thread performing an IO operation will block for the duration of the operation. While blocked, this signals to the operating system that a thread can be suspended and another thread can execute, called a context switch.

Additionally, the Python interpreter will release the GIL when performing blocking IO operations, allowing other threads within the Python process to execute.

Therefore, blocking IO provides an excellent use case for using threads in Python.

Examples of blocking IO operations include:

- Reading or writing a file from the hard drive.

- Reading or writing to standard output, input or error (stdin, stdout, stderr).

- Printing a document.

- Reading or writing bytes on a socket connection with a server.

- Downloading or uploading a file.

- Querying a server.

- Querying a database.

- Taking a photo or recording a video.

- And so much more.

Use Threads External C Code (that releases the GIL)

We may make function calls that themselves call down into a third-party C library.

Often these function calls will release the GIL as the C library being called will not interact with the Python interpreter.

This provides an opportunity for other threads in the Python process to run.

For example, when using the “hash” module in the Python standard library, the GIL is released when hashing the data via the hash.update() function.

The Python GIL is released to allow other threads to run while hash updates on data larger than 2047 bytes is taking place when using hash algorithms supplied by OpenSSL.

— HASHLIB — SECURE HASHES AND MESSAGE DIGESTS

Another example is the NumPy library for managing arrays of data which will release the GIL when performing functions on arrays.

The exceptions are few but important: while a thread is waiting for IO (for you to type something, say, or for something to come in the network) python releases the GIL so other threads can run. And, more importantly for us, while numpy is doing an array operation, python also releases the GIL.

— WRITE MULTITHREADED OR MULTIPROCESS CODE, SCIPY COOKBOOK.

Use Threads With (Some) Third-Party Python Interpreters

Another important consideration is the use of third-party Python interpreters.

There are alternate commercial and open source Python interpreters that you can acquire and use to execute your Python code.

Some of these interpreters may implement a GIL and release it more or less than CPython. Other interpreters remove the GIL entirely and allow multiple Python concurrent threads to execute in parallel.

Examples of third-party Python interpreters without a GIL include:

- Jython: an open source Python interpreter written in Java.

- IronPython: an open source Python interpreter written in .NET.

… Jython does not have the straightjacket of the GIL. This is because all Python threads are mapped to Java threads and use standard Java garbage collection support (the main reason for the GIL in CPython is because of the reference counting GC system). The important ramification here is that you can use threads for compute-intensive tasks that are written in Python.

— NO GLOBAL INTERPRETER LOCK, DEFINITIVE GUIDE TO JYTHON.

Next, let’s take a closer look at thread blocking function calls.

Thread Blocking Calls

A blocking call is a function call that does not return until it is complete.

All normal functions are blocking calls. No big deal.

In concurrent programming, blocking calls have special meaning.

Blocking calls are calls to functions that will wait for a specific condition and signal to the operating system that nothing interesting is going on while the thread is waiting.

The operating system may notice that a thread is making a blocking function call and decide to context switch to another thread.

You may recall that the operating system manages what threads should run and when to run them. It achieves this using a type of multitasking where a running thread is suspended and suspended thread is restored and continues running. This suspending and restoring of threads is called a context switch.

The operating system prefers to context switch away from blocked threads, allowing non-blocked threads to run.

This means if a thread makes a blocking function call, a call that waits, then it is likely to signal that the thread can be suspended and allow other threads to run.

Similarly, many function calls that we may traditionally think block may have non-blocking versions in modern non-blocking concurrency APIs, like asyncio.

There are three types of blocking function calls you need to consider in concurrent programming, they are:

- Blocking calls on concurrent primitives.

- Blocking calls for IO.

- Blocking calls to sleep.

Let’s take a closer look at each in turn.

Blocking Calls on Concurrency Primitives

There are many examples of blocking function calls in concurrent programming.

Common examples include:

- Waiting for a lock, e.g. calling acquire() on a threading.Lock.

- Waiting to be notified, e.g. calling wait() on a threading.Condition.

- Waiting for a thread to terminate, e.g. calling join() on a threading.Thread.

- Waiting for a semaphore, e.g. calling acquire() on a threading.Semaphore.

- Waiting for an event, e.g. calling wait() on a threading.Event.

- Waiting for a barrier, e.g. calling wait() on a threading.Barrier.

Blocking on concurrency primitives is a normal part of concurrency programming.

Blocking Calls for I/O

Conventionally, function calls that interact with IO are often blocking function calls.

That is, they are blocking in the same sense as blocking calls in the concurrency primitives.

The wait for the IO device to respond is another signal to the operating system that the thread can be context switched.

Common examples include:

- Hard disk drive: Reading, writing, appending, renaming, deleting, etc. files.

- Peripherals: mouse, keyboard, screen, printer, serial, camera, etc.

- Internet: Downloading and uploading files, getting a webpage, querying RSS, etc.

- Database: Select, update, delete, etc. SQL queries.

- Email: Send mail, receive mail, query inbox, etc.

And many more examples, mostly related to sockets.

Performing IO with devices is typically very slow compared to the CPU.

The CPU can perform orders of magnitude more instructions compared to reading or writing some bytes to a file or socket.

The IO with devices is coordinated by the operating system and the device. This means the operating system can gather or send some bytes from or to the device then context switch back to the blocking thread when needed, allowing the function call to progress.

As such, you will often see comments about blocking calls when working with IO.

Blocking Calls to Sleep

The sleep() function is a capability provided by the underlying operating system that we can make use of within our program.

It is a blocking function call that pauses the thread to block for a fixed time in seconds.

This can be achieved via a call to time.sleep().

For example:

|

1 2 3 |

... # sleep for 5 seconds time.sleep(5) |

It is a blocking call in the same sense as concurrency primitives and blocking IO function calls. It signals to the operating system that the thread is waiting and is a good candidate for a context switch.

Sleeps are often used when timing is important in an application.

In concurrent programming, adding a sleep can be a useful way to simulate computational effort by a thread for a fixed interval.

We often use sleeps in worked examples when demonstrating concurrency programming, but adding sleeps to code can also aid in unit testing and debugging concurrency failure conditions, such as race conditions by forcing mistiming of events within a dynamic application.

You can learn more about blocking calls in the tutorial:

Next, let’s take a look at thread-local data storage.

Thread-Local Data

Threads can store local data via an instance of the threading.local class.

First, an instance of the local class must be created.

|

1 2 3 |

... # create a local instance local = threading.local() |

Then data can be stored on the local instance with properties of any arbitrary name.

For example:

|

1 2 3 |

... # store some data local.custom = 33 |

Importantly, other threads can use the same property names on the local but the values will be limited to each thread.

This is like a namespace limited to each thread and is called “thread-local data”. It means that threads cannot access or read the local data of other threads.

Importantly, each thread must hang on to the “local” instance in order to access the stored data.

We can demonstrate this with an example.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

# SuperFastPython.com # example of thread local storage from time import sleep import threading # custom target function def task(value): # create a local storage local = threading.local() # store data local.value = value # block for a moment sleep(value) # retrieve value print(f'Stored value: {local.value}') # create and start a thread threading.Thread(target=task, args=(1,)).start() # create and start another thread threading.Thread(target=task, args=(2,)).start() |

Running the example first creates one thread that stores the value “1” against the property “value”, then blocks for one second.

The second thread runs and stores the value “2” against the property “value”, then blocks for two seconds.

Each thread then reports their “value” from thread-local storage, matching the value that was stored within each thread-local context.

If the threads used the same variable for storage, then the both threads would report “2” for the “value” property, which is not the case because the “value” property is unique to each thread.

|

1 2 |

Stored value: 1 Stored value: 2 |

You can learn more about thread local storage in this tutorial:

Now that we are familiar with thread-local storage, let’s look at a mutual exclusion lock.

Thread Mutex Lock

A mutex lock is used to protect critical sections of code from concurrent execution.

You can use a mutual exclusion (mutex) lock in Python via the threading.Lock class.

What is a Mutual Exclusion Lock

A mutual exclusion lock or mutex lock is a synchronization primitive intended to prevent a race condition.

A race condition is a concurrency failure case when two threads run the same code and access or update the same resource (e.g. data variables, stream, etc.) leaving the resource in an unknown and inconsistent state.

Race conditions often result in unexpected behavior of a program and/or corrupt data.

These sensitive parts of code that can be executed by multiple threads concurrently and may result in race conditions are called critical sections. A critical section may refer to a single block of code, but it also refers to multiple accesses to the same data variable or resource from multiple functions.

A mutex lock can be used to ensure that only one thread at a time executes a critical section of code at a time, while all other threads trying to execute the same code must wait until the currently executing thread is finished with the critical section and releases the lock.

Each thread must attempt to acquire the lock at the beginning of the critical section. If the lock has not been obtained, then a thread will acquire it and other threads must wait until the thread that acquired the lock releases it.

If the lock has not been acquired, we might refer to it as being in the “unlocked” state. Whereas if the lock has been acquired, we might refer to it as being in the “locked” state.

- Unlocked: The lock has not been acquired and can be acquired by the next thread that makes an attempt.

- Locked: The lock has been acquired by one thread and any thread that makes an attempt to acquire it must wait until it is released.

Locks are created in the unlocked state.

Now that we know what a mutex lock is, let’s take a look at how we can use it in Python.

How to Use a Mutex Lock

Python provides a mutual exclusion lock via the threading.Lock class.

An instance of the lock can be created and then acquired by threads before accessing a critical section, and released after the critical section.

For example:

|

1 2 3 4 5 6 7 8 |

... # create a lock lock = Lock() # acquire the lock lock.acquire() # ... # release the lock lock.release() |

Only one thread can have the lock at any time. If a thread does not release an acquired lock, it cannot be acquired again.

The thread attempting to acquire the lock will block until the lock is acquired, such as if another thread currently holds the lock then releases it.

We can attempt to acquire the lock without blocking by setting the “blocking” argument to False. If the lock cannot be acquired, a value of False is returned.

|

1 2 3 |

... # acquire the lock without blocking lock.acquire(blocking=false) |

We can also attempt to acquire the lock with a timeout, that will wait the set number of seconds to acquire the lock before giving up. If the lock cannot be acquired, a value of False is returned.

|

1 2 3 |

... # acquire the lock with a timeout lock.acquire(timeout=10) |

We can also use the lock via the context manager protocol via the with statement, allowing the critical section to be a block within the usage of the lock.

For example:

|

1 2 3 4 5 6 |

... # create a lock lock = Lock() # acquire the lock with lock: # ... |

This is the preferred usage as it makes it clear where the protected code begins and ends, and ensures that the lock is always released, even if there is an exception or error within the critical section.

We can also check if the lock is currently acquired by a thread via the locked() function.

|

1 2 3 4 |

... # check if a lock is currently acquired if lock.locked(): # ... |

Next, let’s look at a worked example of using the threading.Lock class.

Example of Using a Mutex Lock

We can develop an example to demonstrate how to use the mutex lock.

First, we can define a target task function that takes a lock as an argument and uses the lock to protect a critical section. In this case, the critical section involves reporting a message and blocking for a fraction of a section.

|

1 2 3 4 5 6 |

# work function def task(lock, identifier, value): # acquire the lock with lock: print(f'>thread {identifier} got the lock, sleeping for {value}') sleep(value) |

We can then create one instance of the threading.Lock shared among the threads, and pass it to each thread that we intend to execute the target task function.

|

1 2 3 4 5 6 7 |

... # create a shared lock lock = Lock() # start a few threads that attempt to execute the same critical section for i in range(10): # start a thread Thread(target=task, args=(lock, i, random())).start() |

Tying this together, the complete example of using a lock is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

# SuperFastPython.com # example of a mutual exclusion (mutex) lock from time import sleep from random import random from threading import Thread from threading import Lock # work function def task(lock, identifier, value): # acquire the lock with lock: print(f'>thread {identifier} got the lock, sleeping for {value}') sleep(value) # create a shared lock lock = Lock() # start a few threads that attempt to execute the same critical section for i in range(10): # start a thread Thread(target=task, args=(lock, i, random())).start() # wait for all threads to finish... |

Running the example starts ten threads that all execute a custom target function.

Each thread attempts to acquire the lock, and once they do, they report a message including their id and how long they will sleep before releasing the lock.

Your specific results may vary given the use of random numbers.

|

1 2 3 4 5 6 7 8 9 10 |

>thread 0 got the lock, sleeping for 0.8859193801237439 >thread 1 got the lock, sleeping for 0.02868415293867832 >thread 2 got the lock, sleeping for 0.04469783674319383 >thread 3 got the lock, sleeping for 0.20456291750962474 >thread 4 got the lock, sleeping for 0.3689208984892195 >thread 5 got the lock, sleeping for 0.21105944750222927 >thread 6 got the lock, sleeping for 0.052093068060339864 >thread 7 got the lock, sleeping for 0.871251970586552 >thread 8 got the lock, sleeping for 0.932718580790764 >thread 9 got the lock, sleeping for 0.9514093969897454 |

You can learn more about thread mutex locks in this tutorial:

Now that we are familiar with the lock, let’s take a look at the reentrant lock.

Thread Reentrant Lock

A reentrant lock is a lock that can be acquired more than once by the same thread.

You can use reentrant locks in Python via the threading.RLock class.

What is a Reentrant Lock

A reentrant mutual exclusion lock, “reentrant mutex” or “reentrant lock” for short, is like a mutex lock except it allows a thread to acquire the lock more than once.

A reentrant lock is a synchronization primitive that may be acquired multiple times by the same thread. […] In the locked state, some thread owns the lock; in the unlocked state, no thread owns it.

— RLOCK OBJECTS, THREADING – THREAD-BASED PARALLELISM.

A thread may need to acquire the same lock more than once for many reasons.

We can imagine critical sections spread across a number of functions, each protected by the same lock. A thread may call across these functions in the course of normal execution and may call into one critical section from another critical section.

A limitation of a (non-reentrant) mutex lock is that if a thread has acquired the lock that it cannot acquire it again. In fact, this situation will result in a deadlock as it will wait forever for the lock to be released so that it can be acquired, but it holds the lock and will not release it.

A reentrant lock will allow a thread to acquire the same lock again if it has already acquired it. This allows the thread to execute critical sections from within critical sections, as long as they are protected by the same reentrant lock.

Each time a thread acquires the lock it must also release it, meaning that there are recursive levels of acquire and release for the owning thread. As such, this type of lock is sometimes called a “recursive mutex lock“.

Now that we are familiar with the reentrant lock, let’s take a closer look at the difference between a lock and a reentrant lock in Python.

How to Use the Reentrant Lock

Python provides a lock via the threading.RLock class.

An instance of the RLock can be created and then acquired by threads before accessing a critical section, and released after the critical section.

For example:

|

1 2 3 4 5 6 7 8 |

... # create a reentrant lock lock = RLock() # acquire the lock lock.acquire() # ... # release the lock lock.release() |

The thread attempting to acquire the lock will block until the lock is acquired, such as if another thread currently holds the lock (once or more than once) then releases it.

We can attempt to acquire the lock without blocking by setting the “blocking” argument to False. If the lock cannot be acquired, a value of False is returned.

|

1 2 3 |

... # acquire the lock without blocking lock.acquire(blocking=false) |

We can also attempt to acquire the lock with a timeout, that will wait the set number of seconds to acquire the lock before giving up. If the lock cannot be acquired, a value of False is returned.

|

1 2 3 |

... # acquire the lock with a timeout lock.acquire(timeout=10) |

We can also use the reentrant lock via the context manager protocol via the with statement, allowing the critical section to be a block within the usage of the lock.

For example:

|

1 2 3 4 5 6 |

... # create a reentrant lock lock = RLock() # acquire the lock with lock: # ... |

Next, let’s look at a worked example of using the threading.RLock class.

Example of Using a Reentrant Lock

We can develop an example to demonstrate how to use the reentrant lock.

First, we can define a function to report that a thread is done that protects the reporting process with a lock.

|

1 2 3 4 5 |

# reporting function def report(lock, identifier): # acquire the lock with lock: print(f'>thread {identifier} done') |

Next, we can define a task function that reports a message, blocks for a moment, then calls the reporting function. All of the work is protected with the lock.

|

1 2 3 4 5 6 7 8 |

# work function def task(lock, identifier, value): # acquire the lock with lock: print(f'>thread {identifier} sleeping for {value}') sleep(value) # report report(lock, identifier) |

Given that the target task function is protected with a lock and calls the reporting function that is also protected by the same lock, we can use a reentrant lock so that if a thread acquires the lock in task(), it will be able to re-enter the lock in the report() function.

Finally, we can create the reentrant lock, then startup threads that execute the target task function.

|

1 2 3 4 5 6 7 8 |

... # create a shared reentrant lock lock = RLock() # start a few threads that attempt to execute the same critical section for i in range(10): # start a thread Thread(target=task, args=(lock, i, random())).start() # wait for all threads to finish... |

Tying this together, the complete example of demonstrating a reentrant lock is listed below.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

# SuperFastPython.com # example of a reentrant lock from time import sleep from random import random from threading import Thread from threading import RLock # reporting function def report(lock, identifier): # acquire the lock with lock: print(f'>thread {identifier} done') # work function def task(lock, identifier, value): # acquire the lock with lock: print(f'>thread {identifier} sleeping for {value}') sleep(value) # report report(lock, identifier) # create a shared reentrant lock lock = RLock() # start a few threads that attempt to execute the same critical section for i in range(10): # start a thread Thread(target=task, args=(lock, i, random())).start() # wait for all threads to finish... |

Running the example starts up ten threads that execute the target task function.

Only one thread can acquire the lock at a time, and then once acquired, blocks and then reenters the same lock again to report the done message.

If a non-reentrant lock, e.g. a threading.Lock was used instead, then the thread would block forever waiting for the lock to become available, which it can’t because the thread already holds the lock.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

>thread 0 sleeping for 0.5784837315288808 >thread 0 done >thread 1 sleeping for 0.19407032646041522 >thread 1 done >thread 2 sleeping for 0.03612750793398978 >thread 2 done >thread 3 sleeping for 0.17964358883204423 >thread 3 done >thread 4 sleeping for 0.2800897627049981 >thread 4 done >thread 5 sleeping for 0.9314520504231987 >thread 5 done >thread 6 sleeping for 0.04446466830667195 >thread 6 done >thread 7 sleeping for 0.37852383245813737 >thread 7 done >thread 8 sleeping for 0.3529410350492209 >thread 8 done >thread 9 sleeping for 0.7780184882739216 >thread 9 done |

You can learn more about reentrant locks in this tutorial:

Now that we are familiar with the reentrant lock, let’s look at the condition.

Thread Condition

A condition allows threads to wait and be notified.

You can use a thread condition object in Python via the threading.Condition class.

What is a Threading Condition

In concurrency, a condition (also called a monitor) allows multiple threads to be notified about some result.

It combines both a mutual exclusion lock (mutex) and a conditional variable.

A mutex allow can be used to protect a critical section, but it cannot be used to alert other threads that a condition has changed or been met.

A condition can be acquired by a thread (like a mutex) after which it can wait to be notified by another thread that something has changed. While waiting, the thread is blocked and releases the lock for other threads to acquire.

Another thread can then acquire the condition, make a change, and notify one, all, or a subset of threads waiting on the condition that something has changed. The waiting thread can then wake-up (be scheduled by the operating system), re-acquire the condition (mutex), perform checks on any changed state and perform required actions.

This highlights that a condition makes use of a mutex internally (to acquire/release the condition), but it also offers additional features such as allowing threads to wait on the condition and to allow threads to notify other threads waiting on the condition.

Now that we know what a condition is, let’s look at how we might use it in Python.

How to Use a Condition Object

Python provides a condition via the threading.Condition class.

We can create a condition object and by default it will create a new reentrant mutex lock (threading.RLock class) by default which will be used internally.

|

1 2 3 |

... # create a new condition condition = threading.Condition() |

We may have a reentrant mutex or a non-reentrant mutex that we wish to reuse in the condition for some good reason, in which case we can provide it to the constructor.

I don’t recommend this unless you know your use case has this requirement. The chance of getting into trouble is high.

|

1 2 3 |

... # create a new condition with a custom lock condition = threading.Condition(lock=my_lock) |

In order for a thread to make use of the condition, it must acquire it and release it, like a mutex lock.

This can be achieved manually with the acquire() and release() functions.

For example, we can acquire the condition and then wait on the condition to be notified and finally release the condition as follows:

|

1 2 3 4 5 6 7 |

... # acquire the condition condition.acquire() # wait to be notified condition.wait() # release the condition condition.release() |

An alternative to calling the acquire() and release() functions directly is to use the context manager, which will perform the acquire/release automatically for us, for example:

|

1 2 3 4 5 |

... # acquire the condition with condition: # wait to be notified condition.wait() |

We also must acquire the condition in a thread if we wish to notify waiting threads. This too can be achieved directly with the acquire/release function calls or via the context manager.

We can notify a single waiting thread via the notify() function.

For example:

|

1 2 3 4 5 |

... # acquire the condition with condition: # notify a waiting thread condition.notify() |

The notified thread will stop-blocking as soon as it can re-acquire the mutex within the condition. This will be attempted automatically as part of its call to wait(), you do not need to do anything extra.

If there are more than one thread waiting on the condition, we will not know which thread will be notified.

We can notify all threads waiting on the condition via the notify_all() function.

|

1 2 3 4 5 |

... # acquire the condition with condition: # notify all threads waiting on the condition condition.notify_all() |

Now that we know how to use the threading.Condition class, let’s look at some worked examples.

Example of Wait and Notify With a Condition

We will explore using a threading.Condition to notify a waiting thread that something has happened.

We will use a new threading.Thread instance to prepare some data and notify a waiting thread, and in the main thread we will kick-off the new thread and use the condition to wait for the work to be completed.

First, we will define a target task function to execute in a new thread.

The function will take the condition object and a list in which it can deposit data. The function will block for a moment, add data to the list, then notify the waiting thread.

The complete target task function is listed below.

|

1 2 3 4 5 6 7 8 9 10 |

# target function to prepare some work def task(condition, work_list): # block for a moment sleep(1) # add data to the work list work_list.append(33) # notify a waiting thread that the work is done print('Thread sending notification...') with condition: condition.notify() |

In the main thread, first we can create the condition and a list in which we can place data.

|

1 2 3 4 5 |

... # create a condition condition = Condition() # prepare the work list work_list = list() |

Next, we can start a new thread calling our target task function and wait to be notified of the result.

|

1 2 3 4 5 6 7 8 9 |

... # wait to be notified that the data is ready print('Main thread waiting for data...') with condition: # start a new thread to perform some work worker = Thread(target=task, args=(condition, work_list)) worker.start() # wait to be notified condition.wait() |

Note, we must start the new thread after we have acquired the mutex lock in the condition in this example.

If we did not acquire the lock first, it is possible that there would be a race condition. Specifically, if we started the new thread before acquiring the condition and waiting in the main thread, then it is possible for the new thread to execute and notify before the main thread has had a chance to start waiting. In which case the main thread would wait forever to be notified.

Finally, we can report the data once it is available.

|

1 2 3 |

... # we know the data is ready print(f'Got data: {work_list}') |

Tying this together, the complete example is listed below.

|