Last Updated on September 12, 2022

The ThreadPoolExecutor class in Python can be used to download and analyze multiple Hacker News articles at the same time.

It can be interesting to analyze the factors of articles that battled their way to the front page of social news websites like Hacker News (news.ycombinator.com). One aspect to look at is the length of articles, such as the minimum, maximum, and average article length.

We can develop a program to download all articles linked on the home page of Hacker News and calculate word count statistics. This is slow. If each article takes 1 second to download, then the 30 articles on the homepage will take half a minute to download for analysis.

We can use a ThreadPoolExecutor to perform all downloads concurrently, shortening the entire process to roughly the time it takes to download the slowest article. This analysis can be extended, where a second thread pool can be used to download and extract links from each page of Hacker News articles.

In this tutorial, you will discover how to develop a program to analyze multiple articles listed on Hacker News concurrently using thread pools.

After completing this tutorial, you will know:

- How to develop a serial program to download and analyze the word count of articles listed on Hacker News.

- How to use the ThreadPoolExecutor in Python to create and manage pools of worker threads for IO-bound tasks.

- How to use ThreadPoolExecutor to download and analyze 30 to 90 Hacker News articles concurrently.

Let’s dive in.

Analysis of Hacker News Front-Page Articles

Hacker News is a technology news website, mostly for developers interested in highly technical topics and business.

Our goal is to summarize the word count or length of articles posted to Hacker News.

This might be interesting if we think that article length is one important factor for articles to make it onto the front page of Hacker News.

The website is available at:

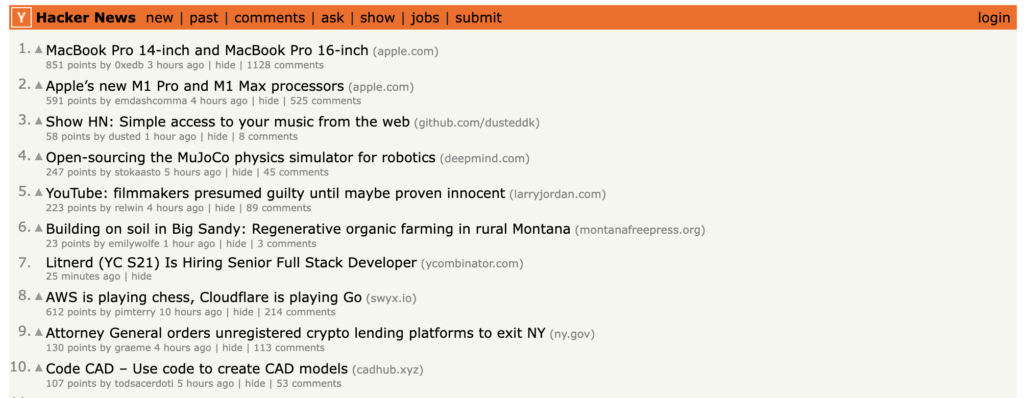

Below is a screenshot of what the site looks like at the time of writing (it has not changed noticeably in a decade).

We can divide this task up into a few distinct pieces.

- Download and Parse HTML Documents.

- Download and Extract Links From Hacker News.

- Download and Calculate Word Count of Articles.

- Report Results.

- Complete Example.

Download and Parse HTML Documents

The first step is to develop code to download and parse HTML documents.

We will need this to extract links from the Hacker News home page and to extract text from linked articles.

The HTML can then be parsed and we can extract all links to articles.

We can use the urlopen() function from the urllib.request module to open a connection to a webpage and download it.

The safe way to do this is with the context manager so that the connection is closed once we download the webpage into memory.

```python
...
# open a connection to the server
with urlopen(urlpath) as connection:
    # read the contents of the html doc
    data = connection.read()
```

We may be downloading all kinds of URLs; some might be unreachable or very slow to respond.

We can address this by setting a timeout for the connection of a few seconds — three in this case. We can also wrap the entire operation in a try...except in case we get a timeout or some other kind of error downloading the URL.

```python
...
try:
    # open a connection to the server
    with urlopen(urlpath, timeout=3) as connection:
        # read the contents of the html doc
        data = connection.read()
        # ...
except:
    # bad url, socket timeout, http forbidden, etc.
    pass
```
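The bare except above treats every failure the same way. As a minimal sketch, assuming we want to tell the common failure modes apart, the hypothetical helper fetch_bytes() below (not part of the tutorial's program) catches the exception types that urlopen() can raise. The data: URL in the usage lines is only there so the sketch runs without a network connection.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen

# hypothetical helper: download raw bytes, or report the problem and return None
def fetch_bytes(urlpath, timeout=3):
    try:
        with urlopen(urlpath, timeout=timeout) as connection:
            return connection.read()
    except HTTPError as e:
        # the server answered with an error status, e.g. 403 or 404
        print(f'HTTP error {e.code} for {urlpath}')
    except URLError as e:
        # dns failure, refused connection, unknown url scheme, etc.
        print(f'URL error for {urlpath}: {e.reason}')
    except OSError as e:
        # socket-level problems, such as a timeout while reading the body
        print(f'Socket error for {urlpath}: {e}')
    except ValueError as e:
        # the string is not a valid url at all
        print(f'Bad URL {urlpath}: {e}')
    return None

# data: URLs are handled locally by urllib, so no network is needed here
print(fetch_bytes('data:text/plain,hello'))
print(fetch_bytes('nosuchscheme://example'))
```

HTTPError is listed first because it is a subclass of URLError, which in turn is a subclass of OSError.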

Next, we can parse the HTML in the document.

I recommend the BeautifulSoup Python library anytime HTML documents need to be parsed.

If you’re new to BeautifulSoup, you can install it easily with your Python package manager, such as pip:

```shell
pip install beautifulsoup4
```

First, we must decode the raw bytes that were downloaded into text.

This can be achieved by calling the decode() function on the bytes object and specifying the text encoding, such as UTF-8.

```python
...
# decode the downloaded bytes as utf-8 text
html = content.decode('utf-8')
```
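Not every page is encoded as UTF-8, and decode() raises a UnicodeDecodeError when it meets bytes it cannot interpret. The sketch below, assuming we would rather keep a slightly damaged page than lose it entirely, uses the errors argument of decode() to substitute the Unicode replacement character. The helper name decode_html() is hypothetical.

```python
# hypothetical helper: decode downloaded bytes without failing on bad input
def decode_html(data, encoding='utf-8'):
    # errors='replace' swaps undecodable bytes for U+FFFD instead of raising
    return data.decode(encoding, errors='replace')

# valid utf-8 decodes as usual
print(decode_html('café'.encode('utf-8')))
# a stray latin-1 byte becomes the replacement character
print(decode_html(b'caf\xe9'))
```

The tutorial's own function takes the simpler route: a decoding error is caught by the surrounding try...except and the page is skipped.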

Next, we can parse the text of the HTML document using BeautifulSoup with the default parser.

It is a good idea to use a more sophisticated parser that works the same on all platforms, but in this case, we will use the default parser as it does not require you to install anything extra.

```python
...
# parse the document as best we can
soup = BeautifulSoup(html, 'html.parser')
```

We can tie all this together into a function named download_and_parse_url() that will take the URL as an argument, then attempt to download and return the parsed HTML document or None if there is an error.

```python
# download html and return parsed doc or None on error
def download_and_parse_url(urlpath):
    try:
        # open a connection to the server
        with urlopen(urlpath, timeout=3) as connection:
            # read the contents of the html doc
            data = connection.read()
            # decode the content as utf-8 text
            html = data.decode('utf-8')
            # parse the content as html
            return BeautifulSoup(html, features="html.parser")
    except:
        # bad url, socket timeout, http forbidden, etc.
        return None
```

We will use this function to download the Hacker News home page and to download articles listed on the homepage.

Download and Extract Links From Hacker News

Next, we need to extract all of the URLs from the Hacker News home page.

The Beautiful Soup library provides a find_all() function that we can use to locate all <a> HTML tags that contain links. We can then use the get() function on each tag to retrieve the content of the href="..." property.

For example:

```python
...
# download and parse the doc
soup = download_and_parse_url(url)
# get all <a> tags
atags = soup.find_all('a')
# get value of href property on all <a> tags
links = [tag.get('href') for tag in atags]
```

The problem is that we don’t want all links on the Hacker News webpage, only the article links.

We can inspect the source code of the Hacker News home page and check if article links have any special markup.

You can do this in your web browser by right clicking on the web page and selecting “View Page Source” or the equivalent for your browser.

At the time of writing, the layout appears to be an HTML table with article links marked up with CSS class "titlelink"; for example:

```html
<a href="https://www.apple.com/macbook-pro-14-and-16/" class="titlelink">MacBook Pro 14-inch and MacBook Pro 16-inch</a>
```

Therefore, we can select all <a> HTML tags that have this class property.

```python
...
# get all <a> tags with class='titlelink'
atags = soup.find_all('a', {'class': 'titlelink'})
```

Note: the specific CSS class and layout of the Hacker News homepage may change after the time of writing. This will break the example. If this happens, let me know in the comments and I will update the tutorial.
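Because the class name may change, it can help to keep it in one place as a parameter. The sketch below is a standard-library alternative, not the BeautifulSoup approach used in this tutorial: it uses html.parser.HTMLParser to collect the href of every <a> tag that carries a given CSS class. The class name LinkCollector and the sample markup are assumptions for illustration only.

```python
from html.parser import HTMLParser

# hypothetical collector: gather hrefs from <a> tags with a given css class
class LinkCollector(HTMLParser):
    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        attributes = dict(attrs)
        # the class attribute may hold several space-separated names
        classes = (attributes.get('class') or '').split()
        if self.css_class in classes and attributes.get('href'):
            self.links.append(attributes['href'])

# made-up markup in the style of the snippet above
sample = '''
<a href="https://example.com/story" class="titlelink">A story</a>
<a href="https://example.com/about">About</a>
'''
collector = LinkCollector('titlelink')
collector.feed(sample)
print(collector.links)
```

If the markup changes, only the string passed to LinkCollector() needs updating.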

We can tie this together into a function named get_hn_article_urls() that will download the Hacker News home page, parse the HTML, then extract all article links, which are returned as a list.

```python
# get all article urls listed on a hacker news html webpage
def get_hn_article_urls(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # it's possible we failed to download the page
    if soup is None:
        return []
    # get all <a> tags with class='titlelink'
    atags = soup.find_all('a', {'class': 'titlelink'})
    # get value of href property on all <a> tags
    return [tag.get('href') for tag in atags]
```

Let’s test this function to confirm it does what we expect.

The example below will extract and print all article links on the Hacker News homepage.

```python
# SuperFastPython.com
# get all articles listed on hacker news
from urllib.request import urlopen
from bs4 import BeautifulSoup

# download html and return parsed doc or None on error
def download_and_parse_url(urlpath):
    try:
        # open a connection to the server
        with urlopen(urlpath, timeout=3) as connection:
            # read the contents of the html doc
            data = connection.read()
            # decode the content as utf-8 text
            html = data.decode('utf-8')
            # parse the content as html
            return BeautifulSoup(html, features="html.parser")
    except:
        # bad url, socket timeout, http forbidden, etc.
        return None

# get all article urls listed on a hacker news html webpage
def get_hn_article_urls(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # it's possible we failed to download the page
    if soup is None:
        return []
    # get all <a> tags with class='titlelink'
    atags = soup.find_all('a', {'class': 'titlelink'})
    # get value of href property on all <a> tags
    return [tag.get('href') for tag in atags]

# entry point
URL = 'https://news.ycombinator.com/'
# extract the article links
links = get_hn_article_urls(URL)
# list all retrieved links
for link in links:
    print(link)
```

Running the example prints all of the links extracted from the Hacker News webpage.

By default, the home page displays 30 links (at the time of writing) so we should see 30 links printed out.

Below is the output from running the script at the time of writing.

```
https://www.apple.com/macbook-pro-14-and-16/
https://www.apple.com/newsroom/2021/10/introducing-m1-pro-and-m1-max-the-most-powerful-chips-apple-has-ever-built/
https://github.com/DusteDdk/dstream
https://montanafreepress.org/2021/10/14/building-on-soil-in-big-sandy-regenerative-organic-agriculture/
https://www.nature.com/articles/d41586-021-02812-z
https://deepmind.com/blog/announcements/mujoco
https://ambition.com/careers/
https://larryjordan.com/articles/youtube-filmmakers-presumed-guilty-until-maybe-proven-innocent/
https://www.swyx.io/cloudflare-go/
https://onlyrss.org/posts/my-rowing-tips-after-15-million-meters.html
https://www.cambridge.org/core/journals/episteme/article/abs/conspiracy-theories-and-religion-reframing-conspiracy-theories-as-bliks/5C6A020BDEEC2BFB3189120A15CFCB73
https://cadhub.xyz/u/caterpillar/moving_fish/ide
https://firecracker-microvm.github.io/
https://ag.ny.gov/press-release/2021/attorney-general-james-directs-unregistered-crypto-lending-platforms-cease
https://en.wikipedia.org/wiki/The_Toynbee_Convector
https://danluu.com/learn-what/
https://devlog.hexops.com/2021/mach-engine-the-future-of-graphics-with-zig
https://sdowney.org/index.php/2021/10/03/stdexecution-sender-receiver-and-the-continuation-monad/
https://sequoia-pgp.org/blog/2021/10/18/202110-sequoia-pgp-is-now-lgpl-2.0/
https://www.lesswrong.com/posts/MnFqyPLqbiKL8nSR7/my-experience-at-and-around-miri-and-cfar-inspired-by-zoe
https://github.com/OpenMW/openmw
https://dionhaefner.github.io/2021/09/bayesian-histograms-for-rare-event-classification/
https://adamtooze.com/2021/10/12/chartbook-45-of-scarface-the-nobel-the-double-life-of-mariel/
https://danilowoz.com/blog/spatial-keyboard-navigation
https://sixfold.medium.com/bringing-kafka-based-architecture-to-the-next-level-using-simple-postgresql-tables-415f1ff6076d
https://www.npopov.com/2021/10/13/How-opcache-works.html
https://github.com/aramperes/onetun
https://twitter.com/DuckDuckGo/status/1447559362906447874
https://www.sec.gov/Archives/edgar/data/1476840/000162828021020115/expensifys-1.htm
https://sethmlarson.dev/blog/2021-10-18/tests-arent-enough-case-study-after-adding-types-to-urllib3
```

So far, so good.

Next, we need to count the number of words in each linked article.

Download and Calculate Word Count of Articles

Each article linked on the Hacker News homepage must be first downloaded and parsed.

We can achieve this by calling the download_and_parse_url() function we developed above.

```python
...
# download and parse the doc
soup = download_and_parse_url(url)
```

We can then extract all text from the document by calling get_text(). This function will return a string with all text from the HTML document. It’s not perfect, but good enough for a rough approximation.

```python
...
# get all text (this is a pretty rough approximation)
txt = soup.get_text()
```

We can then split the text into “words,” really just tokens separated by white space, and count the total number of words.

```python
...
# split into tokens/words
words = txt.split()
# return number of tokens
count = len(words)
```
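To make the "rough approximation" concrete, here is a small sketch of how split() behaves on a made-up string. Runs of spaces, tabs, and newlines all count as a single separator, and punctuation stays attached to the neighboring word. The helper name count_words() is only for illustration.

```python
# count whitespace-separated tokens in a string
def count_words(txt):
    # split() with no arguments splits on any run of whitespace
    return len(txt.split())

# made-up sample with mixed whitespace and punctuation
sample = 'Hello,  world!\nThis is\ta test.'
print(sample.split())
print(count_words(sample))
# a string of only whitespace contains no tokens
print(count_words('   '))
```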

The url_word_count() function below ties this together taking a URL as an argument and returning a tuple of the word count and provided URL.

A word count of None is returned if the URL cannot be downloaded and parsed.

```python
# calculate the word count for a downloaded html webpage
def url_word_count(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # check for a failure to download the url
    if soup is None:
        return (None, url)
    # get all text (this is a pretty rough approximation)
    txt = soup.get_text()
    # split into tokens/words
    words = txt.split()
    # return number of tokens
    return (len(words), url)
```

We now know how to prepare all of the data required; all that is left is to perform an analysis.

Report Results

We have a list of links extracted from the Hacker News home page; now we can call url_word_count() from the previous section to get the word count for each link.

This data can be used as the basis for creating a simple report.

We will first filter out all word counts for links that could not be downloaded.

```python
...
# filter out any articles where a word count could not be calculated
results = [(count,url) for count,url in results if count is not None]
```

It would be nice to list each URL with its word count, ordered by word count in ascending order.

First, we can sort the list of word count and URL tuples.

```python
...
# sort results by word count
results.sort(key=lambda t: t[0])
```
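As a quick illustration of the sort key, the sketch below orders a few made-up (word count, URL) tuples both ways. The example.com URLs are placeholders, and sorted() is used instead of sort() only so that both orderings can be shown side by side.

```python
# made-up (word count, url) tuples in the same shape as the results list
results = [
    (1120, 'https://example.com/b'),
    (66, 'https://example.com/a'),
    (6577, 'https://example.com/c'),
]
# ascending order: shortest article first (what results.sort(key=...) gives)
ascending = sorted(results, key=lambda t: t[0])
# descending order: longest article first
descending = sorted(results, key=lambda t: t[0], reverse=True)
print([count for count, _ in ascending])
print([count for count, _ in descending])
```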

Then, print each entry in the list with some formatting so that everything lines up nicely.

```python
...
# report word count for all linked articles
for word_count, url in results:
    print(f'{word_count:6d} words\t{url}')
```

It would also be nice to report the total number of articles where a word count could be calculated compared to the total number of links on the Hacker News homepage.

```python
...
# summarize the entire process
print(f'\nResults from {len(results)} articles out of {len(links)} links.')
```

Finally, we can report the mean and standard deviation of the word counts.

We will further filter word counts so that these summary statistics only take into account articles with more than 100 words (e.g. tweets and YouTube videos might drop out).

```python
...
# trim word counts to docs with more than 100 words
counts = [count for count,_ in results if count > 100]
# calculate stats
mu, sigma = mean(counts), stdev(counts)
print(f'Mean Word Count: {mu:.1f} ({sigma:.1f}) words')
```
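One detail to be aware of: stdev() raises a StatisticsError when given fewer than two values, which could happen if most downloads fail. A minimal sketch of a guard follows; the helper name summarize_counts() and its min_words parameter are assumptions, not part of the tutorial's program.

```python
from statistics import mean, stdev

# hypothetical helper: return (mean, stdev) or None if there is too little data
def summarize_counts(counts, min_words=100):
    # keep only documents with more than min_words words
    kept = [count for count in counts if count > min_words]
    # stdev() needs at least two data points
    if len(kept) < 2:
        return None
    return mean(kept), stdev(kept)

# three of these four counts pass the filter
print(summarize_counts([66, 387, 415, 611]))
# only one count passes the filter, so there is nothing to summarize
print(summarize_counts([66, 387]))
```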

The report_results() function below implements this taking the list of extracted links and list of tuple word counts as arguments.

```python
# perform the analysis on the results and report
def report_results(links, results):
    # filter out any articles where a word count could not be calculated
    results = [(count,url) for count,url in results if count is not None]
    # sort results by word count
    results.sort(key=lambda t: t[0])
    # report word count for all linked articles
    for word_count, url in results:
        print(f'{word_count:6d} words\t{url}')
    # summarize the entire process
    print(f'\nResults from {len(results)} articles out of {len(links)} links.')
    # trim word counts to docs with more than 100 words
    counts = [count for count,_ in results if count > 100]
    # calculate stats
    mu, sigma = mean(counts), stdev(counts)
    print(f'Mean Word Count: {mu:.1f} ({sigma:.1f}) words')
```

That’s about it.

Complete Example

We can tie together all of these elements into a complete example.

We need a driver for the process. The analyze_hn_articles() function performs this role, taking the URL for the Hacker News homepage, extracting all links, using a list comprehension to apply our url_word_count() function to each link, and then reporting the results.

```python
# download a hacker news page and analyze word count on urls
def analyze_hn_articles(url):
    # extract all the story links from the page
    links = get_hn_article_urls(url)
    # calculate statistics for all pages serially
    results = [url_word_count(link) for link in links]
    # report results
    report_results(links, results)
```

We can then call this function from our entry point with the URL of the homepage.

```python
...
# entry point
URL = 'https://news.ycombinator.com/'
# calculate statistics
analyze_hn_articles(URL)
```

The complete example of analyzing all articles posted to the front page of Hacker News is listed below.

```python
# SuperFastPython.com
# word count for all links on the first page of hacker news
from statistics import mean
from statistics import stdev
from urllib.request import urlopen
from bs4 import BeautifulSoup

# download html and return parsed doc or None on error
def download_and_parse_url(urlpath):
    try:
        # open a connection to the server
        with urlopen(urlpath, timeout=3) as connection:
            # read the contents of the html doc
            data = connection.read()
            # decode the content as utf-8 text
            html = data.decode('utf-8')
            # parse the content as html
            return BeautifulSoup(html, features="html.parser")
    except:
        # bad url, socket timeout, http forbidden, etc.
        return None

# get all article urls listed on a hacker news html webpage
def get_hn_article_urls(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # it's possible we failed to download the page
    if soup is None:
        return []
    # get all <a> tags with class='titlelink'
    atags = soup.find_all('a', {'class': 'titlelink'})
    # get value of href property on all <a> tags
    return [tag.get('href') for tag in atags]

# calculate the word count for a downloaded html webpage
def url_word_count(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # check for a failure to download the url
    if soup is None:
        return (None, url)
    # get all text (this is a pretty rough approximation)
    txt = soup.get_text()
    # split into tokens/words
    words = txt.split()
    # return number of tokens
    return (len(words), url)

# perform the analysis on the results and report
def report_results(links, results):
    # filter out any articles where a word count could not be calculated
    results = [(count,url) for count,url in results if count is not None]
    # sort results by word count
    results.sort(key=lambda t: t[0])
    # report word count for all linked articles
    for word_count, url in results:
        print(f'{word_count:6d} words\t{url}')
    # summarize the entire process
    print(f'\nResults from {len(results)} articles out of {len(links)} links.')
    # trim word counts to docs with more than 100 words
    counts = [count for count,_ in results if count > 100]
    # calculate stats
    mu, sigma = mean(counts), stdev(counts)
    print(f'Mean Word Count: {mu:.1f} ({sigma:.1f}) words')

# download a hacker news page and analyze word count on urls
def analyze_hn_articles(url):
    # extract all the story links from the page
    links = get_hn_article_urls(url)
    # calculate statistics for all pages serially
    results = [url_word_count(link) for link in links]
    # report results
    report_results(links, results)

# entry point
URL = 'https://news.ycombinator.com/'
# calculate statistics
analyze_hn_articles(URL)
```

Running the example first downloads and parses the HTML for the Hacker News homepage.

Links are extracted, then each is downloaded, parsed, and the text is extracted and words are counted.

Finally, the results are reported first listing all article URLs sorted by word count in ascending order followed by a statistical summary of the article length.

A listing of the results at the time of writing is provided below.

```
    66 words  https://twitter.com/DuckDuckGo/status/1447559362906447874
   387 words  https://cadhub.xyz/u/caterpillar/moving_fish/ide
   415 words  https://sequoia-pgp.org/blog/2021/10/18/202110-sequoia-pgp-is-now-lgpl-2.0/
   611 words  https://ambition.com/careers/
   673 words  https://danilowoz.com/blog/spatial-keyboard-navigation
   688 words  https://github.com/DusteDdk/dstream
  1120 words  https://deepmind.com/blog/announcements/mujoco
  1188 words  https://ag.ny.gov/press-release/2021/attorney-general-james-directs-unregistered-crypto-lending-platforms-cease
  1245 words  https://github.com/OpenMW/openmw
  1291 words  https://firecracker-microvm.github.io/
  1408 words  https://github.com/aramperes/onetun
  1475 words  https://en.wikipedia.org/wiki/The_Toynbee_Convector
  1500 words  https://sdowney.org/index.php/2021/10/03/stdexecution-sender-receiver-and-the-continuation-monad/
  1639 words  https://www.nature.com/articles/d41586-021-02812-z
  1665 words  https://www.swyx.io/cloudflare-go/
  1752 words  https://onlyrss.org/posts/my-rowing-tips-after-15-million-meters.html
  2173 words  https://dionhaefner.github.io/2021/09/bayesian-histograms-for-rare-event-classification/
  2288 words  https://www.cambridge.org/core/journals/episteme/article/abs/conspiracy-theories-and-religion-reframing-conspiracy-theories-as-bliks/5C6A020BDEEC2BFB3189120A15CFCB73
  2882 words  https://www.npopov.com/2021/10/13/How-opcache-works.html
  3855 words  https://larryjordan.com/articles/youtube-filmmakers-presumed-guilty-until-maybe-proven-innocent/
  4121 words  https://www.apple.com/newsroom/2021/10/introducing-m1-pro-and-m1-max-the-most-powerful-chips-apple-has-ever-built/
  4138 words  https://danluu.com/learn-what/
  6577 words  https://www.apple.com/macbook-pro-14-and-16/
  6578 words  https://montanafreepress.org/2021/10/14/building-on-soil-in-big-sandy-regenerative-organic-agriculture/
 42974 words  https://www.lesswrong.com/posts/MnFqyPLqbiKL8nSR7/my-experience-at-and-around-miri-and-cfar-inspired-by-zoe

Results from 25 articles out of 30 links.
Mean Word Count: 3860.1 (8509.2) words
```

We can see that only 25 of the 30 articles could be downloaded and parsed.

The results show that the smallest word count was 66 words, whereas the largest was 42,974.

The mean article length was about 3,900 words with a standard deviation of about 8,500 words, skewed by one very large piece of content. I also suspect the distribution is not bell shaped (Gaussian); it is more likely a power law distribution.

The downside is that it takes a long time to run as each of the 30 articles must be downloaded and parsed sequentially. If it takes one second to download and process each article, then the whole process might take up to 30 seconds. In my case, it took about 20 seconds to complete.

We can speed up this process by downloading and processing the articles in parallel with the ThreadPoolExecutor.

First, let’s take a closer look at the ThreadPoolExecutor.


How to Create a Pool of Worker Threads With ThreadPoolExecutor

We can use the ThreadPoolExecutor class to speed up the download of articles listed on Hacker News.

The ThreadPoolExecutor class is provided as part of the concurrent.futures module for easily running concurrent tasks.

The ThreadPoolExecutor provides a pool of worker threads, which is different from the ProcessPoolExecutor that provides a pool of worker processes.

Generally, ThreadPoolExecutor should be used for concurrent IO-bound tasks, like downloading URLs, and the ProcessPoolExecutor should be used for concurrent CPU-bound tasks, like numerical calculations.

The ThreadPoolExecutor was designed to be easy and straightforward to use. It is like the “automatic mode” for Python threads. Using it involves four steps:

- Create the thread pool by calling ThreadPoolExecutor().

- Submit tasks by calling submit(), which returns a Future object, or map(), which returns an iterator over the results.

- Wait and get results as tasks complete by calling as_completed().

- Shut down the thread pool by calling shutdown().

Create the Thread Pool

First, a ThreadPoolExecutor instance must be created.

By default, it will create a pool with a number of worker threads equal to the number of logical CPU cores in your system plus four, capped at 32 (in Python 3.8 and later).

This is good for most purposes.

```python
...
# create a thread pool with the default number of worker threads
pool = ThreadPoolExecutor()
```
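For reference, the Python documentation gives the default since version 3.8 as min(32, os.cpu_count() + 4). The sketch below simply evaluates that formula on the current machine. It does not inspect the pool itself, the variable name is an assumption, and newer Python versions may count only the CPUs available to the process.

```python
import os

# documented default since python 3.8: cpu count plus four, capped at 32
# (os.cpu_count() can return None, so fall back to 1)
default_workers = min(32, (os.cpu_count() or 1) + 4)
print(f'Default worker threads on this machine: {default_workers}')
```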

You can run tens to hundreds of concurrent IO-bound threads per CPU, although perhaps not thousands or tens of thousands.

You can specify the number of threads to create in the pool via the max_workers argument; for example:

```python
...
# create a thread pool with 10 worker threads
pool = ThreadPoolExecutor(max_workers=10)
```

Submit Tasks to the Thread Pool

Once created, we can send tasks into the pool to be completed using the submit() function.

This function takes the function to call, followed by any arguments to pass to it, and returns a Future object.

The Future object is a promise to return the results from the task (if any) and provides a way to determine if a specific task has been completed or not.

```python
...
# submit a task
future = pool.submit(my_task, arg1, arg2, ...)
```

The return value of a function executed by the thread pool can be accessed via the result() function on the Future object. It will wait until the result is available, if needed, or return immediately if the result is already available.

For example:

```python
...
# get the result from a future
result = future.result()
```
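Putting submit() and result() together, a minimal runnable sketch might look like the following. The add() task is a made-up stand-in for real work.

```python
from concurrent.futures import ThreadPoolExecutor

# toy task standing in for real work
def add(a, b):
    return a + b

# create a small pool for the demonstration
pool = ThreadPoolExecutor(max_workers=2)
# submit() takes the function followed by its arguments
future = pool.submit(add, 2, 3)
# result() blocks until the task has finished, then returns its value
value = future.result()
print(value, future.done())
# release the worker threads
pool.shutdown()
```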

The ThreadPoolExecutor also provides a concurrent version of the built-in map() function.

It works in the same way as the built-in map() function, taking the name of a function and an iterable. It will then apply the function to each item in the iterable. It then returns an iterable over the results returned from the target function.

Unlike the built-in map() function, the function calls are performed concurrently rather than sequentially.

A common idiom when using the map() function is to iterate over the results returned. We can use the same idiom with the concurrent version of the map() function on the thread pool, for example:

```python
...
# perform all tasks in parallel
for result in pool.map(task, items):
    # ...
```
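One property worth seeing in action is that map() yields results in the order of the inputs, even when later tasks finish first. The sketch below uses a made-up nap() task that sleeps for a given time to simulate tasks of different durations.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# toy task: sleep for the given time, then return it
def nap(seconds):
    time.sleep(seconds)
    return seconds

# the first task is the slowest
delays = [0.3, 0.1, 0.2]
with ThreadPoolExecutor(max_workers=3) as pool:
    # results follow the order of the inputs, not the order of completion
    results = list(pool.map(nap, delays))
print(results)
```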

Get Results as Tasks Complete

The beauty of performing tasks concurrently is that we can get results as they become available rather than waiting for tasks to be completed in the order they were submitted.

The concurrent.futures module provides an as_completed() function that we can use to get results for tasks as they are completed, just like its name suggests.

We can call the function and provide it a list of Future objects created by calling submit(), and it will return the Future objects one at a time in the order that their tasks complete.

For example, we can use a list comprehension to submit the tasks and create the list of future objects:

```python
...
# submit all tasks into the thread pool and create a list of futures
futures = [pool.submit(my_func, task) for task in tasks]
```

Then get results for tasks as they complete in a for loop:

```python
...
# iterate over all submitted tasks and get results as they are available
for future in as_completed(futures):
    # get the result
    result = future.result()
    # do something with the result...
```
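The sketch below makes the ordering visible with a made-up nap() task: the tasks are submitted slowest first, yet as_completed() hands back the fastest one first. The delays are arbitrary values chosen to keep the gaps between tasks large.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

# toy task: sleep for the given time, then return it
def nap(seconds):
    time.sleep(seconds)
    return seconds

# submitted slowest first
delays = [0.5, 0.1, 0.3]
finished = []
with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [pool.submit(nap, delay) for delay in delays]
    # futures are yielded as their tasks finish
    for future in as_completed(futures):
        finished.append(future.result())
print(finished)
```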

Shut Down the Thread Pool

Once all tasks are completed, we can close down the thread pool, which will release each thread and any resources it may hold (e.g. the stack space).

```python
...
# shutdown the thread pool
pool.shutdown()
```

An easier way to use the thread pool is via the context manager (the with keyword), which ensures it is closed automatically once we are finished with it.

```python
...
# create a thread pool
with ThreadPoolExecutor(max_workers=10) as pool:
    # submit tasks
    futures = [pool.submit(my_func, task) for task in tasks]
    # get results as they are available
    for future in as_completed(futures):
        # get the result
        result = future.result()
        # do something with the result...
```

Now that we are familiar with ThreadPoolExecutor and how to use it, let’s look at how we can adapt our program for analyzing Hacker News article links to make use of it.

Analyze Multiple Articles Concurrently With the ThreadPoolExecutor

Our program for analyzing articles linked on Hacker News can be updated to download articles concurrently.

The slow part of the program is downloading each article listed on Hacker News. This is a type of task referred to as IO-blocking or IO-bound. It is a natural case for using concurrent Python threads.

Each task of downloading and analyzing a URL is independent, so we can use one worker thread per task, that is, one for each call to the url_word_count() function.

With a total of 30 links on the homepage, this would be 30 concurrent threads, which is quite modest for most systems for blocking IO tasks.

The change requires that the analyze_hn_articles() function be updated.

Here is the function again, unchanged from the previous section.

```python
# download a hacker news page and analyze word count on urls
def analyze_hn_articles(url):
    # extract all the story links from the page
    links = get_hn_article_urls(url)
    # calculate statistics for all pages serially
    results = [url_word_count(link) for link in links]
    # report results
    report_results(links, results)
```

The list comprehension for calling url_word_count() for each link can be replaced with a call to the ThreadPoolExecutor.

We can call the map() function on the ThreadPoolExecutor, which will apply the url_word_count() for each link in the list links.

The map() function is preferred in this case over submit() as we must wait for all tasks to complete before moving on and preparing the summary report. Recall, we need all word counts before we can create the report.

```python
...
# calculate statistics for all pages in parallel
with ThreadPoolExecutor(len(links)) as executor:
    results = executor.map(url_word_count, links)
```
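One edge case is worth noting: ThreadPoolExecutor raises a ValueError when max_workers is zero, which is what len(links) evaluates to if the home page could not be downloaded. A minimal sketch of a guard follows. The helper name map_concurrently() and the cap of 32 threads are assumptions, and str.upper stands in for url_word_count() so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor

# hypothetical helper: apply task to every item using one thread per item
def map_concurrently(task, items, limit=32):
    # nothing to do, and a pool with zero workers is not allowed
    if not items:
        return []
    # one thread per item, up to an assumed upper limit
    with ThreadPoolExecutor(min(len(items), limit)) as executor:
        return list(executor.map(task, items))

print(map_concurrently(str.upper, ['a', 'b', 'c']))
print(map_concurrently(str.upper, []))
```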

Alternatively, we could use the submit() function, which will return one Future object for each call to url_word_count(). We could then wait for all Future objects to complete, then retrieve the result from each in turn. This would be functionally the same, but require additional lines of code.
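For comparison, a sketch of the submit() approach might look like the following. It uses the wait() function from concurrent.futures to block until all tasks are done. The fake_word_count() function and the example.com links are stand-ins so the example runs without downloading anything.

```python
from concurrent.futures import ThreadPoolExecutor, wait

# stand-in for url_word_count(): returns a (count, url) tuple without downloading
def fake_word_count(url):
    return (len(url), url)

# placeholder links for illustration
links = ['https://example.com/a', 'https://example.com/bb']
with ThreadPoolExecutor(len(links)) as executor:
    # one future per link
    futures = [executor.submit(fake_word_count, link) for link in links]
    # block until every task has finished
    wait(futures)
    # retrieve each result in submission order
    results = [future.result() for future in futures]
print(results)
```

The result is the same list that map() would produce, at the cost of a few more lines.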

The updated version of the analyze_hn_articles() function that makes use of a ThreadPoolExecutor is listed below.

```python
# download a hacker news page and analyze word count on urls
def analyze_hn_articles(url):
    # extract all the story links from the page
    links = get_hn_article_urls(url)
    # calculate statistics for all pages in parallel
    with ThreadPoolExecutor(len(links)) as executor:
        results = executor.map(url_word_count, links)
    # report results
    report_results(links, results)
```
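To get a feel for the expected speed-up without touching the network, the sketch below simulates each download with a short sleep and times the serial and concurrent versions. The fake_download() function, the 0.1 second delay, and the example.com links are all assumptions for illustration; real download times will vary.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# stand-in for a slow download: block briefly, then return a fake word count
def fake_download(url):
    time.sleep(0.1)
    return (len(url), url)

# ten placeholder links
links = [f'https://example.com/{i}' for i in range(10)]

# serial version: one simulated download after another
start = time.perf_counter()
serial = [fake_download(link) for link in links]
serial_time = time.perf_counter() - start

# concurrent version: one worker thread per link
start = time.perf_counter()
with ThreadPoolExecutor(len(links)) as executor:
    threaded = list(executor.map(fake_download, links))
threaded_time = time.perf_counter() - start

print(f'serial: {serial_time:.2f}s, threaded: {threaded_time:.2f}s')
```

The serial loop should take about the sum of the delays, whereas the threaded version should take about as long as a single delay.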

Tying this together, the complete example is listed below.

```python
# SuperFastPython.com
# word count for all links on the first page of hacker news
from statistics import mean
from statistics import stdev
from urllib.request import urlopen
from concurrent.futures import ThreadPoolExecutor
from bs4 import BeautifulSoup

# download html and return parsed doc or None on error
def download_and_parse_url(urlpath):
    try:
        # open a connection to the server
        with urlopen(urlpath, timeout=3) as connection:
            # read the contents of the html doc
            data = connection.read()
            # decode the content as utf-8 text
            html = data.decode('utf-8')
            # parse the content as html
            return BeautifulSoup(html, features="html.parser")
    except:
        # bad url, socket timeout, http forbidden, etc.
        return None

# get all article urls listed on a hacker news html webpage
def get_hn_article_urls(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # it's possible we failed to download the page
    if soup is None:
        return []
    # get all <a> tags with class='titlelink'
    atags = soup.find_all('a', {'class': 'titlelink'})
    # get value of href property on all <a> tags
    return [tag.get('href') for tag in atags]

# calculate the word count for a downloaded html webpage
def url_word_count(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # check for a failure to download the url
    if soup is None:
        return (None, url)
    # get all text (this is a pretty rough approximation)
    txt = soup.get_text()
    # split into tokens/words
    words = txt.split()
    # return number of tokens
    return (len(words), url)

# perform the analysis on the results and report
def report_results(links, results):
    # filter out any articles where a word count could not be calculated
    results = [(count,url) for count,url in results if count is not None]
    # sort results by word count
    results.sort(key=lambda t: t[0])
    # report word count for all linked articles
    for word_count, url in results:
        print(f'{word_count:6d} words\t{url}')
    # summarize the entire process
    print(f'\nResults from {len(results)} articles out of {len(links)} links.')
    # trim word counts to docs with more than 100 words
    counts = [count for count,_ in results if count > 100]
    # calculate stats
    mu, sigma = mean(counts), stdev(counts)
    print(f'Mean Word Count: {mu:.1f} ({sigma:.1f}) words')

# download a hacker news page and analyze word count on urls
def analyze_hn_articles(url):
    # extract all the story links from the page
    links = get_hn_article_urls(url)
    # calculate statistics for all pages in parallel
    with ThreadPoolExecutor(len(links)) as executor:
        results = executor.map(url_word_count, links)
    # report results
    report_results(links, results)

# entry point
URL = 'https://news.ycombinator.com/'
# calculate statistics
analyze_hn_articles(URL)
```

Running the example creates a nearly identical report to the serial case in the previous section, as expected, since it runs the same code against the same websites.

```
    66 words https://twitter.com/DuckDuckGo/status/1447559362906447874
   387 words https://cadhub.xyz/u/caterpillar/moving_fish/ide
   415 words https://sequoia-pgp.org/blog/2021/10/18/202110-sequoia-pgp-is-now-lgpl-2.0/
   611 words https://ambition.com/careers/
   673 words https://danilowoz.com/blog/spatial-keyboard-navigation
   688 words https://github.com/DusteDdk/dstream
  1120 words https://deepmind.com/blog/announcements/mujoco
  1188 words https://ag.ny.gov/press-release/2021/attorney-general-james-directs-unregistered-crypto-lending-platforms-cease
  1245 words https://github.com/OpenMW/openmw
  1291 words https://firecracker-microvm.github.io/
  1408 words https://github.com/aramperes/onetun
  1475 words https://en.wikipedia.org/wiki/The_Toynbee_Convector
  1500 words https://sdowney.org/index.php/2021/10/03/stdexecution-sender-receiver-and-the-continuation-monad/
  1639 words https://www.nature.com/articles/d41586-021-02812-z
  1665 words https://www.swyx.io/cloudflare-go/
  1752 words https://onlyrss.org/posts/my-rowing-tips-after-15-million-meters.html
  2173 words https://dionhaefner.github.io/2021/09/bayesian-histograms-for-rare-event-classification/
  2288 words https://www.cambridge.org/core/journals/episteme/article/abs/conspiracy-theories-and-religion-reframing-conspiracy-theories-as-bliks/5C6A020BDEEC2BFB3189120A15CFCB73
  2882 words https://www.npopov.com/2021/10/13/How-opcache-works.html
  3855 words https://larryjordan.com/articles/youtube-filmmakers-presumed-guilty-until-maybe-proven-innocent/
  4121 words https://www.apple.com/newsroom/2021/10/introducing-m1-pro-and-m1-max-the-most-powerful-chips-apple-has-ever-built/
  4138 words https://danluu.com/learn-what/
  6577 words https://www.apple.com/macbook-pro-14-and-16/
  6578 words https://montanafreepress.org/2021/10/14/building-on-soil-in-big-sandy-regenerative-organic-agriculture/
 42974 words https://www.lesswrong.com/posts/MnFqyPLqbiKL8nSR7/my-experience-at-and-around-miri-and-cfar-inspired-by-zoe

Results from 25 articles out of 30 links.
Mean Word Count: 3860.1 (8509.2) words
```

The main difference is the execution speed. In this case, it has dropped from nearly 20 seconds to a little over 4 seconds. That is about a 5x speedup.
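If you want to measure the difference yourself, a minimal sketch is shown below. It does not touch the network: the `fake_download()` function is a hypothetical stand-in that sleeps for a fixed time, and the `timed()` helper and placeholder URLs are assumptions made for illustration, so the numbers it prints only show the shape of the speedup, not real download times.

```python
# sketch: time a serial loop against a thread pool using simulated downloads
from time import perf_counter, sleep
from concurrent.futures import ThreadPoolExecutor

# hypothetical stand-in for a blocking download (not part of the tutorial code)
def fake_download(url):
    sleep(0.1)
    return url

# run a callable and return (result, elapsed seconds)
def timed(func):
    start = perf_counter()
    result = func()
    return result, perf_counter() - start

# placeholder urls, assumed for illustration
urls = [f'https://example.com/{i}' for i in range(10)]

# serial: one simulated download after another
serial_results, serial_time = timed(lambda: [fake_download(u) for u in urls])

# concurrent: one worker thread per url
def run_concurrent():
    with ThreadPoolExecutor(len(urls)) as executor:
        return list(executor.map(fake_download, urls))

concurrent_results, concurrent_time = timed(run_concurrent)

print(f'Serial: {serial_time:.2f}s, Concurrent: {concurrent_time:.2f}s')
```

The same `timed()` wrapper could be placed around a call to analyze_hn_articles() to time the real program.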

How long did it take on your machine?

Let me know in the comments below.

Perhaps an analysis of only 30 articles is not enough. Maybe we could get better results with more linked articles.


Two Thread Pools to Analyze Multiple Pages of Articles

There are many ways we could extend the analysis to include more article links from Hacker News.

For example, we could gather data over multiple hours and summarize the results for all unique links.

Perhaps a simpler approach is to go beyond the front page of the site and gather links from pages 2 and 3 as well.

For example, we can access the first three pages of linked articles as follows:

- Page 1: https://news.ycombinator.com/news?p=1

- Page 2: https://news.ycombinator.com/news?p=2

- Page 3: https://news.ycombinator.com/news?p=3
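Because the page URLs differ only in the page number, they could also be generated rather than typed out. The sketch below assumes a hypothetical helper named `hn_page_urls()`; it is not part of the tutorial code.

```python
# sketch: generate the hacker news page urls from a page count
BASE_URL = 'https://news.ycombinator.com/news?p={}'

# build urls for pages 1..n_pages (hypothetical helper)
def hn_page_urls(n_pages):
    return [BASE_URL.format(page) for page in range(1, n_pages + 1)]

urls = hn_page_urls(3)
for url in urls:
    print(url)
```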

We can reuse all of the code from the previous section, but update it slightly to support multiple Hacker News pages from which to extract links.

Instead of calling get_hn_article_urls() once, we can call it once for each Hacker News page in our analysis.

Doing this serially might take one or two seconds per page, but we can also perform this task in parallel with a thread pool.

Each call to get_hn_article_urls() returns a list that we can add to a master list of all article links, which can then be processed by a second ThreadPoolExecutor later, as we did previously.

We could use the map() function to apply the get_hn_article_urls() function to each URL, but this would return an iterator over lists, which we would have to flatten.

For example:

```python
...
with ThreadPoolExecutor(len(urls)) as executor:
    # get a list of article links from each hacker news webpage
    links = executor.map(get_hn_article_urls, urls)
    # flatten list of lists of urls into list of urls
    links = [link for sublist in links for link in sublist]
```
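As an aside, the flattening step can also be written with `itertools.chain.from_iterable()` from the standard library. The sketch below uses a hypothetical `fake_get_urls()` function in place of get_hn_article_urls() so that it runs without a network connection; the page names are placeholders.

```python
# sketch: flatten the lists returned by map() with itertools.chain
from itertools import chain
from concurrent.futures import ThreadPoolExecutor

# hypothetical stand-in for get_hn_article_urls() that avoids the network
def fake_get_urls(page):
    return [f'{page}/article{i}' for i in range(2)]

# placeholder page names, assumed for illustration
pages = ['page1', 'page2', 'page3']
with ThreadPoolExecutor(len(pages)) as executor:
    # map() yields one list per page, in the same order as pages
    links = list(chain.from_iterable(executor.map(fake_get_urls, pages)))
print(links)
```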

It might be cleaner to use submit() to get a Future object for each task, then get the list result from each call and add it to our master list.

```python
...
# get a list of article links from each hacker news web page
links = []
with ThreadPoolExecutor(len(urls)) as executor:
    # submit each task on a new thread
    futures = [executor.submit(get_hn_article_urls, url) for url in urls]
    # get list of articles from each page
    for future in futures:
        links += future.result()
```

Functionally, they are the same, but the second case feels like a better fit.

The updated version of analyze_hn_articles() that makes use of two thread pools is listed below.

```python
# download a hacker news page and analyze word count on urls
def analyze_hn_articles(urls):
    # get a list of article links from each hacker news webpage
    links = []
    with ThreadPoolExecutor(len(urls)) as executor:
        # submit each task on a new thread
        futures = [executor.submit(get_hn_article_urls, url) for url in urls]
        # get list of articles from each page
        for future in futures:
            links += future.result()
    # calculate statistics for all pages in parallel
    with ThreadPoolExecutor(len(links)) as executor:
        results = executor.map(url_word_count, links)
    # report results
    report_results(links, results)
```

We can then call this function with a list of hacker news URLs.

```python
...
# entry point
URLS = [
    'https://news.ycombinator.com/news?p=1',
    'https://news.ycombinator.com/news?p=2',
    'https://news.ycombinator.com/news?p=3']
# calculate statistics
analyze_hn_articles(URLS)
```

This is one of many ways we could structure the solution.
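One detail worth keeping in mind with this structure: ThreadPoolExecutor raises a ValueError when it is given zero workers, which is what happens if no links could be extracted. A minimal sketch of a guard is shown below. The `map_in_threads()` helper and its cap of 100 workers are assumptions made for illustration, not part of the tutorial code.

```python
# sketch: apply a task with a thread pool while tolerating an empty task list
from concurrent.futures import ThreadPoolExecutor

# hypothetical helper; the default cap of 100 workers is an arbitrary assumption
def map_in_threads(task, items, max_workers=100):
    items = list(items)
    # ThreadPoolExecutor raises ValueError if max_workers is not positive
    if not items:
        return []
    # one worker per item, up to the cap
    n_workers = min(len(items), max_workers)
    with ThreadPoolExecutor(n_workers) as executor:
        return list(executor.map(task, items))

# an empty list of links no longer causes an error
print(map_in_threads(len, []))
# a non-empty list is processed as before
print(map_in_threads(len, ['a', 'bb', 'ccc']))
```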

Tying this together, the complete example of extracting article links from multiple Hacker News pages and performing a word count analysis on the results is listed below.

```python
# SuperFastPython.com
# word count for all links on the first few pages of hacker news
from statistics import mean
from statistics import stdev
from urllib.request import urlopen
from concurrent.futures import ThreadPoolExecutor
from bs4 import BeautifulSoup

# download html and return parsed doc or None on error
def download_and_parse_url(urlpath):
    try:
        # open a connection to the server
        with urlopen(urlpath, timeout=3) as connection:
            # read the contents of the html doc
            data = connection.read()
            # decode the content as utf-8 text
            html = data.decode('utf-8')
            # parse the content as html
            return BeautifulSoup(html, features="html.parser")
    except Exception:
        # bad url, socket timeout, http forbidden, etc.
        return None

# get all article urls listed on a hacker news html webpage
def get_hn_article_urls(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # it's possible we failed to download the page
    if soup is None:
        return []
    # get all <a> tags with class='titlelink'
    atags = soup.find_all('a', {'class': 'titlelink'})
    # get value of href property on all <a> tags
    return [tag.get('href') for tag in atags]

# calculate the word count for a downloaded html webpage
def url_word_count(url):
    # download and parse the doc
    soup = download_and_parse_url(url)
    # check for a failure to download the url
    if soup is None:
        return (None, url)
    # get all text (this is a pretty rough approximation)
    txt = soup.get_text()
    # split into tokens/words
    words = txt.split()
    # return number of tokens
    return (len(words), url)

# perform the analysis on the results and report
def report_results(links, results):
    # filter out any articles where a word count could not be calculated
    results = [(count,url) for count,url in results if count is not None]
    # sort results by word count
    results.sort(key=lambda t: t[0])
    # report word count for all linked articles
    for word_count, url in results:
        print(f'{word_count:6d} words\t{url}')
    # summarize the entire process
    print(f'\nResults from {len(results)} articles out of {len(links)} links.')
    # trim word counts to docs with more than 100 words
    counts = [count for count,_ in results if count > 100]
    # calculate stats
    mu, sigma = mean(counts), stdev(counts)
    print(f'Mean Word Count: {mu:.1f} ({sigma:.1f}) words')

# download a hacker news page and analyze word count on urls
def analyze_hn_articles(urls):
    # get a list of article links from each hacker news web page
    links = []
    with ThreadPoolExecutor(len(urls)) as executor:
        # submit each task on a new thread
        futures = [executor.submit(get_hn_article_urls, url) for url in urls]
        # get list of articles from each page
        for future in futures:
            links += future.result()
    # calculate statistics for all pages in parallel
    with ThreadPoolExecutor(len(links)) as executor:
        results = executor.map(url_word_count, links)
    # report results
    report_results(links, results)

# entry point
URLS = [
    'https://news.ycombinator.com/news?p=1',
    'https://news.ycombinator.com/news?p=2',
    'https://news.ycombinator.com/news?p=3']
# calculate statistics
analyze_hn_articles(URLS)
```

Running the example produces a report similar to those in the previous sections, with about three times as many links listed because we retrieved article links from the first three pages of Hacker News.

```
     4 words https://sleepy-meadow-72878.herokuapp.com/
    17 words https://www.youtube.com/watch?v=exM1uajp--A
    66 words https://twitter.com/DuckDuckGo/status/1447559362906447874
    66 words https://twitter.com/stevesi/status/1449443506783543297
    66 words https://twitter.com/marcan42/status/1450163929993269249
    66 words https://twitter.com/TwitterEng/status/1450179475426066433
   245 words https://xkcd.com/927/
   278 words https://github.blog/changelog/2021-09-30-deprecation-notice-recover-accounts-elsewhere/
   279 words http://jsoftware.com/pipermail/programming/2021-October/059091.html
   288 words https://l0phtcrack.gitlab.io/
   317 words https://bztsrc.gitlab.io/usbimager/
   370 words https://seashells.io/
   387 words https://cadhub.xyz/u/caterpillar/moving_fish/ide
   415 words https://sequoia-pgp.org/blog/2021/10/18/202110-sequoia-pgp-is-now-lgpl-2.0/
   467 words https://neverworkintheory.org/2021/10/18/bad-practices-in-continuous-integration.html
   498 words https://www.win.tue.nl/~vanwijk/myriahedral/
   512 words https://raumet.com/framework
   574 words https://www.tomshardware.com/news/amd-memory-encryption-disabled-in-linux
   611 words https://ambition.com/careers/
   673 words https://danilowoz.com/blog/spatial-keyboard-navigation
   673 words https://www.theverge.com/2021/10/18/22733119/apple-new-macbook-pro-magsafe-back
   679 words https://www.infoworld.com/article/3637073/python-stands-to-lose-its-gil-and-gain-a-lot-of-speed.html
   688 words https://github.com/DusteDdk/dstream
   690 words https://github.com/SimonBrazell/privacy-redirect
   697 words https://www.bbc.com/news/technology-58928706
   700 words https://news.cornell.edu/stories/2021/09/dislike-button-would-improve-spotifys-recommendations
   745 words https://www.nsf.gov/discoveries/disc_summ.jsp?cntn_id=303673
   830 words https://www.cnbc.com/2021/10/18/the-wealthiest-10percent-of-americans-own-a-record-89percent-of-all-us-stocks.html
   918 words https://www.ruetir.com/2021/10/18/airbnb-removes-more-than-three-quarters-of-advertisements-in-amsterdam-after-registration-obligation/
  1025 words https://www.bbc.com/news/business-58961836
  1050 words https://thereader.mitpress.mit.edu/before-pong-there-was-computer-space/
  1099 words https://www.theguardian.com/uk-news/2021/oct/18/pm-urged-to-enact-davids-law-against-social-media-abuse-after-amesss-death
  1100 words https://en.wikipedia.org/wiki/CityEl
  1106 words https://quuxplusone.github.io/blog/2021/10/17/equals-delete-means/
  1110 words https://hai.stanford.edu/news/stanford-researchers-build-400-self-navigating-smart-cane
  1120 words https://deepmind.com/blog/announcements/mujoco
  1188 words https://ag.ny.gov/press-release/2021/attorney-general-james-directs-unregistered-crypto-lending-platforms-cease
  1192 words http://manifold.systems/
  1200 words https://www.computerworld.com/article/3636788/windows-11-microsofts-pointless-update.html
  1245 words https://github.com/OpenMW/openmw
  1291 words https://firecracker-microvm.github.io/
  1330 words https://www.discovermagazine.com/mind/how-fonts-affect-learning-and-memory
  1408 words https://github.com/aramperes/onetun
  1475 words https://en.wikipedia.org/wiki/The_Toynbee_Convector
  1500 words https://sdowney.org/index.php/2021/10/03/stdexecution-sender-receiver-and-the-continuation-monad/
  1513 words https://prospect.org/economy/powell-sold-more-than-million-dollars-of-stock-as-market-was-tanking/
  1556 words https://huggingface.co/bigscience/T0pp
  1639 words https://www.nature.com/articles/d41586-021-02812-z
  1653 words http://kvark.github.io/linux/framework/2021/10/17/framework-nixos.html
  1665 words https://www.swyx.io/cloudflare-go/
  1709 words https://prideout.net/blog/undo_system/
  1752 words https://onlyrss.org/posts/my-rowing-tips-after-15-million-meters.html
  2000 words https://foobarbecue.github.io/surfsonar/
  2064 words https://quillette.com/2021/10/17/my-father-teacher/
  2082 words https://sethmlarson.dev/blog/2021-10-18/tests-arent-enough-case-study-after-adding-types-to-urllib3
  2173 words https://dionhaefner.github.io/2021/09/bayesian-histograms-for-rare-event-classification/
  2224 words https://www.sfgate.com/renotahoe/article/Lake-Tahoe-drought-water-rim-algae-16533020.php
  2288 words https://www.cambridge.org/core/journals/episteme/article/abs/conspiracy-theories-and-religion-reframing-conspiracy-theories-as-bliks/5C6A020BDEEC2BFB3189120A15CFCB73
  2757 words https://amd-now.com/amd-laptops-finally-reach-the-4k-screen-barrier-with-the-lenovo-thinkpad-t14s-gen-2-and-thinkpad-p14-gen-2/
  2882 words https://www.npopov.com/2021/10/13/How-opcache-works.html
  3164 words https://ipfs.io/ipfs/QmNhFJjGcMPqpuYfxL62VVB9528NXqDNMFXiqN5bgFYiZ1/its-time-for-the-permanent-web.html
  3432 words https://www.apple.com/newsroom/2021/10/introducing-the-next-generation-of-airpods/
  3670 words https://www.hakaimagazine.com/features/scooping-plastic-out-of-the-ocean-is-a-losing-game/
  3855 words https://larryjordan.com/articles/youtube-filmmakers-presumed-guilty-until-maybe-proven-innocent/
  4121 words https://www.apple.com/newsroom/2021/10/introducing-m1-pro-and-m1-max-the-most-powerful-chips-apple-has-ever-built/
  4138 words https://danluu.com/learn-what/
  4233 words https://scribe.rip/p/what-every-software-engineer-should-know-about-search-27d1df99f80d
  4668 words https://www.wired.co.uk/article/cornwall-lithium
  6577 words https://www.apple.com/macbook-pro-14-and-16/
  6578 words https://montanafreepress.org/2021/10/14/building-on-soil-in-big-sandy-regenerative-organic-agriculture/
 16372 words https://vorpus.org/blog/some-thoughts-on-asynchronous-api-design-in-a-post-asyncawait-world/
 28380 words https://www.doaks.org/resources/textiles/essays/evangelatou
 42974 words https://www.lesswrong.com/posts/MnFqyPLqbiKL8nSR7/my-experience-at-and-around-miri-and-cfar-inspired-by-zoe

Results from 73 articles out of 90 links.
Mean Word Count: 2896.9 (6351.3) words
```

The mean word count is lower at about 2,900, although there is a large post on “lesswrong.com” with nearly 43,000 words that is skewing the results.
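One way to see how much a single outlier pulls the mean is to compare it with the median, which is less sensitive to extreme values. The sketch below uses only a handful of word counts copied from the report above (including the 42,974-word post), so it illustrates the effect rather than reproducing the full statistics. Swapping in `median()` for the summary is a suggestion here, not something the tutorial code does.

```python
# sketch: compare mean and median on a small sample that includes the outlier
from statistics import mean, median

# a few word counts copied from the report above, including the outlier
counts = [387, 415, 611, 673, 42974]

print(f'Mean: {mean(counts):.1f}')
print(f'Median: {median(counts)}')
```

For this sample, the mean is dominated by the single very long post, while the median stays close to the typical article length.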

An interesting alternative would be to set up a pipeline between the threads in the two thread pools. We could then push Hacker News URLs into the first thread pool; tasks in that pool could download and extract URLs and send individual URLs into the second thread pool, which would then output tuples of word counts and URLs.

Queues could be used to connect the elements of the pipeline. It would be a fun exercise, but perhaps a little overkill for this little application.
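A rough sketch of what such a pipeline might look like is given below. It is only one possible design under stated assumptions: `fake_get_urls()` and `fake_word_count()` are hypothetical stand-ins for get_hn_article_urls() and url_word_count() so that the sketch runs without a network connection, the second pool size of 10 is arbitrary, and each producer signals completion by putting `None` on the queue.

```python
# sketch: connect two thread pools with a queue of individual links
from queue import Queue
from concurrent.futures import ThreadPoolExecutor

# hypothetical stand-in for get_hn_article_urls() that avoids the network
def fake_get_urls(page):
    return [f'{page}/article{i}' for i in range(3)]

# hypothetical stand-in for url_word_count() that avoids the network
def fake_word_count(url):
    return (len(url), url)

# stage 1 task: extract links from one page and push each onto the queue
def produce_links(page, queue):
    try:
        for link in fake_get_urls(page):
            queue.put(link)
    finally:
        # always signal that this producer is finished
        queue.put(None)

# push pages through both stages and return (count, url) tuples
def run_pipeline(pages):
    queue = Queue()
    futures = []
    with ThreadPoolExecutor(len(pages)) as stage1:
        with ThreadPoolExecutor(10) as stage2:
            for page in pages:
                stage1.submit(produce_links, page, queue)
            # consume links as they arrive until every producer has signalled
            finished = 0
            while finished < len(pages):
                link = queue.get()
                if link is None:
                    finished += 1
                    continue
                futures.append(stage2.submit(fake_word_count, link))
    # both pools have shut down, so every future is done
    return [future.result() for future in futures]

results = run_pipeline(['page1', 'page2'])
for count, url in sorted(results):
    print(count, url)
```

The benefit of this structure is that word counting can start as soon as the first link is extracted, rather than waiting for all pages to be processed.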


Extensions

This section lists ideas for extending the tutorial.

- More Robust Extraction of URLs: Update the example to be robust to changes to the styling of the Hacker News page so that we can still extract article URLs if the CSS class is removed or changes.

- Separate Download From Parsing: Update the examples so that only the blocking IO is performed in another thread whereas the parsing can be performed in the main thread. Does it impact the overall execution speed?

- Use Queues to Feed Work Between Pools: Update the final example so that the first pool of workers passes individual links to the second pool for processing using queue objects.
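As a starting point for the second idea, the sketch below keeps only the blocking step in worker threads and does the parsing in the main thread. It rests on several assumptions: `fake_download()` is a hypothetical stand-in that returns a fixed HTML string instead of using the network, and a small `TextExtractor` class built on the standard library's `html.parser` is used in place of BeautifulSoup so the sketch has no third-party dependency.

```python
# sketch: download in worker threads, parse and count in the main thread
from concurrent.futures import ThreadPoolExecutor
from html.parser import HTMLParser

# hypothetical stand-in for the blocking download; returns html text
def fake_download(url):
    return f'<html><body><p>Hello from {url}</p></body></html>'

# minimal text extractor (assumed here in place of BeautifulSoup)
class TextExtractor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.chunks = []

    # collect the text found between tags
    def handle_data(self, data):
        self.chunks.append(data)

# count whitespace-separated tokens in the text of an html document
def word_count(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return len(' '.join(parser.chunks).split())

# placeholder urls, assumed for illustration
urls = ['https://example.com/a', 'https://example.com/b']
with ThreadPoolExecutor(len(urls)) as executor:
    # only the blocking io runs in worker threads
    pages = list(executor.map(fake_download, urls))

# parsing and counting happen in the main thread
results = [(word_count(html), url) for html, url in zip(pages, urls)]
print(results)
```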

Share your extensions in the comments below; it would be great to see what you come up with.

Further Reading

This section provides additional resources that you may find helpful.

Books

- ThreadPoolExecutor Jump-Start, Jason Brownlee, (my book!)

- Concurrent Futures API Interview Questions

- ThreadPoolExecutor Class API Cheat Sheet

I also recommend specific chapters from the following books:

- Effective Python, Brett Slatkin, 2019.

- See Chapter 7: Concurrency and Parallelism

- Python in a Nutshell, Alex Martelli, et al., 2017.

- See Chapter 14: Threads and Processes

Guides

- Python ThreadPoolExecutor: The Complete Guide

- Python ProcessPoolExecutor: The Complete Guide

- Python Threading: The Complete Guide

- Python ThreadPool: The Complete Guide

Takeaways

In this tutorial, you discovered how to analyze multiple articles listed on Hacker News concurrently using thread pools. You learned:

- How to develop a serial program to download and analyze the word count of articles listed on Hacker News.

- How to use the ThreadPoolExecutor in Python to create and manage pools of worker threads for IO-bound tasks.

- How to use ThreadPoolExecutor to download and analyze 30 to 90 Hacker News articles concurrently.

Do you have any questions?

Leave your question in a comment below and I will reply fast with my best advice.

Photo by Thomas Schweighofer on Unsplash
